A Complete Guide to Spherical Equivariant Graph Transformers

A 2.5-hour breakdown of spherical equivariant graph neural networks (EGNNs) and a deconstruction of the SE(3)-Transformer model.

Update: a technical version of this article is now published on arXiv! If you find this article helpful for your publications, please consider citing the published version below:

@article{tang2025complete,

title={A Complete Guide to Spherical Equivariant Graph Transformers},

author={Tang, Sophia},

journal={arXiv preprint arXiv:2512.13927},

year={2025}

}Introduction

Over the past month, I’ve become obsessed with understanding the architecture behind the protein-prediction model, RoseTTAFold, which ultimately led me down a deep (but fascinating) rabbit hole of geometric graph neural networks (geometric GNNs).

The RosseTTAFold model leverages a three-path approach that incorporates multiple classes of input data (sequence MSA, 2D distance map, and 3D coordinates) to predict protein structure. Here, I will break down how to handle 3D geometric data for deep learning, which I find to be a beautiful example of how concepts from quantum physics, mathematics, biology, and computer science work together.

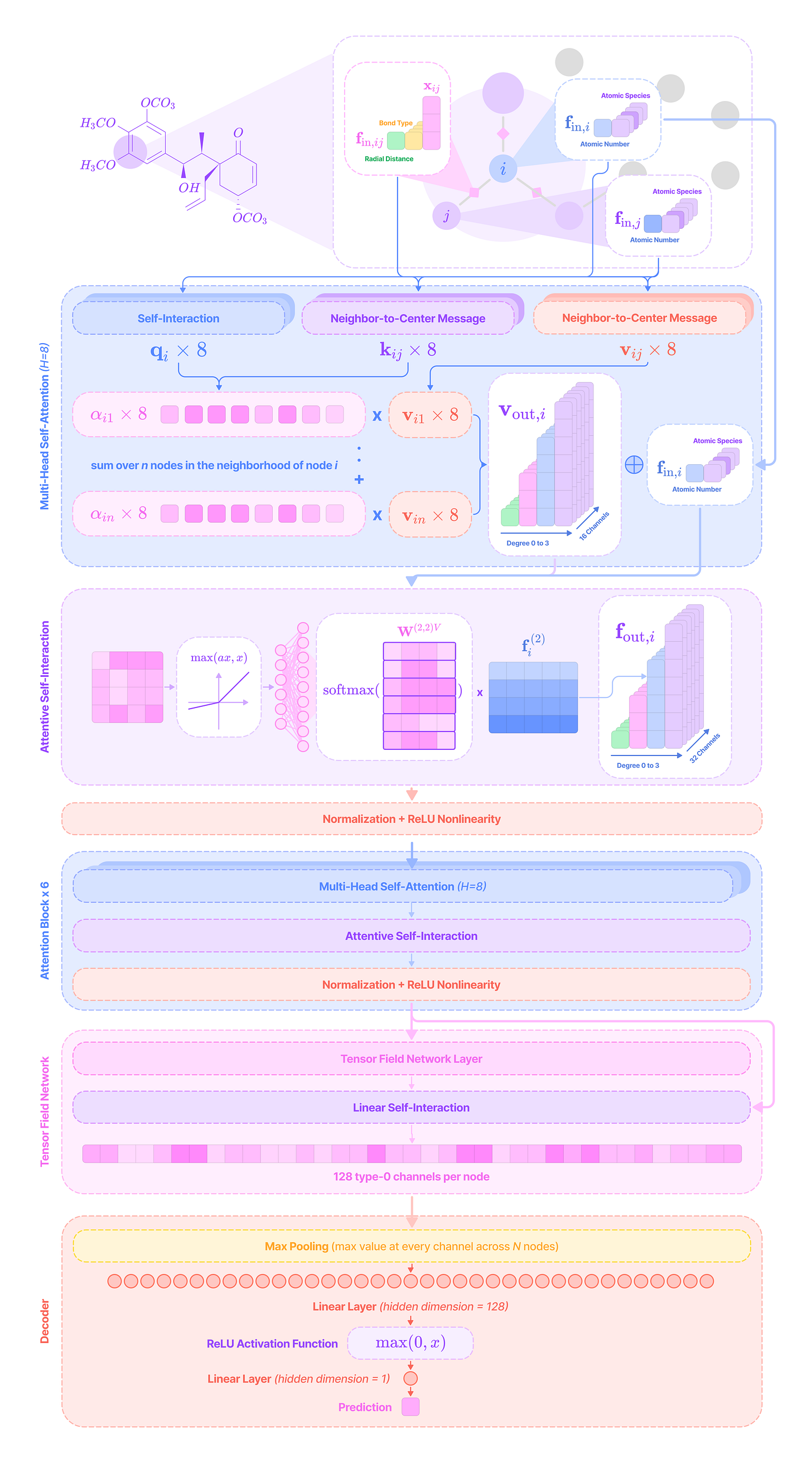

Generative protein structure prediction models like RoseTTAFold and AlphaFold have two primary modules: the sequence module that converts protein sequence data into a 3D representation of the protein, and a structure module where geometric GNNs come in. At a high level, geometric GNNs in the structure module function by:

Converting the initial 3D representation into a graph with nodes representing amino acids, arrays of feature data corresponding to each node, spatial coordinates describing a node’s relative location in 3D space, and edges containing information on pair-wise interactions between residues.

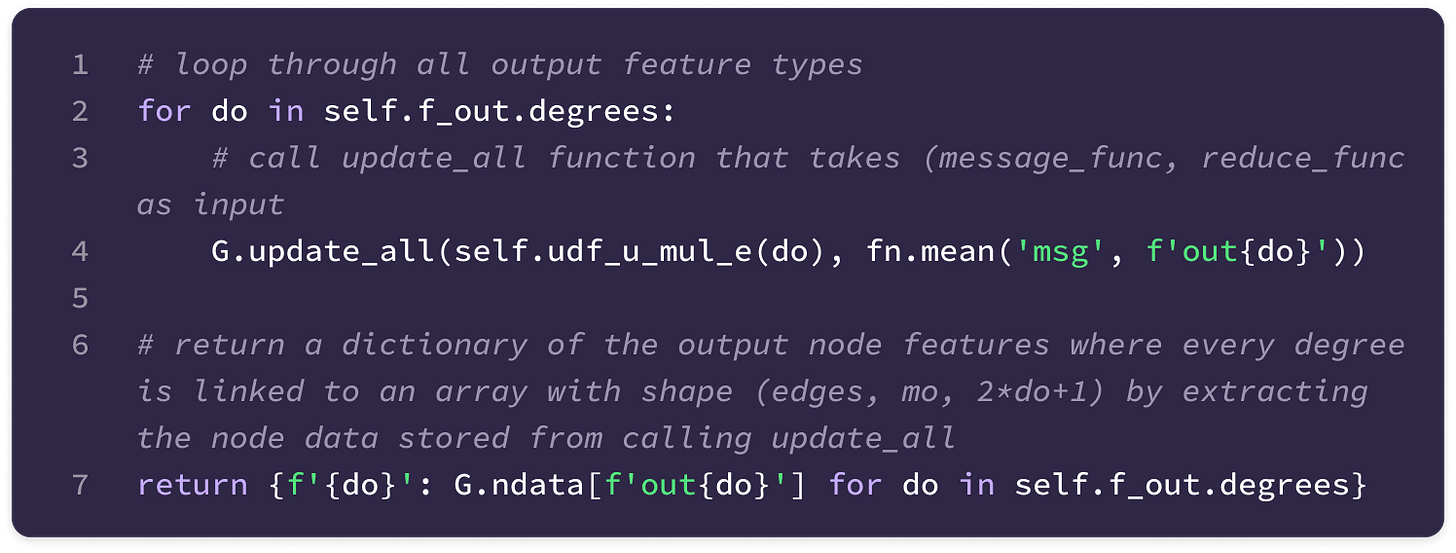

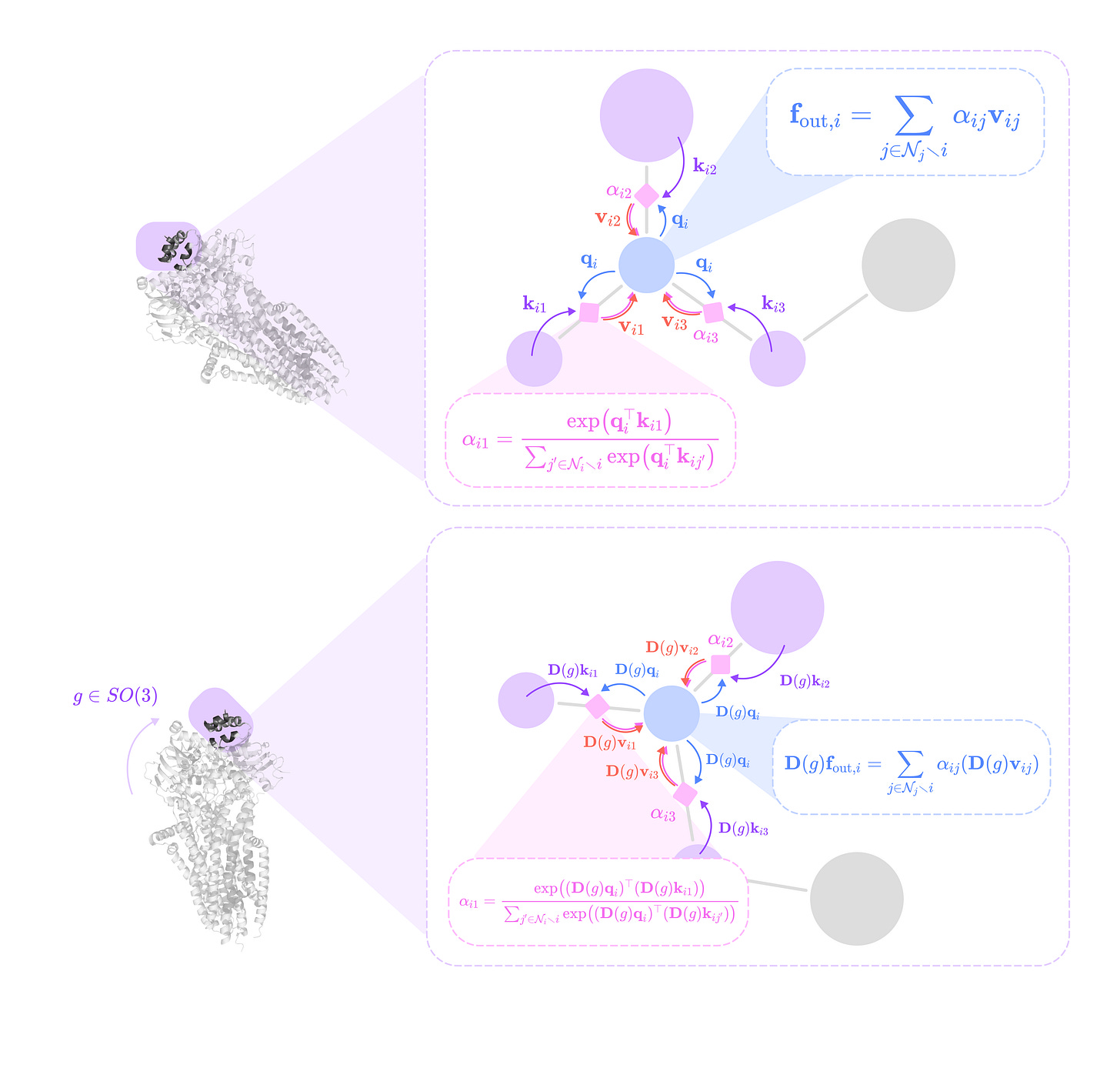

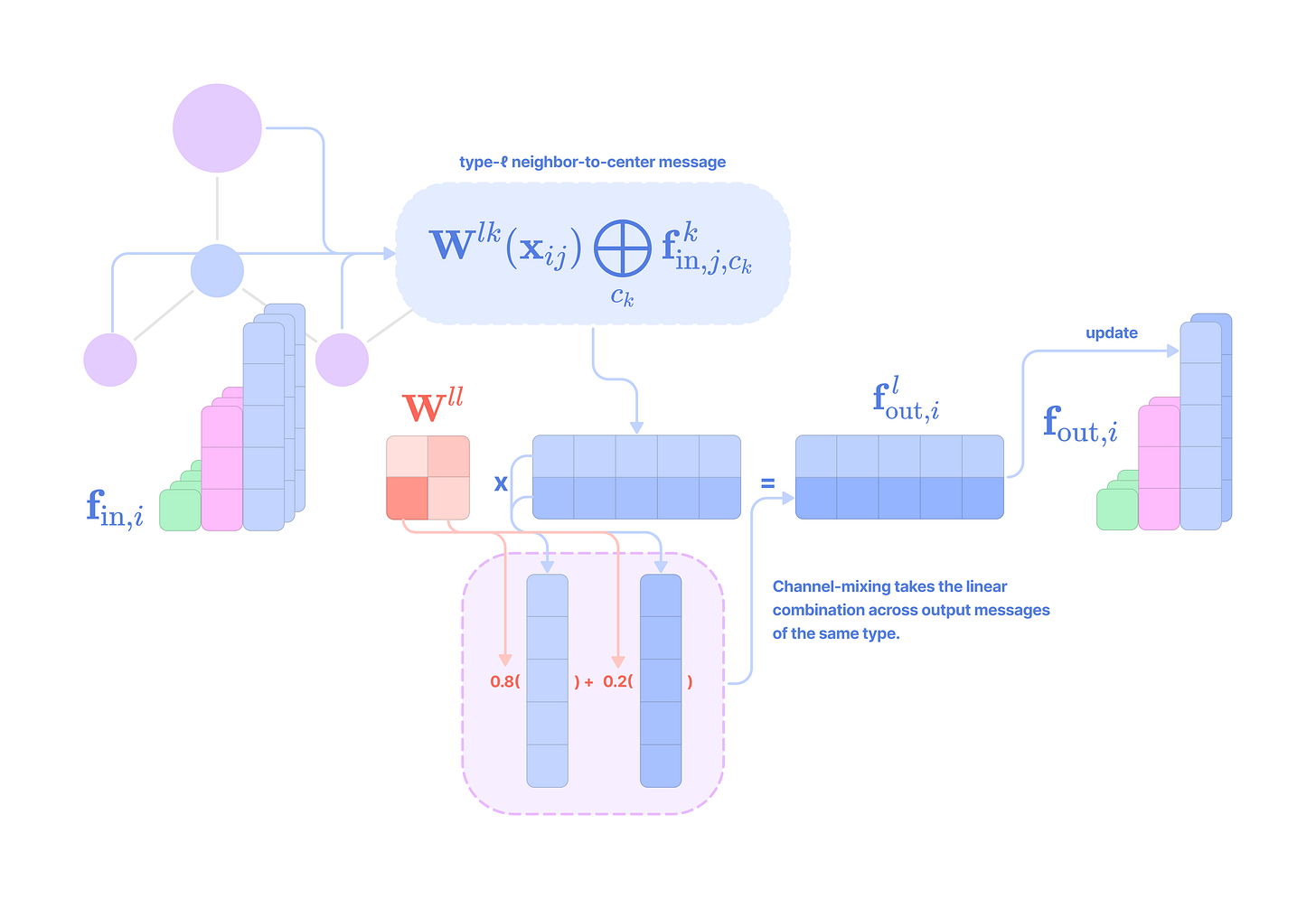

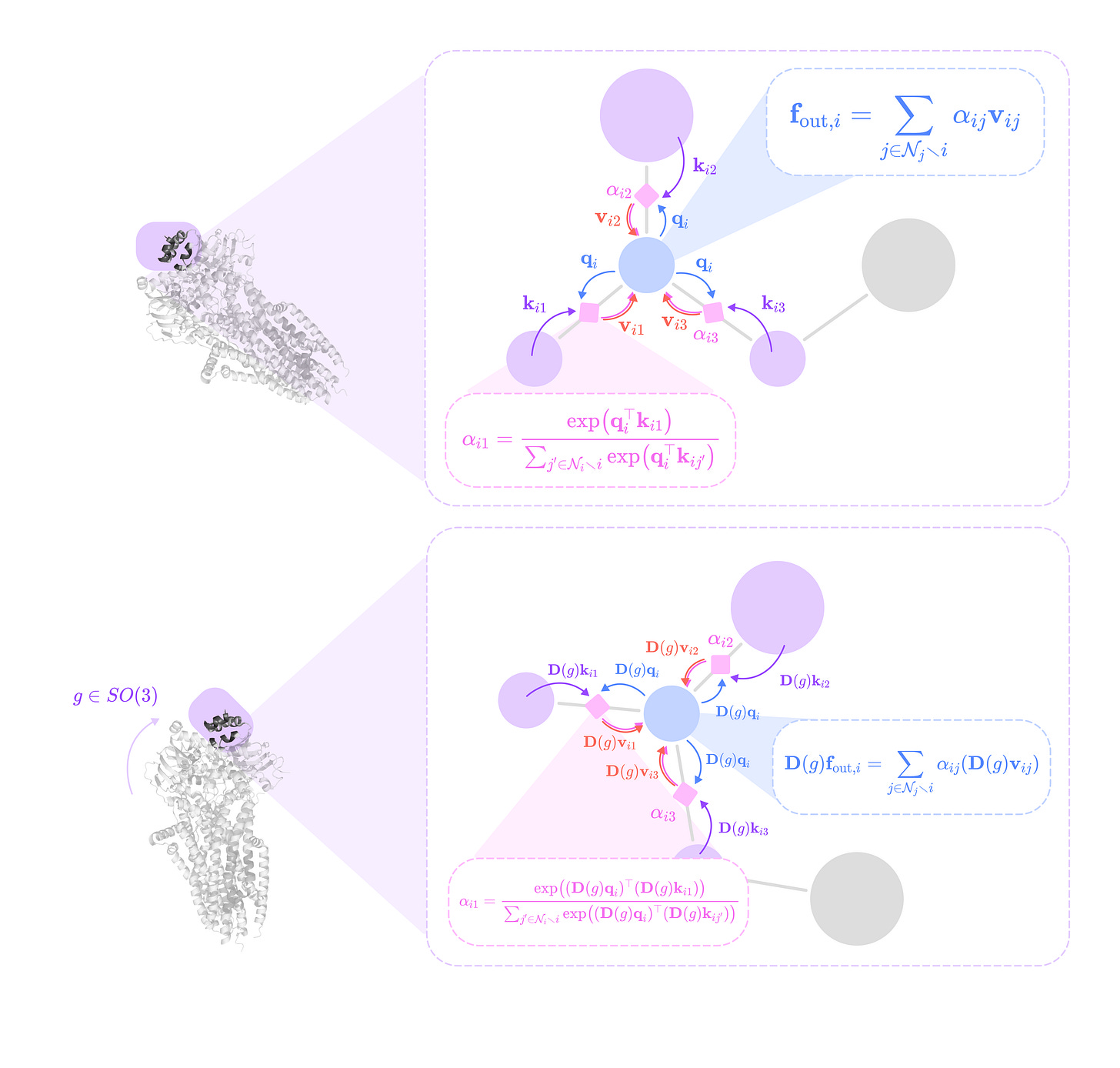

Computing updates for the feature embedding of each node from all connected nodes with an equivariant message-passing layer, which leverages learnable kernels that respect the spatial symmetries of the graph to transform the feature embeddings of adjacent nodes into a message used to update the feature embedding at the center node.

Aggregating messages from all adjacent nodes with a permutation-invariant and rotation-equivariant operator (e.g., mean or sum) that does not depend on the order in which the messages are aggregated and transforms equivariantly under rotation of the graph.

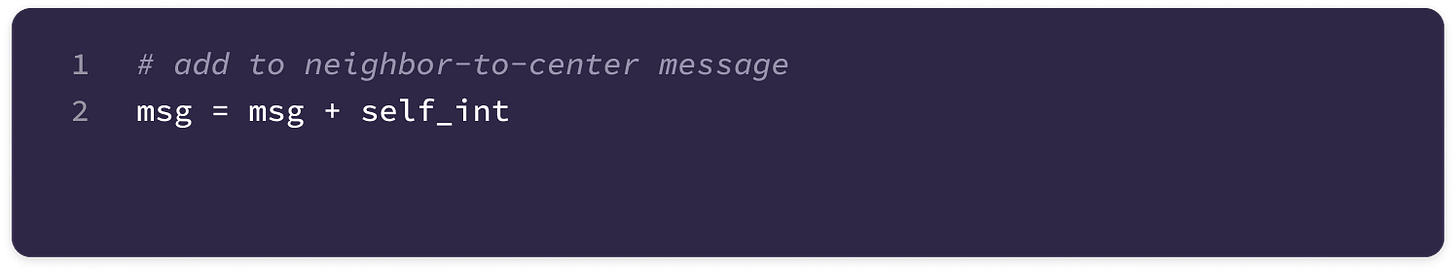

Updating the feature embedding of the center node using a combination of its input features (self-interaction) and the aggregated message from adjacent nodes (neighbor-to-center message-passing).

Performing the message-passing process for every node in the graph in parallel to generate an updated set of coordinates and displacement vectors between residues or atoms in the protein backbone (C-alpha atom, amine, and carboxyl groups).

Iteratively refining the graph based on the updated structure to minimize the loss function until convergence. The final graph represents the 3D protein structure with high spatial precision.

Not only are geometric GNNs useful for protein structure prediction, but they also have extensive applications in chemical property prediction, molecular dynamics simulation, and the generative design of biomolecules.

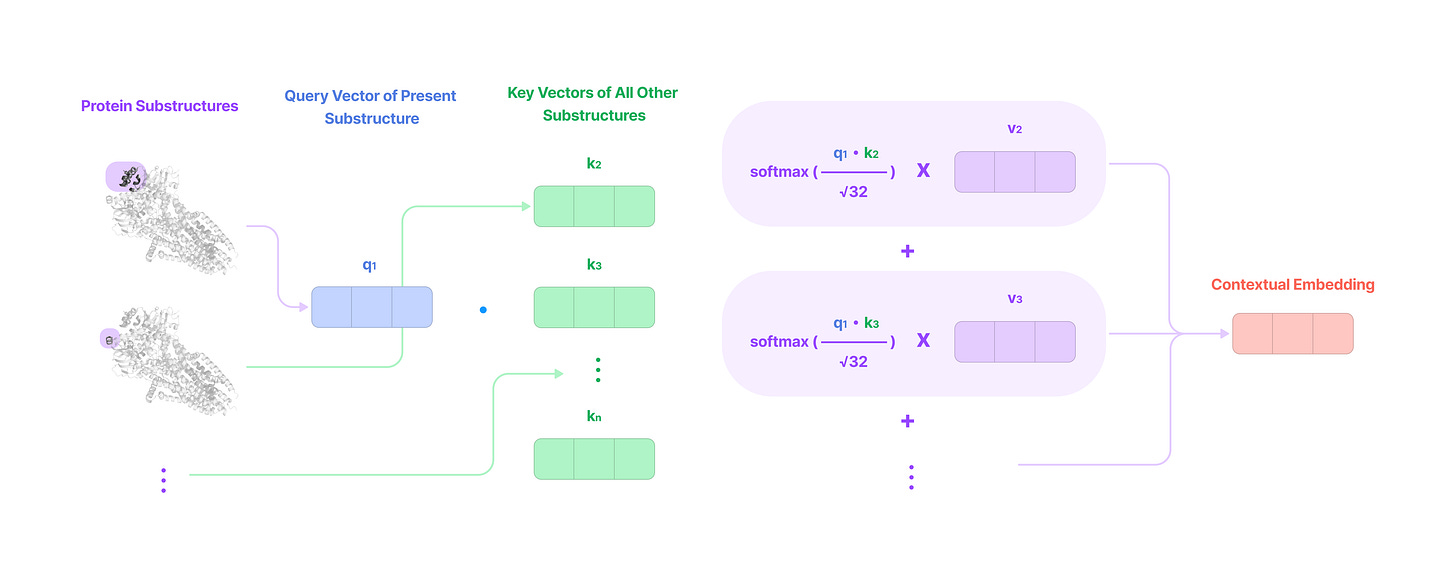

This article will focus on a specific type of geometric GNN called Spherical Equivariant GNNs (Spherical EGNNs), which are extremely useful in tasks dealing with geometric graph representations of objects with rotational symmetries, like molecules and proteins. Then, we will describe a specific spherical EGNN called the SE(3)-Transformer that incorporates the self-attention mechanism for molecular property prediction.

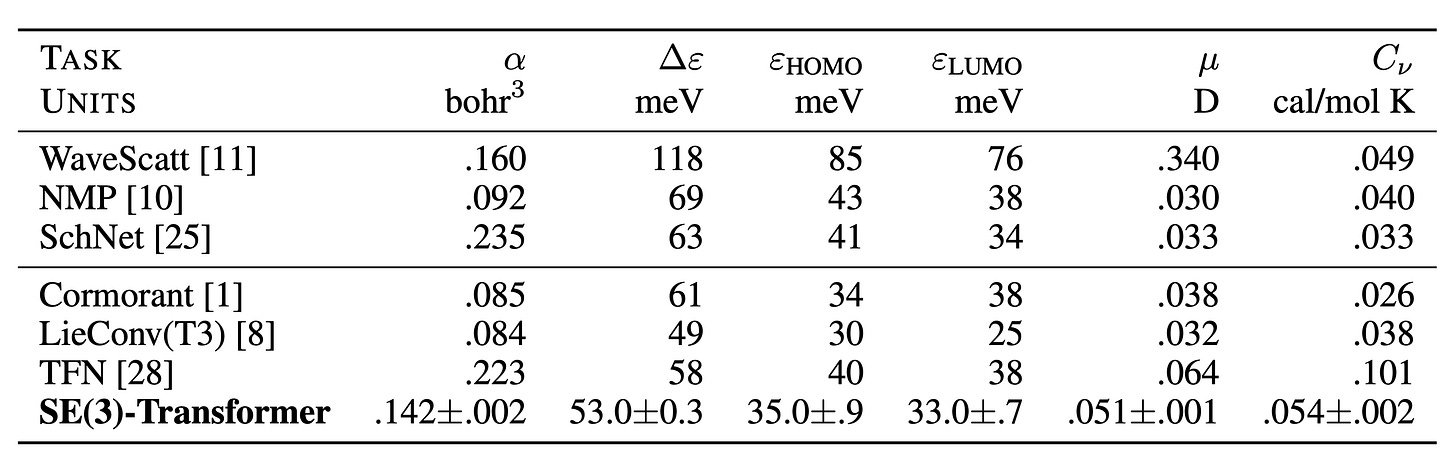

Underlying geometric GNNs are a lot of technically challenging concepts to grasp, involving quantum physics and mathematics, so this article aims to break down the fundamental concepts of spherical EGNNs intuitively and extend these concepts to deconstruct the Tensor Field Network and SE(3)-Transformer models. At the end of the article, I will discuss how these models can be applied for chemical property prediction on the QM9 dataset.

Preface

The majority of notation used in this article aligns with the convention used in the SE(3)-Transformers paper. In the original paper, specific indices distinguishing which kernels are unique and the extension to multiple channels of each feature type are omitted for brevity, but I included an expanded version in this article for completeness. Thus, the mathematical symbols denoting weights, kernels, vectors, and feature tensors have several sub- and superscripts that can refer to the following meanings:

in, out → input features and output features (after message-passing)

i → center node or destination node.

j → nodes in the neighborhood of node i with an outgoing edge pointing towards node i.

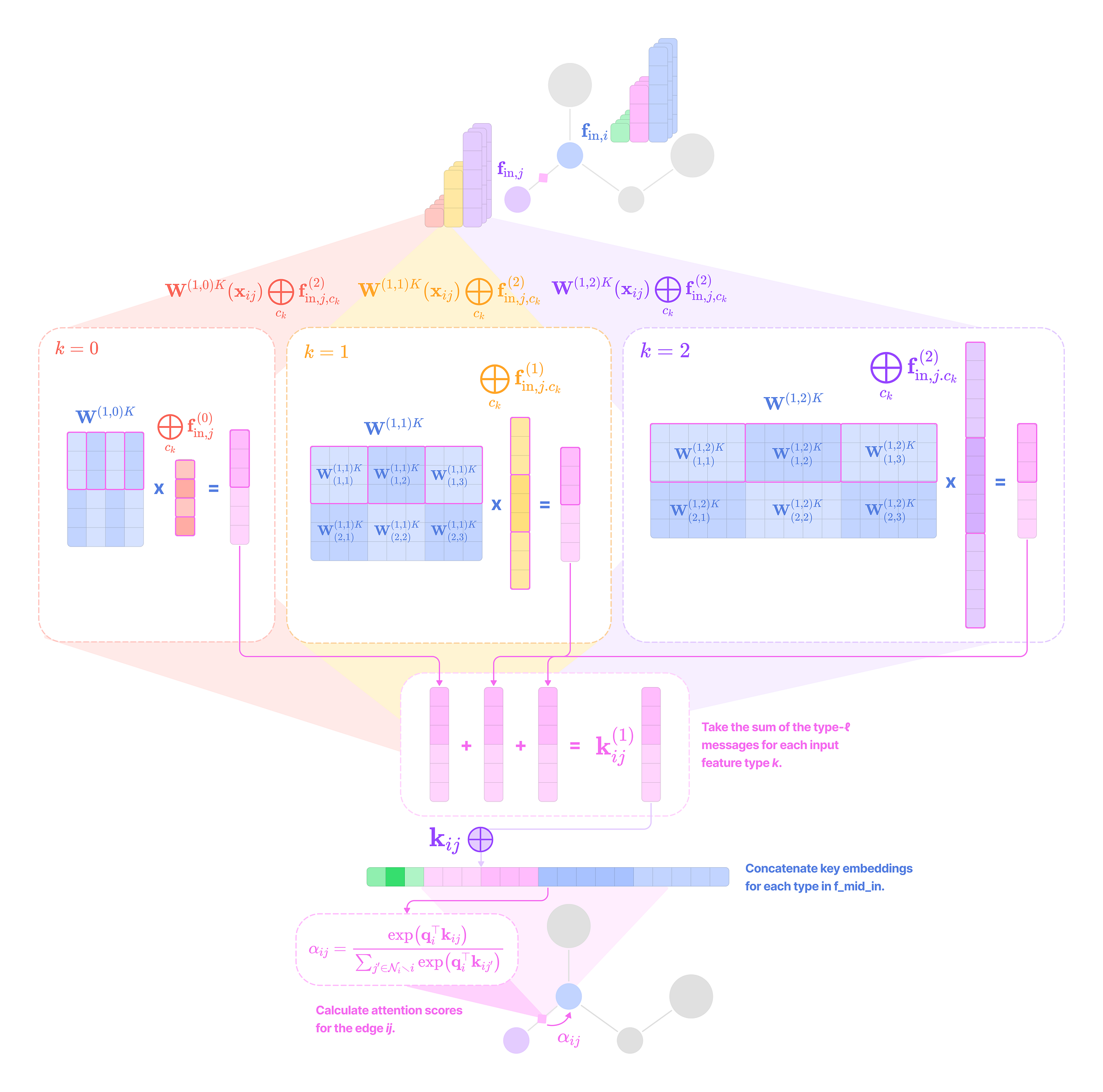

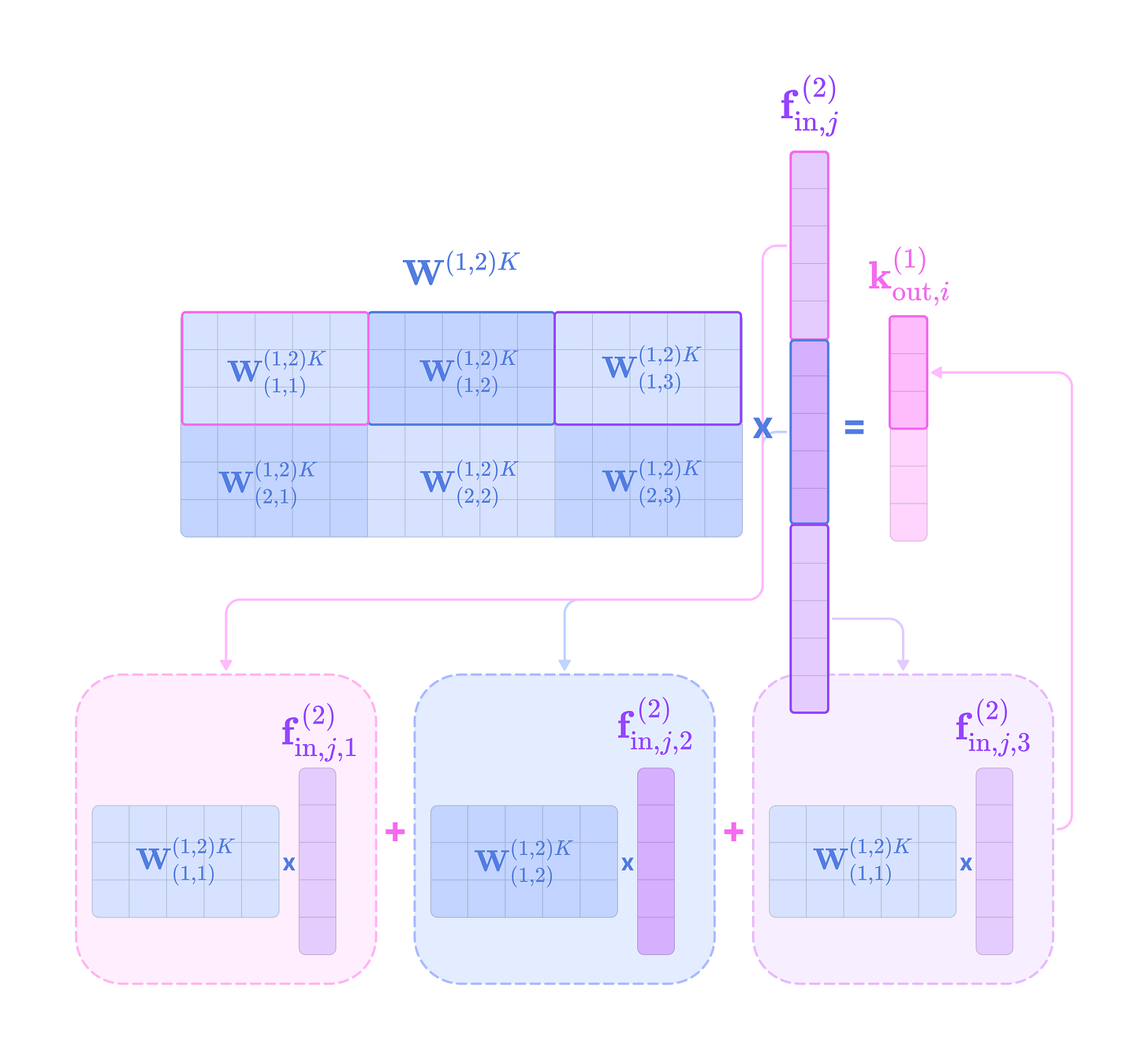

k → the type/degree of node features from the source or neighborhood nodes.

c_k → index of the type-k feature channel.

l → the type/degree of node features from the center node.

c_l → index of the type-l feature channel.

m_l, m_k, m → indices of the elements of the type-l, type-k, and type-J spherical tensors, which also correspond to magnetic quantum numbers corresponding to the angular momentum numbers l, k, and J

J → the intermediate feature types for spherical harmonics projections ranging from |k - l| to |k + l|.

ij → denotes an edge feature (displacement vector) or embedding stored in the edge (messages or key and value embeddings) from the neighborhood node j to the center node i.

lk→ denotes equivariant kernels that transform tensors from type-k to type-l features.

Q, K, V → denotes the kernels that transform features into query, key, and value embeddings, respectively.

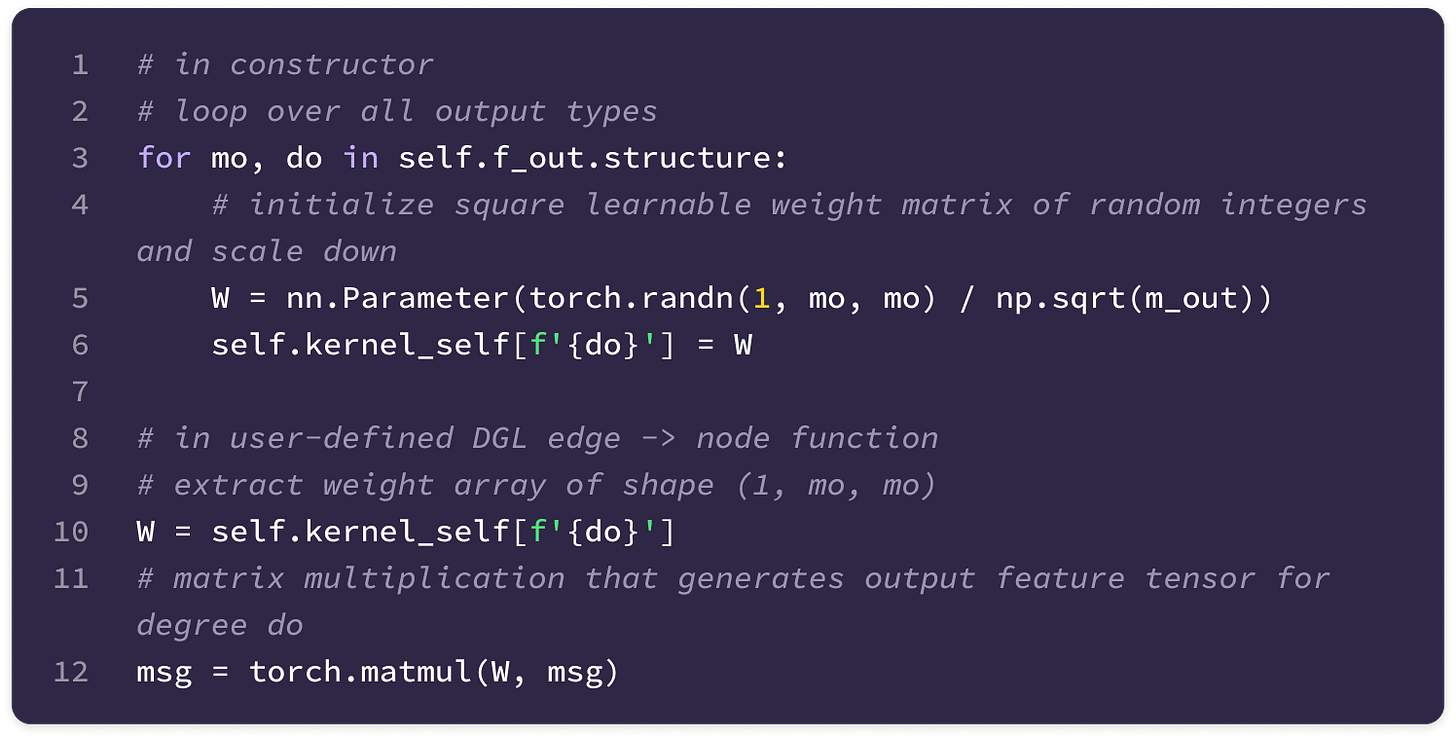

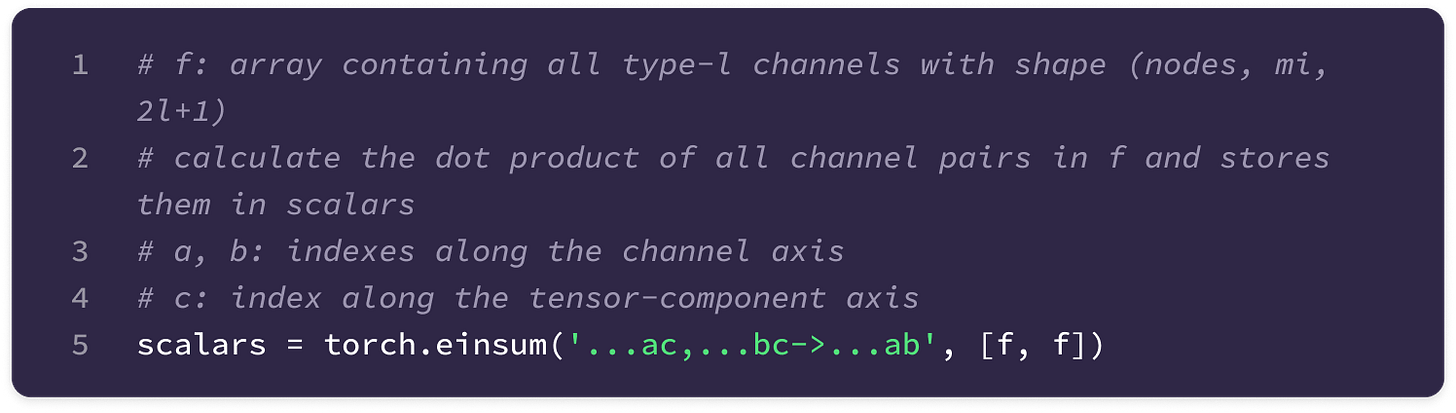

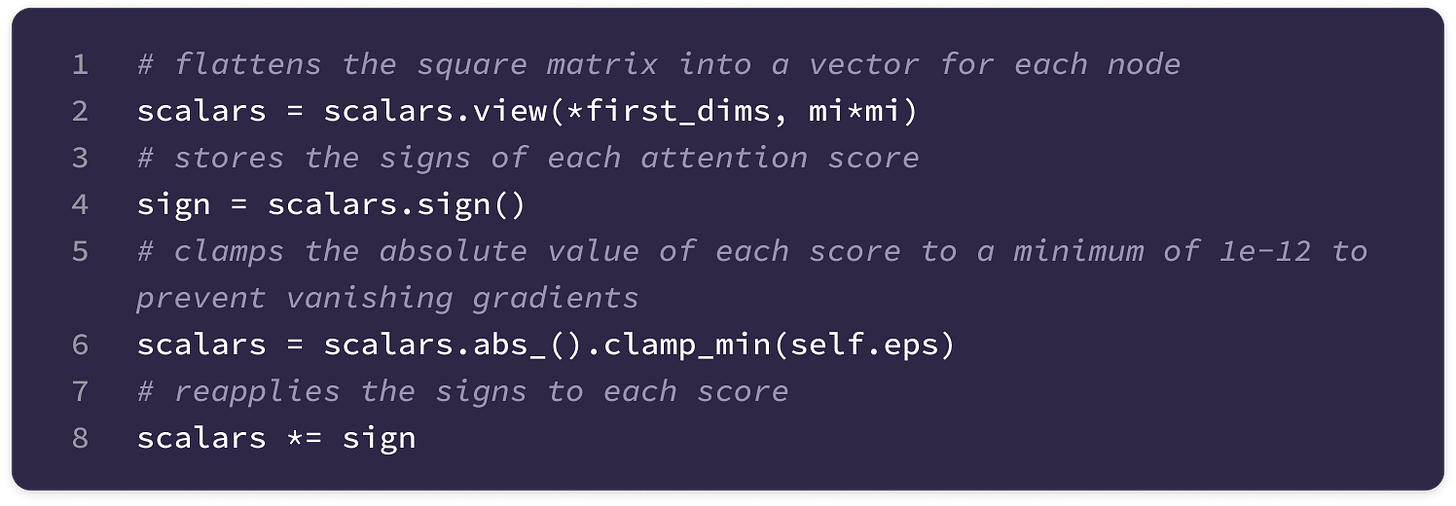

mi → total input channels (or multiplicity) of degree di.

mo → total output channels of degree do.

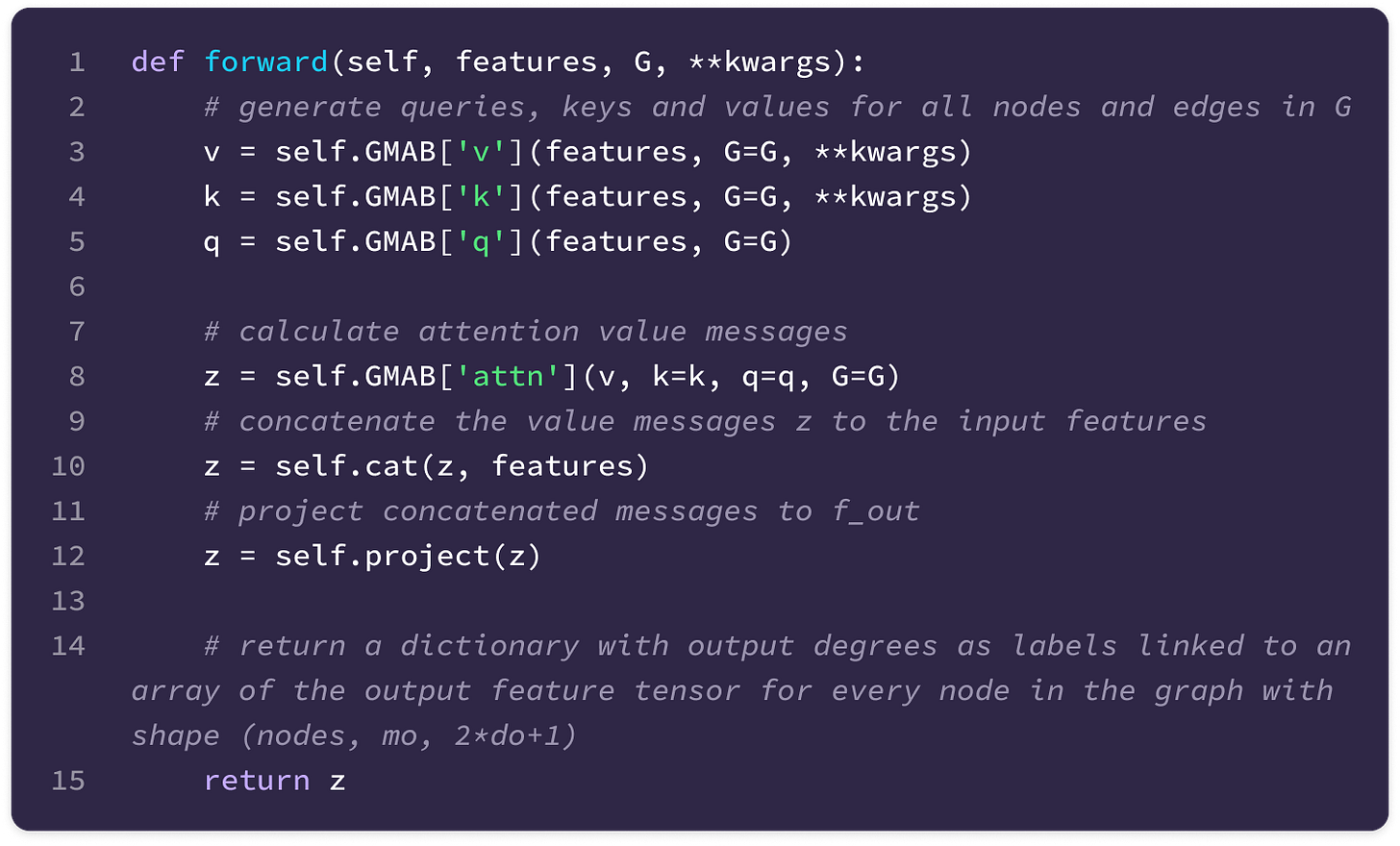

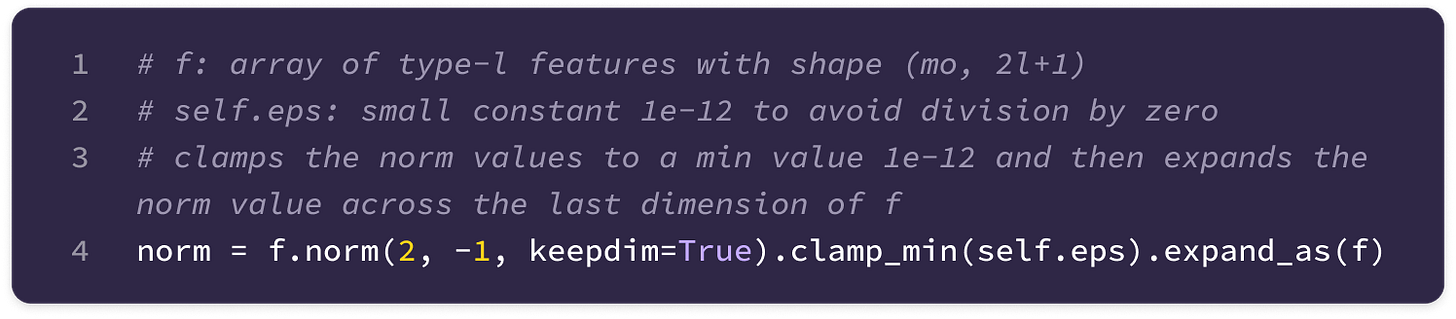

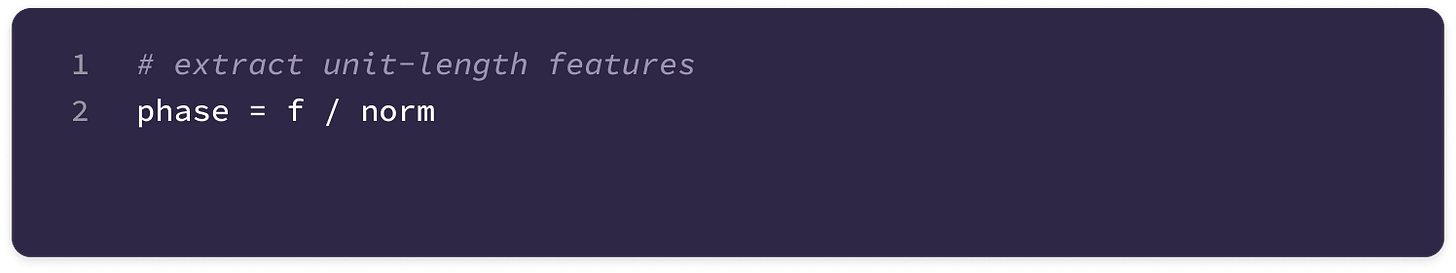

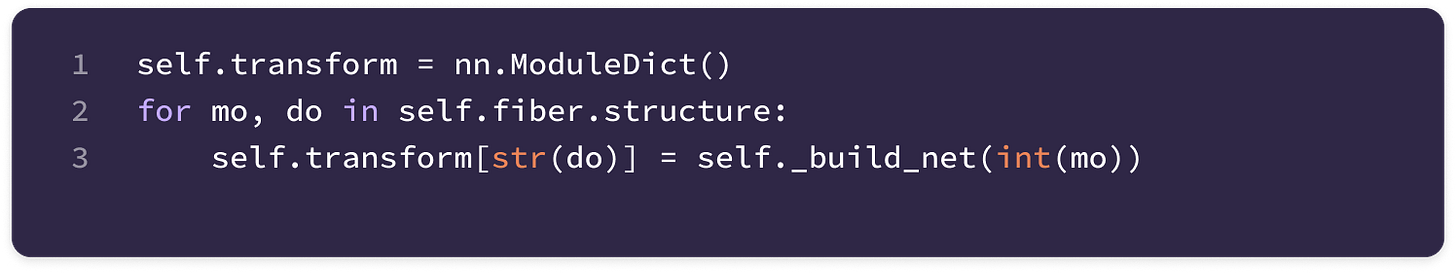

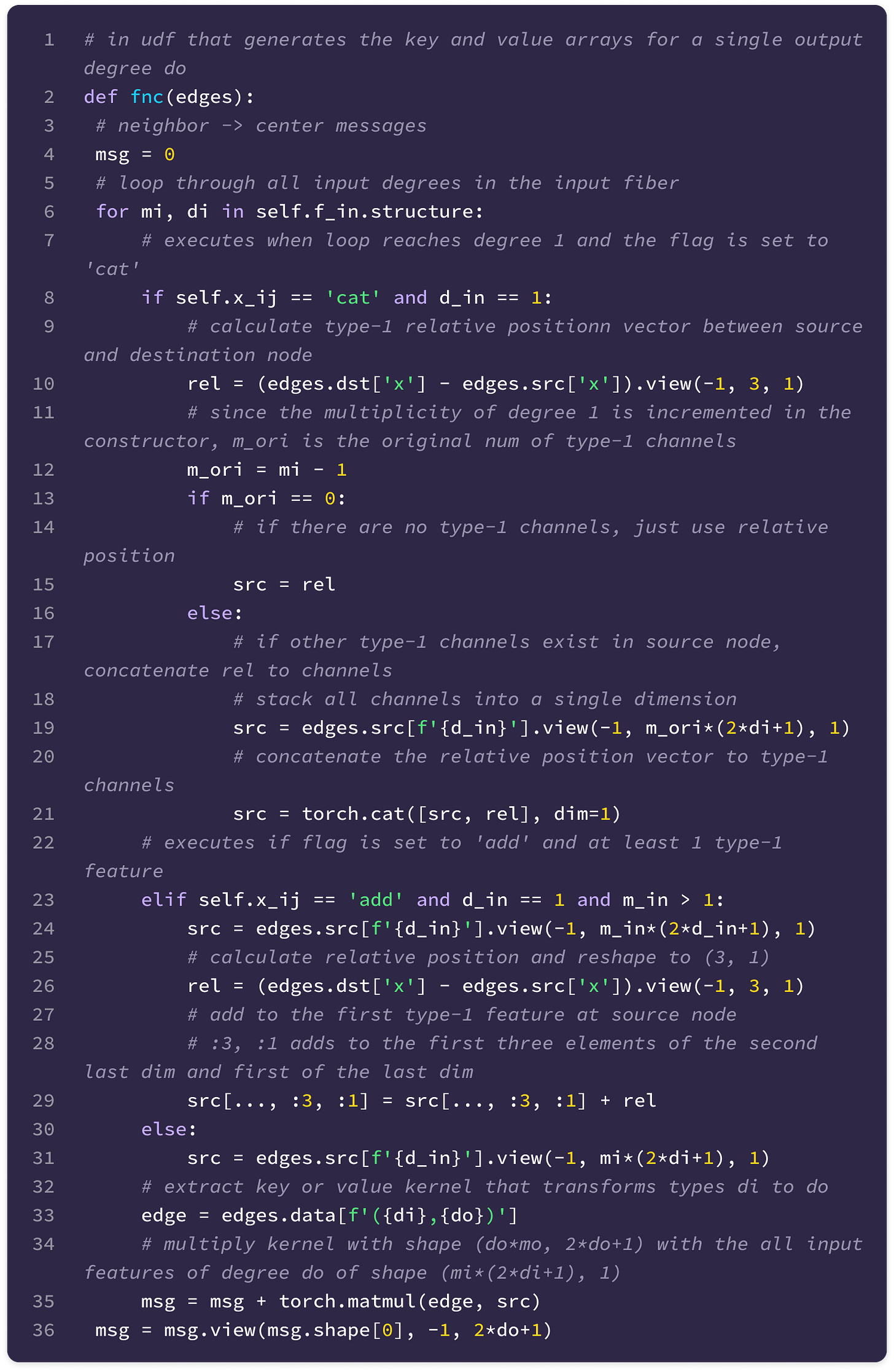

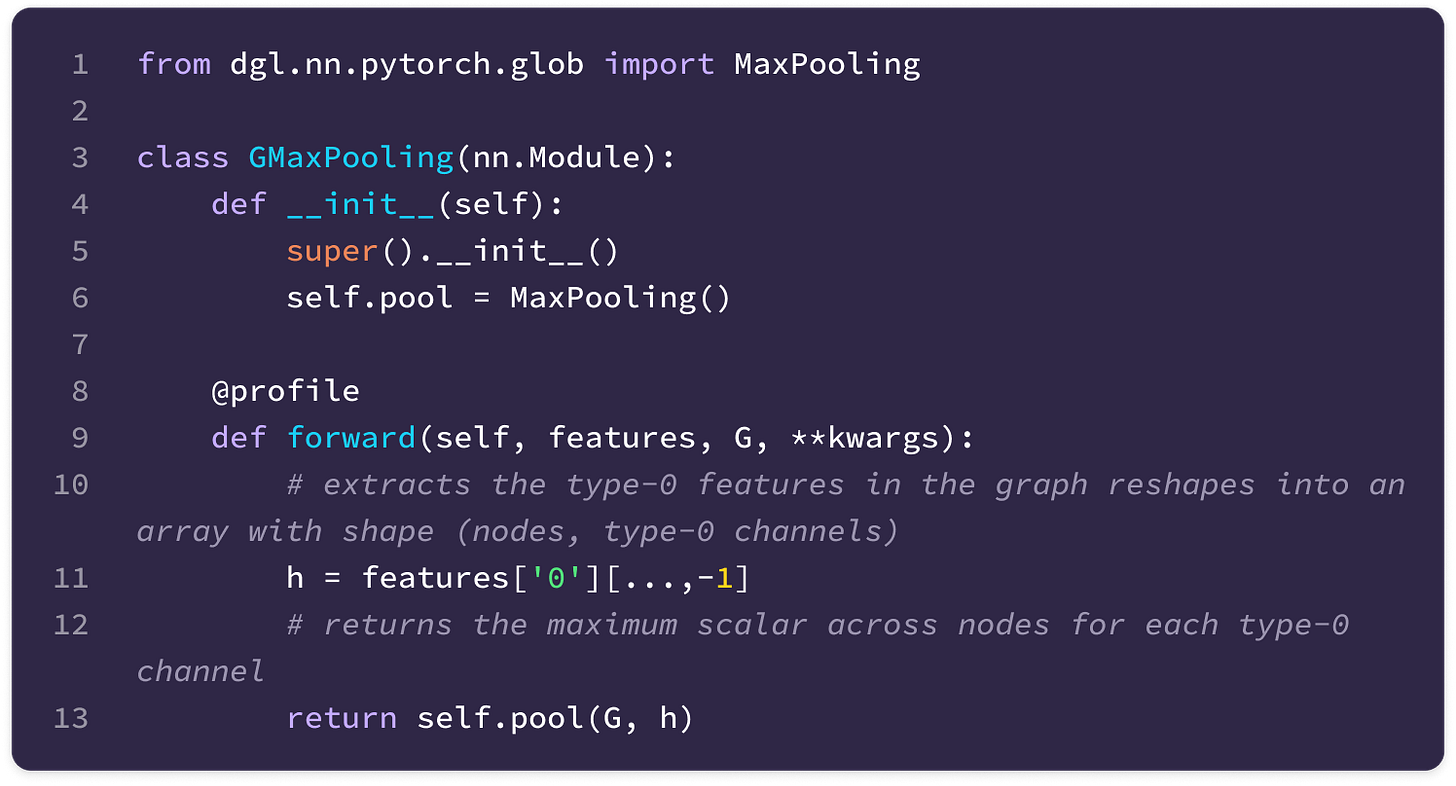

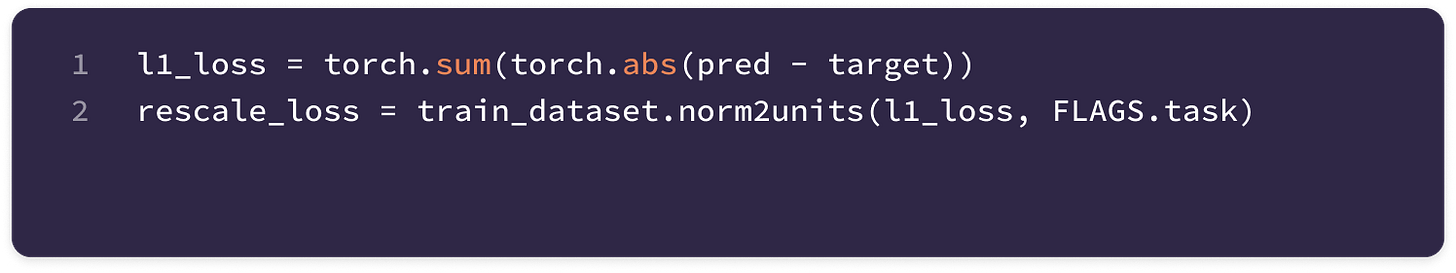

Throughout the article, I have included code from the full PyTorch implementation of the SE(3)-Transformer on GitHub that I fully annotated and made slight modifications for clarity. I’ll be breaking down most of the classes found in the modules file on GitHub, but placing more emphasis on the mathematics and intuition surrounding the code, so I encourage you to refer to the full implementation to connect the snippets and intuition you gain from this article to the full functions and classes.

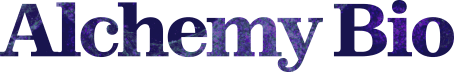

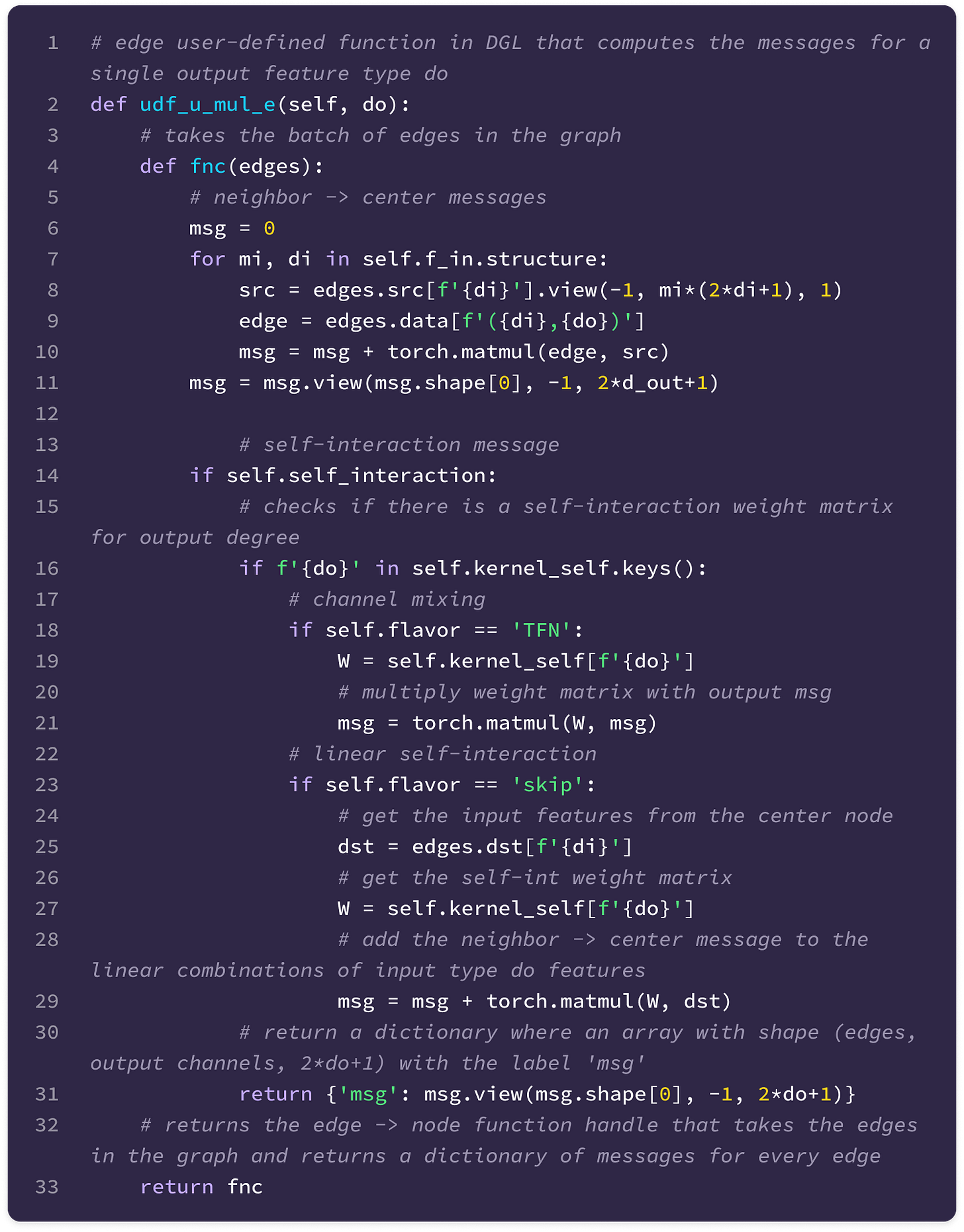

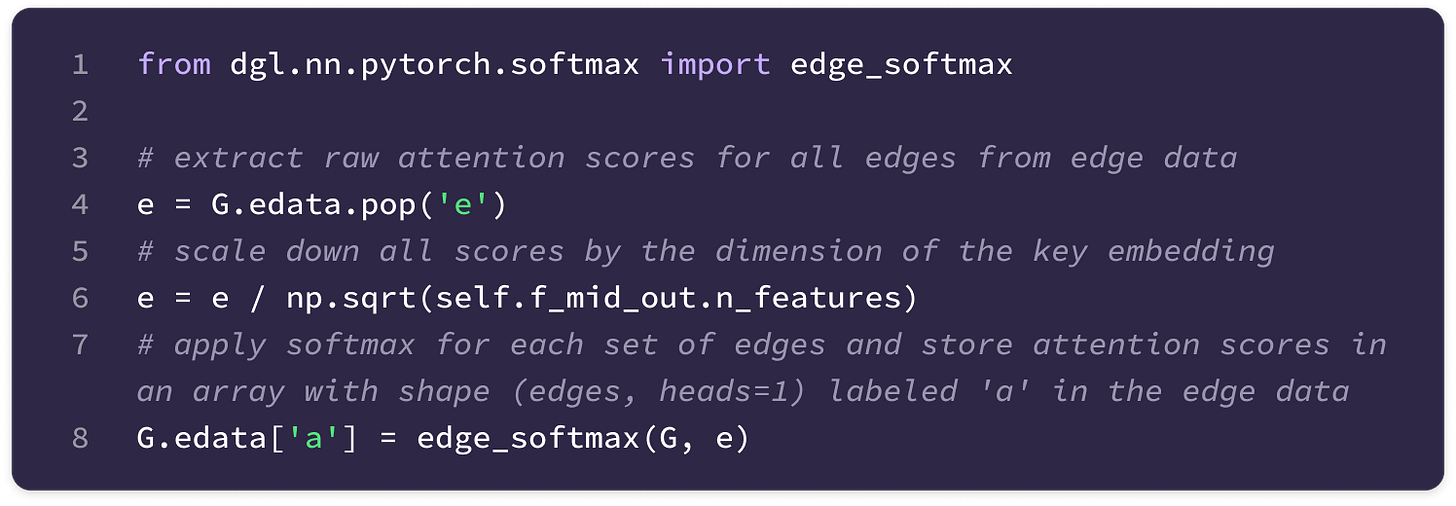

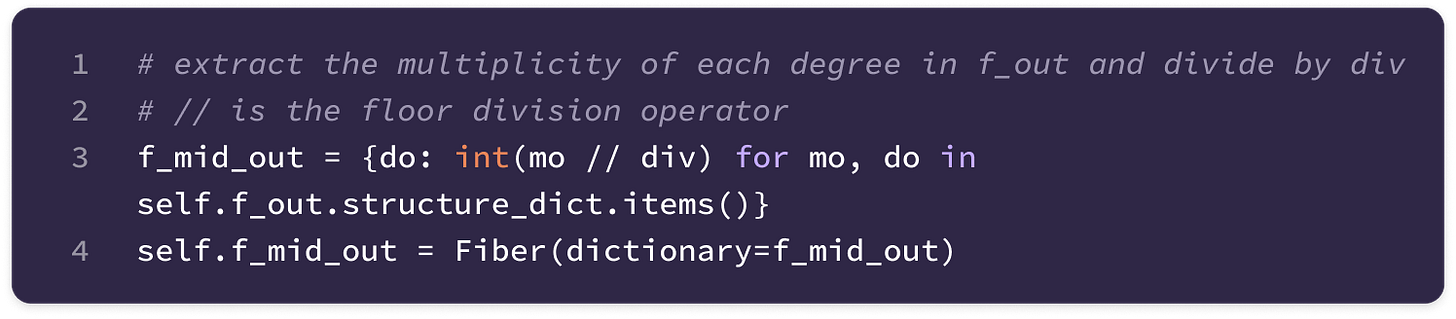

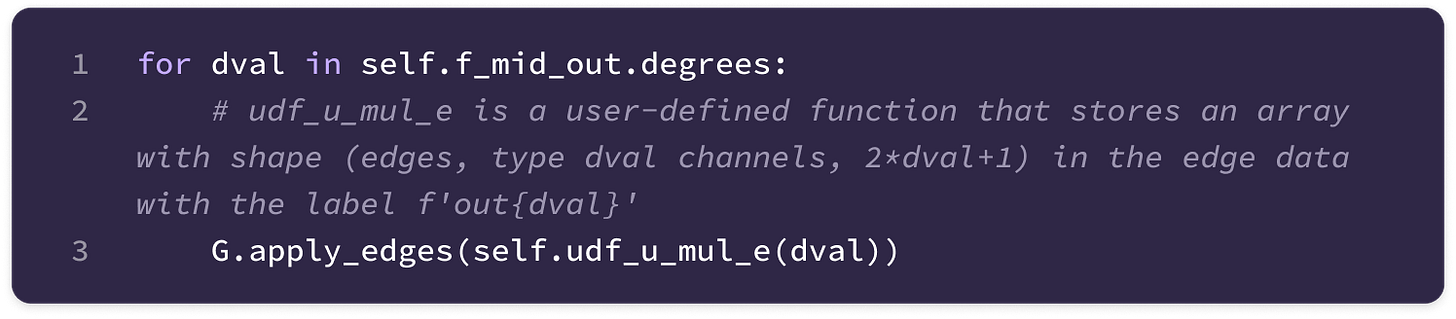

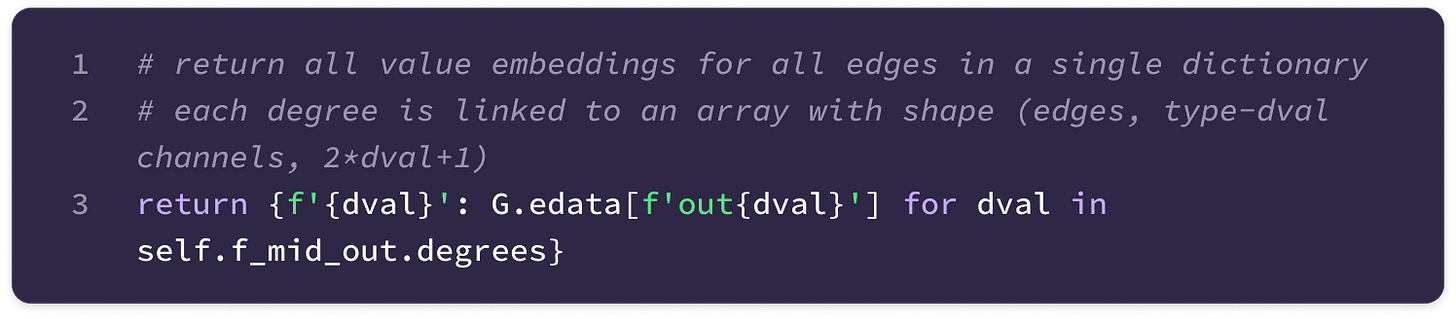

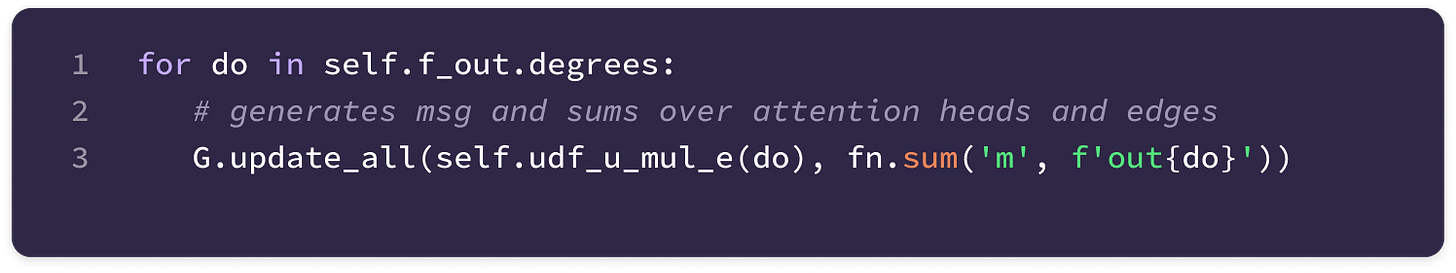

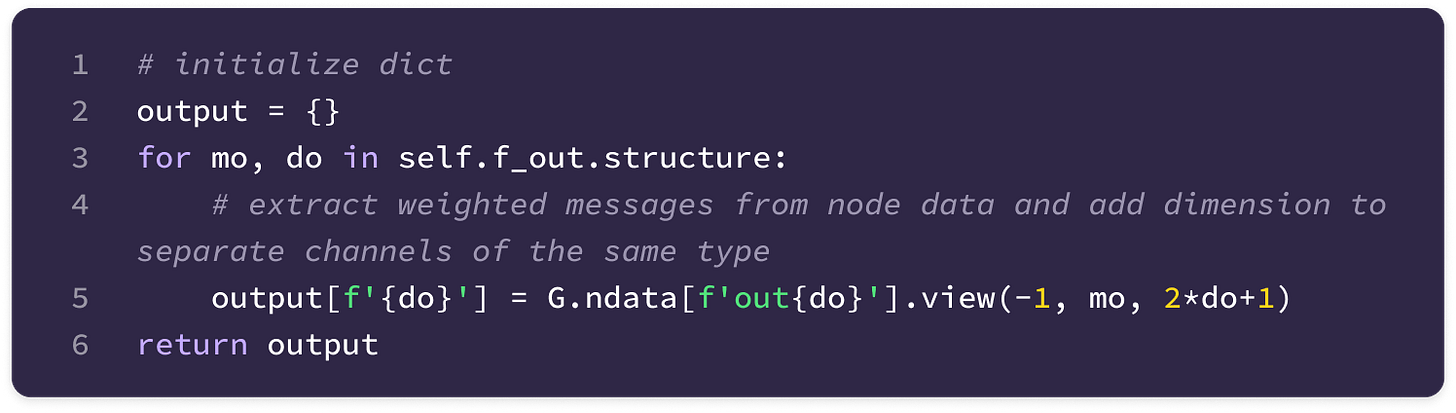

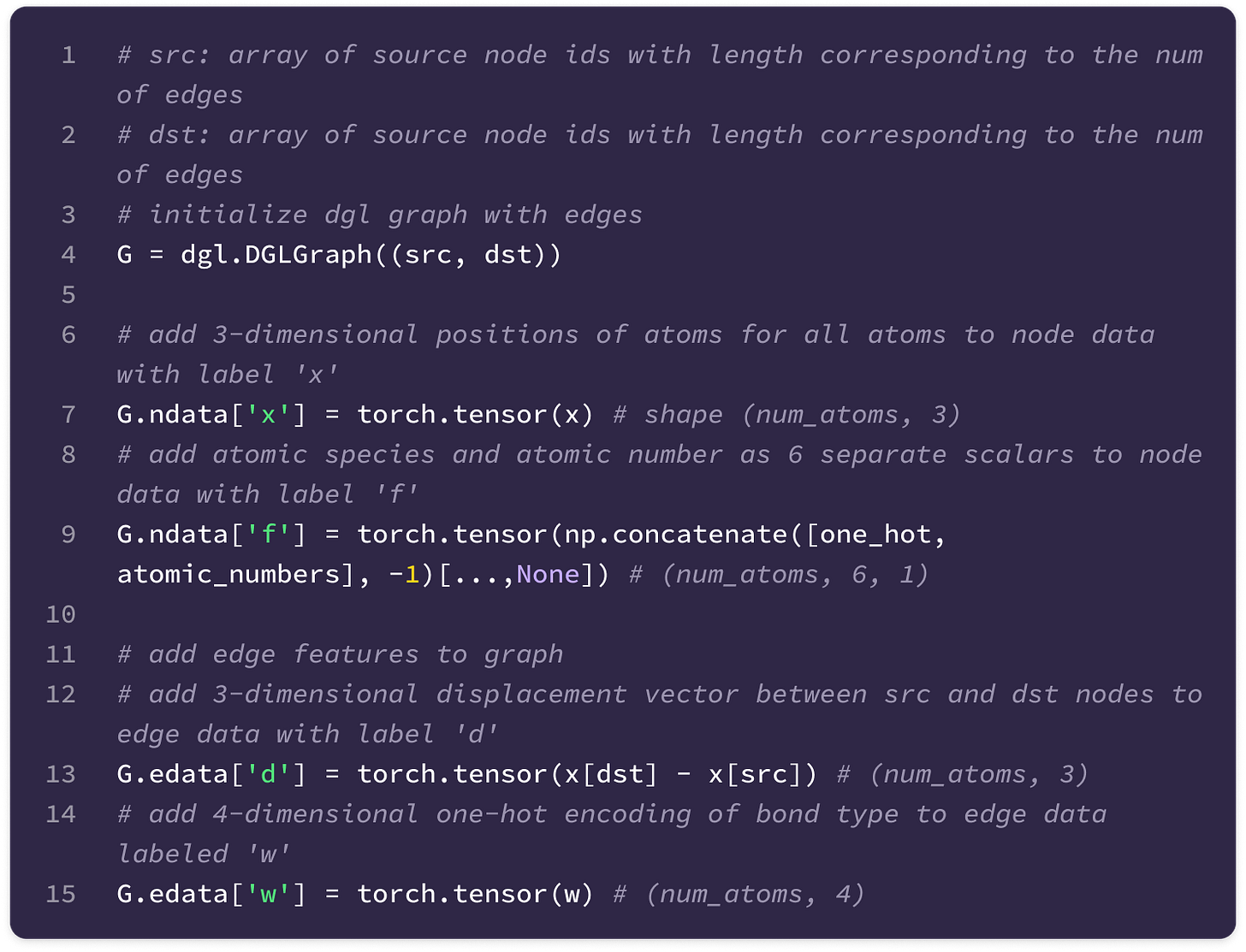

The code uses a Python library called Deep Graph Library (DGL) that allows the construction and handling of graph data. The library supports User-Defined Functions (UDFs) that enable users to construct novel functions that can be applied for message-passing across the entire graph (much of the code described here is encapsulated in a UDF). Here are some basic DGL notations that will be useful in interpreting the code throughout the article:

You can also find the documentation for the DGL library here.

In this article, we will be discussing geometric tensors (spherical tensors and Cartesian tensors) as well as working with the ‘tensor’ data structure in PyTorch, which refers to multidimensional arrays. To distinguish the two, I will use ‘tensor’ when referring to geometric tensors and ‘arrays’ when referring to the data structure.

When I state the shape of an array, I often omit the batch size (often the first dimension) used for smoother training and parallel computation, so the shape refers to the final dimensions of the tensor that are relevant to calculations.

Finally, since we will be discussing how attention works on geometric graphs, I highly recommend reading my previous article to get a foundational understanding of self-attention on sequence data before leveling up to three dimensions.

Preserving Rotational Equivariance

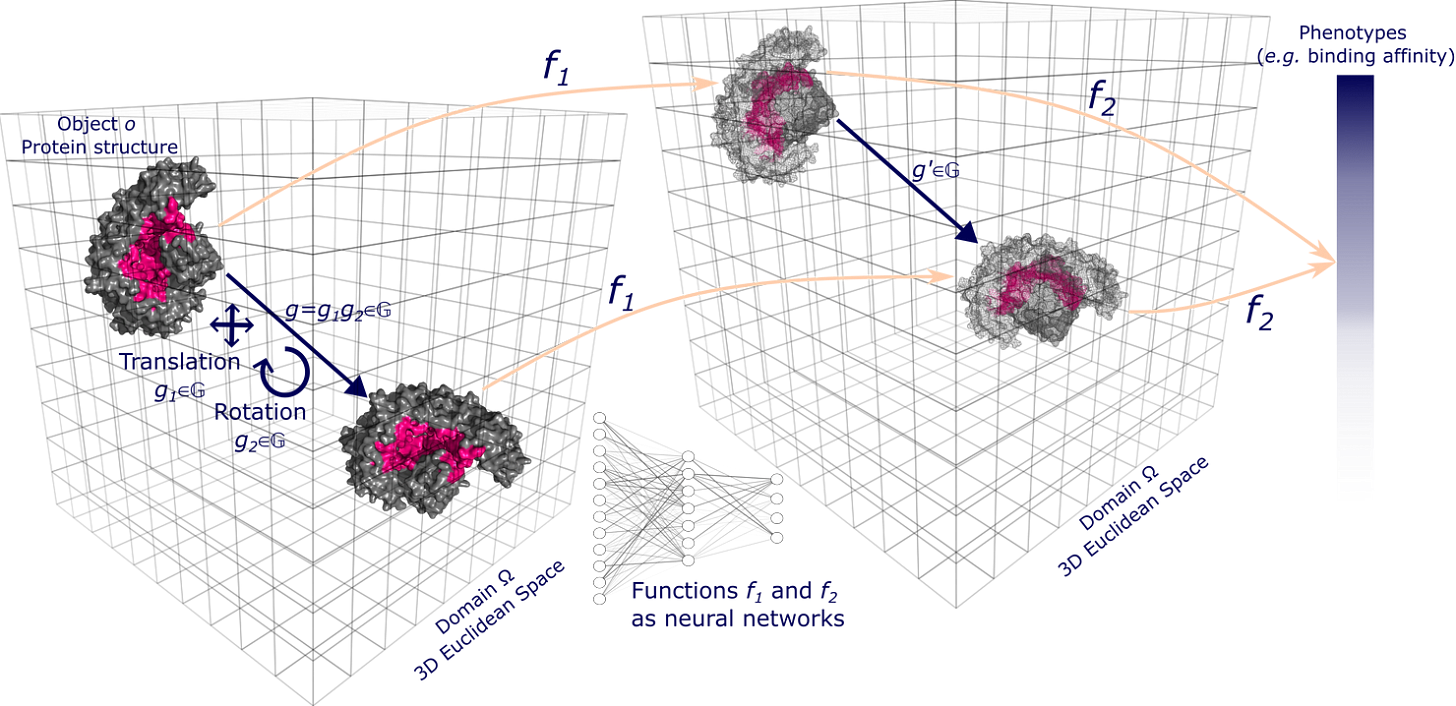

Understanding how to preserve rotational equivariance is the primary challenge of understanding geometric GNNs. Conventional deep learning models generate predictions based on learned features on a fixed reference frame but fail to detect those same features after transformations in space.

Rotational symmetries are rooted in all physical systems, especially on the molecular scale. Thus, constructing models that understand how interactions between nodes change under rotation is critical for tasks involving biomolecular systems.

Invariance and Equivariance

Invariance and equivariance are the cornerstones of geometric GNNs because they describe how convolutions or filters must be constructed to recognize patterns and generate predictions on a global reference frame where the graph can appear in any location or orientation in space but encode data that is the same or differing by a predictable transformation.

When a function produces the same output for a given input regardless of its orientation or position in space, it preserves invariance. A feature of a physical system can also be called invariant if it does not change with permutations or rotations (e.g., atomic number, bond type, number of protons). Only functions that preserve invariance should be applied to invariant features.

For instance, the potential energy of an isolated molecule in a vacuum is constant no matter its orientation or position in space; therefore, a function that calculates the potential energy given a molecule input should produce the same value no matter its position or orientation; in other words, it should preserve invariance.

When a function produces a predictable transformation of the original output as a direct consequence of a transformation on the input (i.e. translation or rotation), it preserves equivariance.

A node feature is equivariant if it transforms predictably under transformations in the node’s position, and similarly, an edge feature is equivariant if it transforms predictably under transformations in either node it connects. Features on the node level of a geometric graph are often equivariant as they change with changes to the relative position and orientation of nodes, whereas system-level properties across the entire system are generally invariant. Only functions that preserve equivariance should be applied to equivariant features.

All chemical features represented by vectors (e.g. position, velocity, external forces on individual atoms) are equivariant, as they should transform with transformations in the input. In a molecule, changing the position of a negatively charged atom changes the direction of the attractive force between it and nearby charged atoms.

Preserving equivariance is crucial for modeling molecular systems, as their behavior is governed by conserved quantities of quantum mechanics, like angular momentum and energy, that follow strict sets of physical laws with inherent rotational symmetries.

Transformation equivariant models ensure that three-dimensional spatial transformations (i.e. translations in x, y, and z directions and rotations around any axis) of the input graph structure and features result in predictable transformations in the model’s output using functions (called kernels) that learn patterns across positional and feature data and applies them equivariantly across the entire graph. These functions are applied across the graph and are constructed to handle location and orientation-invariant or equivariant features without needing to be trained on rotated or translated data.

Similar to how a convolutional neural network (CNN) applies position-invariant filters to detect two-dimensional motifs regardless of their position in the input (i.e. image, heatmap), equivariant GNNs apply the same transformation-equivariant filters to detect motifs regardless of translations and rotations in 3D space.

Group Representations and Transformation Operators

A group in mathematics is defined as a set, denoted as G, of abstract actions (e.g. rotations and translations) and a binary operation ab for all a, b ∈ G that operates on the elements in the set such that the following conditions hold:

Closure — for all a, b ∈ G, the output of the binary operator is also in the set, ab ∈ G.

Associativity — for all a, b, c ∈ G, the following equation holds: (ab)c = a(bc)

Identity Element — every group has an identity element e that returns the element unchanged when applied to any element in the set with the binary operator. In other words, for all a ∈ G, ea = ae = a.

Inverse Element — for all a ∈ G, there exists an inverse of a (denoted as a⁻¹) in G such that aa⁻¹ = a⁻¹a = e (identity element). Note that a is also the inverse of a⁻¹.

A group representation converts the abstract elements of a group into a set of N x N invertible square matrices, denoted as GL(N). A group representation is generated via a group homomorphism ρ: G → GL(N) that takes a group as input and outputs a set of N x N matrices corresponding to each group element while preserving the function of the binary operator:

These representations can also be interpreted as injective transformation operators that act on N-dimensional vectors in a specific subspace, mapping the vector from one point in the subspace to another point in the same subspace. With the idea of group representations as transformation operators, we can define invariant and equivariant functions.

A function W that acts on a vector f is invariant under a group G if the output is the same before and after the action g in G is applied to the input, for all actions in the group.

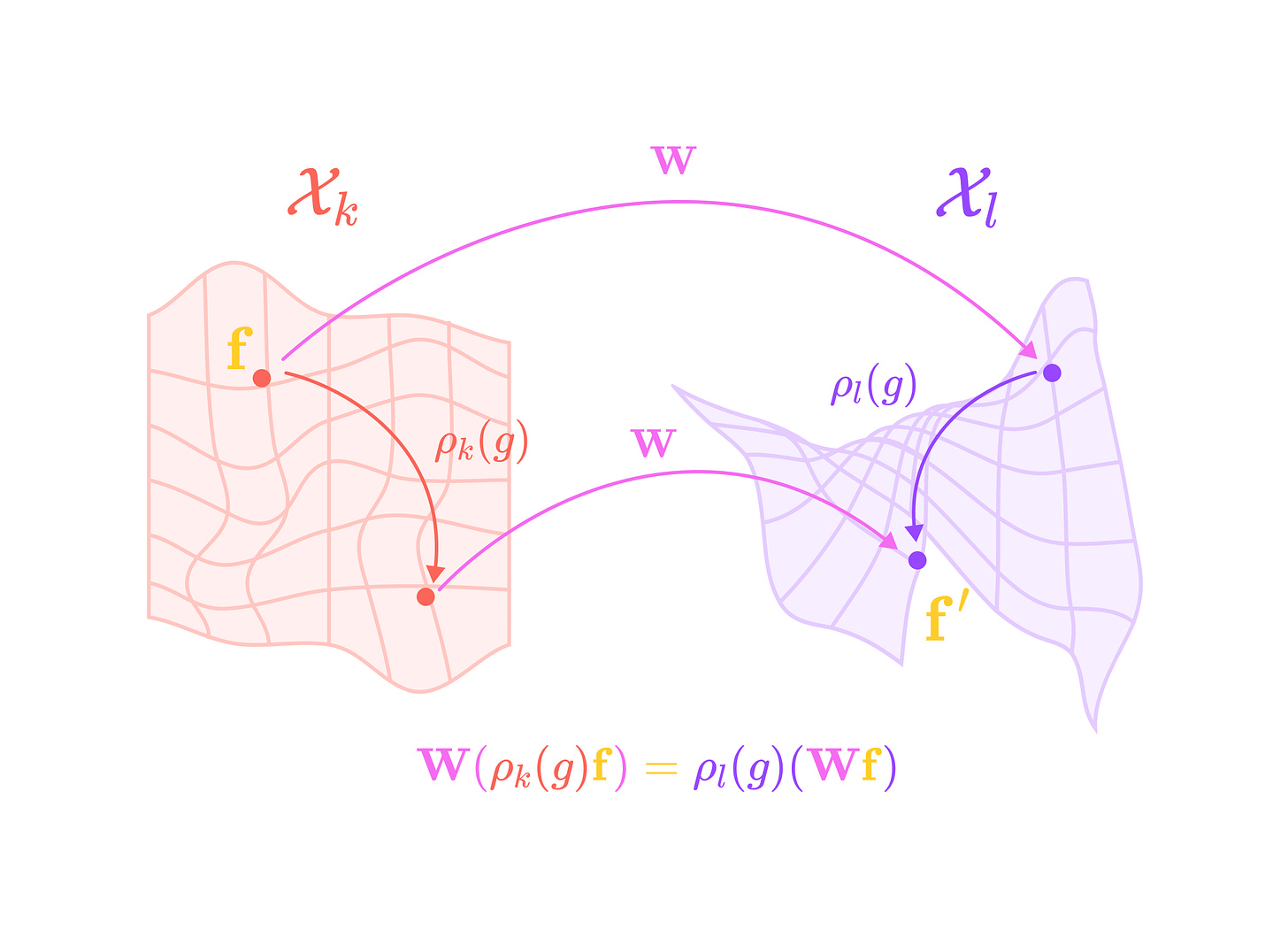

A function is equivariant under group G if the output of the function also undergoes the same action g when the input is transformed by g.

ρ_k and ρ_l are group representations of G, where ρ_k acts in the same subspace Xk as the vector f and ρ_l acts in the same subspace Xl as the output of the function W.

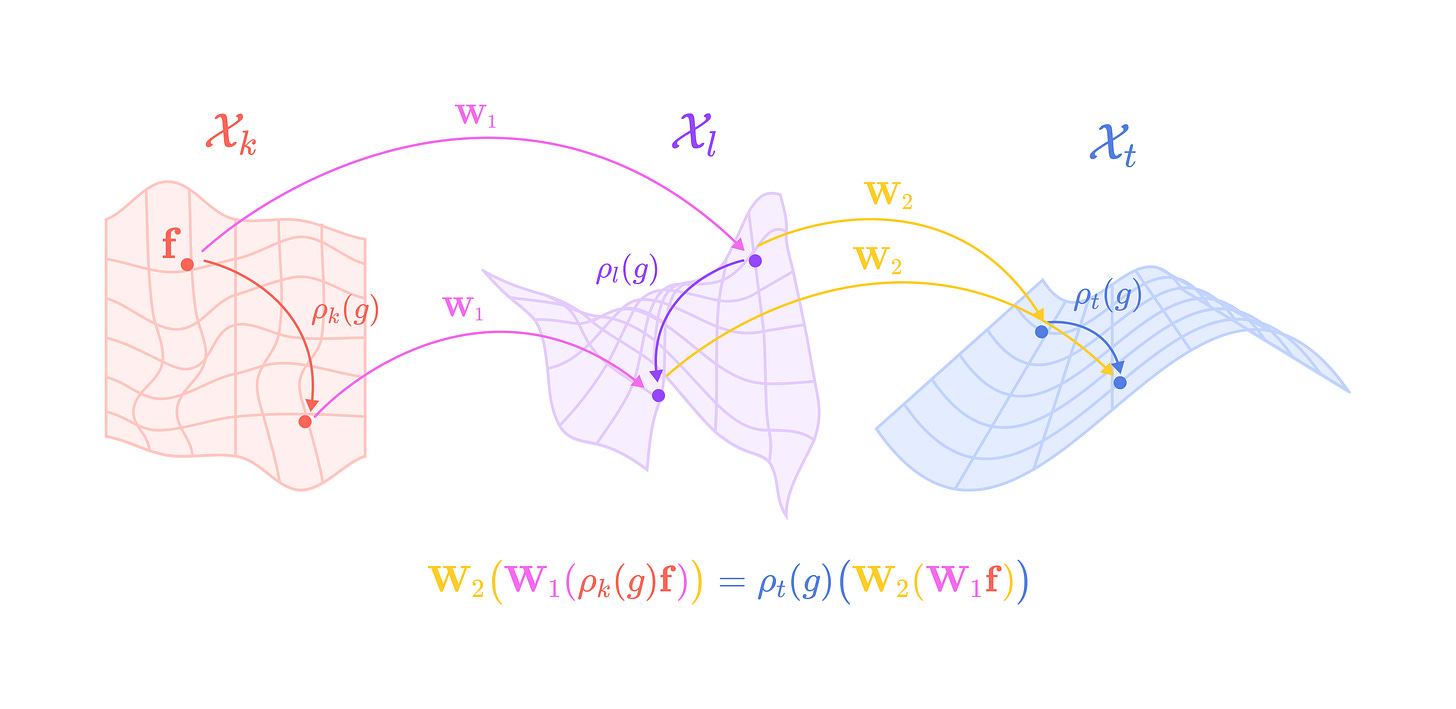

Applying two or more equivariant functions subsequently (or composing the functions) still satisfies the equivariance condition:

W2 is a second equivariant function applied after W1 that transforms the input from the subspace Xl to the subspace Xt.

This property allows us to compose as many equivariant functions as we want without worrying about breaking equivariance.

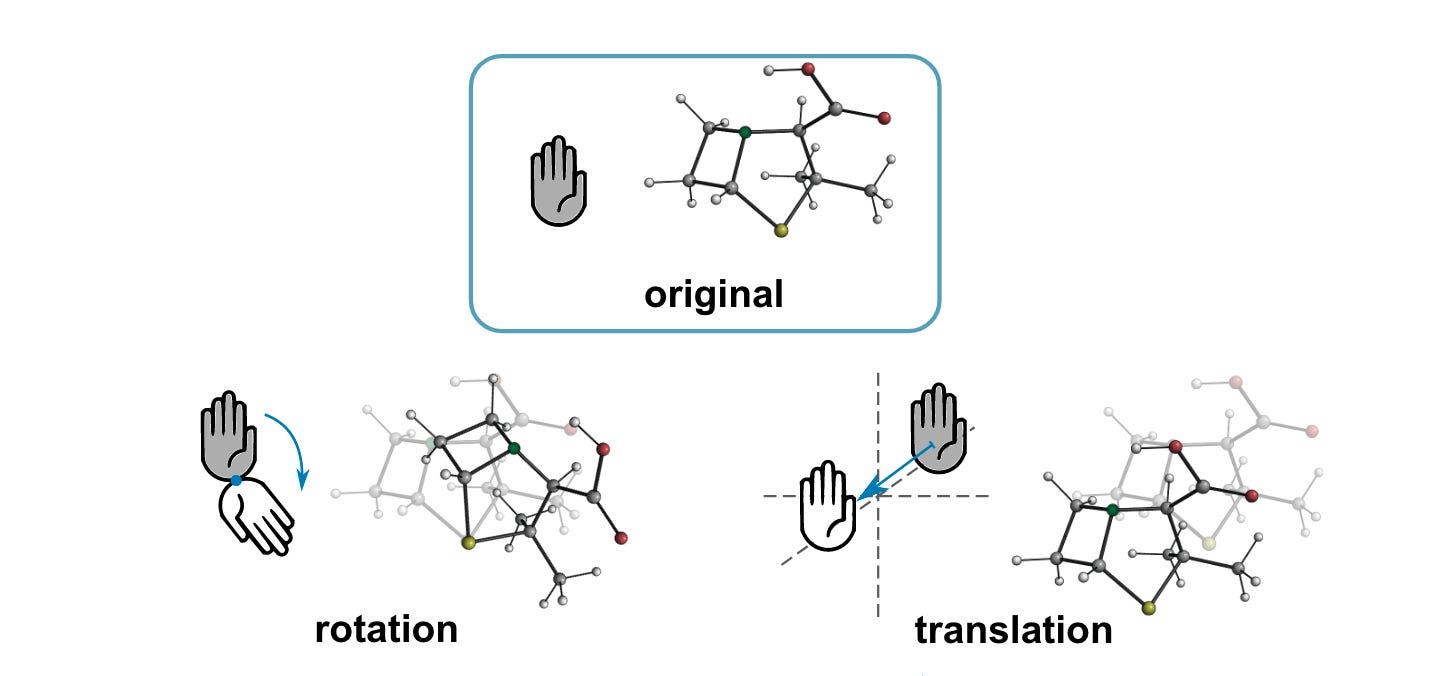

Functions in geometric GNNs should satisfy three types of equivariance in 3D: permutation equivariance, translation equivariance, and rotation equivariance. Permutation equivariance states that permuting the indices of nodes should permute the output or produce the same output (permutation invariance). In most GNNs, nodes are treated as sets of objects rather than an ordered list, so these models are inherently permutation invariant.

Since geometric graphs represent isolated systems defined by relative displacement vectors and not absolute spatial information, geometric GNNs are by default translation invariant, meaning shifting the position of all nodes in the graph by a displacement vector does not change the output. Unfortunately, satisfying rotational equivariance in 3D is a lot more challenging, making it the focus of advancements in equivariant models.

Spherical Tensors

The Special Euclidean Group in 3D, known as the SE(3) group, is the set of all rigid 3D transformations, including rotations and translations. We will be focusing on the subset of SE(3) called the Special Orthogonal Group in 3D, known as the SO(3) group, which is the set of all 3D rotations.

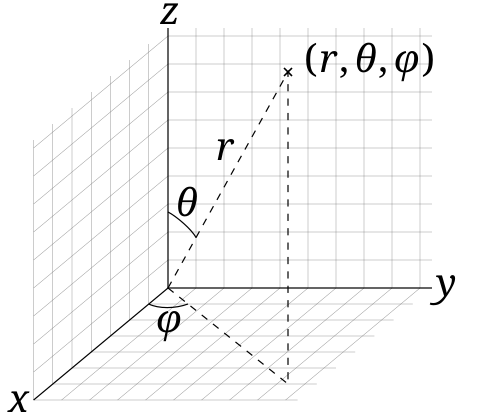

A representation of SO(3) is a set of invertible N x N square matrices that assign a specific matrix to every possible 3D rotation defined by the three Euler angles alpha α, beta β, and gamma γ. These angles define the rotation angles about the x, y, and z-axes, respectively.

These matrices or orthogonal and have a determinant of 1, meaning they preserve length and relative angles between vectors.

Since higher-dimensional tensors require more complex representations, we need a way of decomposing complex representations into smaller building blocks that can be used to rotate across tensors of increasing dimensions. These building blocks are called irreducible representations (irreps) of SO(3), which is a subset of rotation matrices that can be used to construct larger rotation matrices that operate on higher-dimensional tensors.

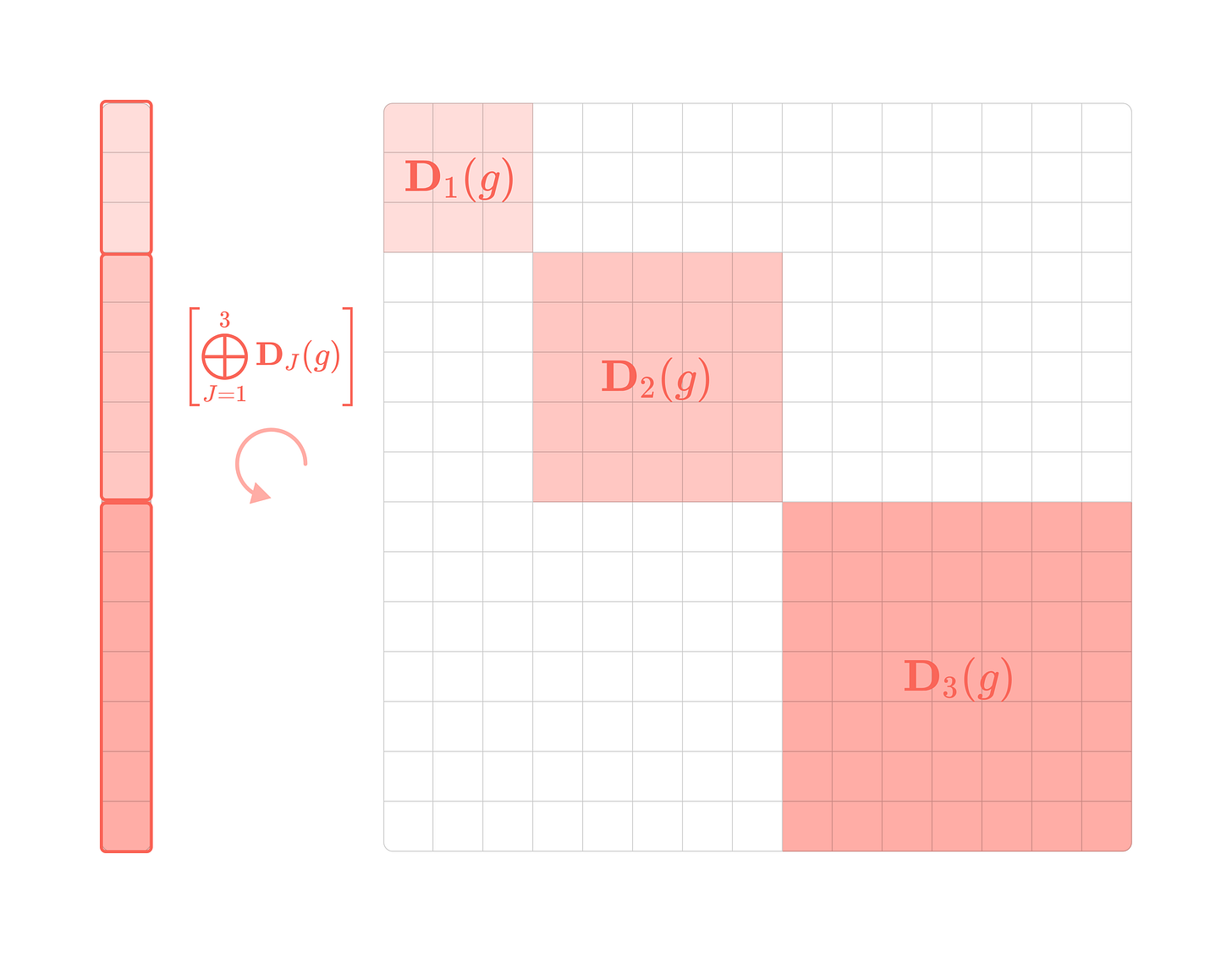

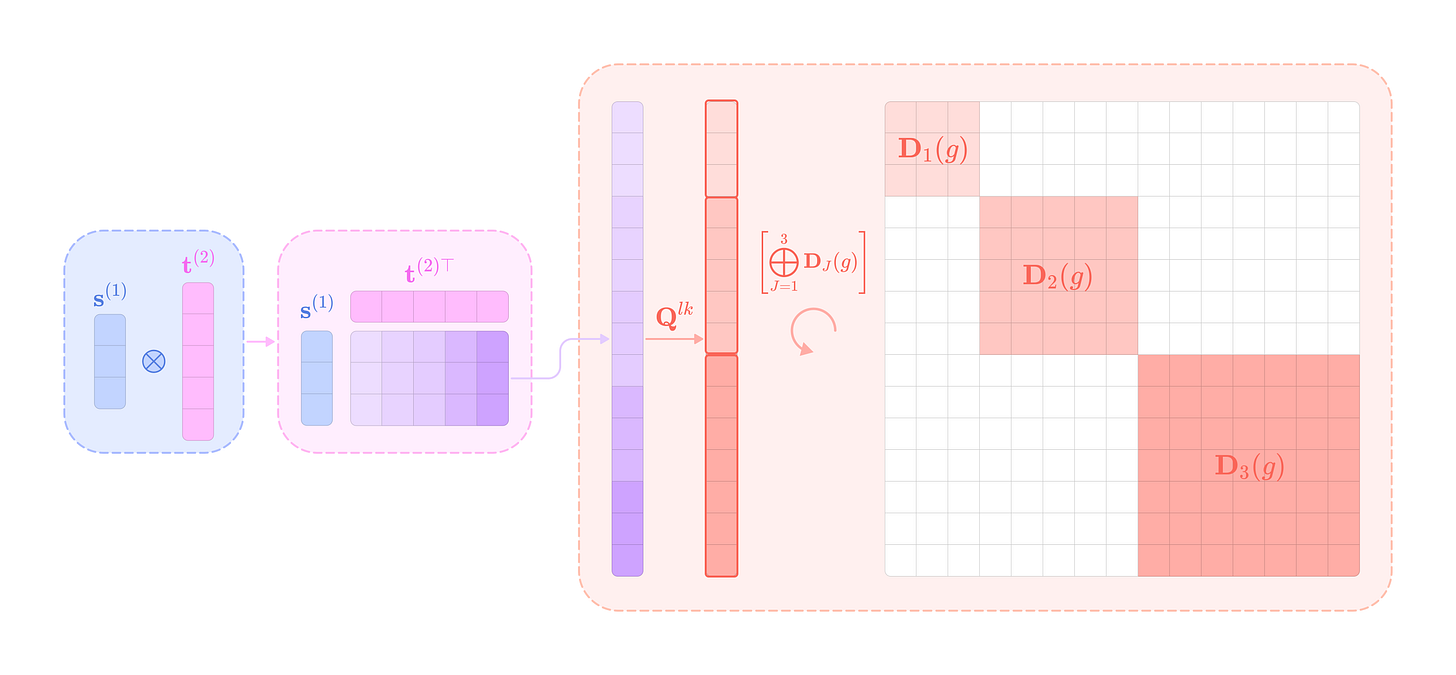

All group representations can be decomposed into the direct sum ⊕ (concatenation of matrices along the diagonal) of irreps. This block diagonal matrix can then be used to transform a higher-dimensional tensor after first applying an N x N change of basis matrix Q. Thus, we can write all representations of SO(3) in the following form:

In the equation above, Q is decomposes the input tensor into a direct sum of type-J spherical tensors aligned with each block in the block-diagonal Wigner-D matrix, and the transpose of Q converts the rotated spherical tensors back into their original basis.

There is a special subset of tensors called spherical tensors that transform directly under the irreps of SO(3) without the need for a change in basis.

Spherical tensors are considered irreducible types because all Cartesian tensors can be decomposed into their spherical tensor components, but spherical tensors cannot be decomposed further. Spherical tensors have degrees numbered by non-negative integers l that we call the tensor type. Type-l tensors are (2l + 1)-dimensional vectors that transform under a corresponding set of type-l irreducible representations of SO(3). We will describe both of these ideas more explicitly in the upcoming sections.

In quantum physics, spherical tensors are used to represent the orbital angular momentum of quantum particles like electrons. The degree of spherical tensors corresponds to the angular momentum quantum number (conventionally denoted with the letter l) that indicates the magnitude of angular momentum and the dimensions correspond to the (2l + 1) possible magnetic quantum numbers, which have integer values ranging from -l to l (denoted with the letter m) and is equal to the projected angular momentum on the z-axis relative to an external magnetic field.

Since angular momentum can be represented as a vector in 3D space, the value of m must be between -l (directly opposing the magnetic field) and l (perfectly aligned with the magnetic field), and since angular momentum is quantized, m must be an integer. In physics, |l,𝑚⟩ is used to denote a specific dimension m of a type-l spherical tensor which represents an eigenstate of a quantum particle, where the angular momentum is considered to be well-defined (more on this later).

Since the concepts of SO(3)-equivariance are deeply intertwined with quantum physics, specifically the coupling of angular momentum in quantum systems, I will continue to make connections to quantum mechanics throughout the article in these quotation blocks.

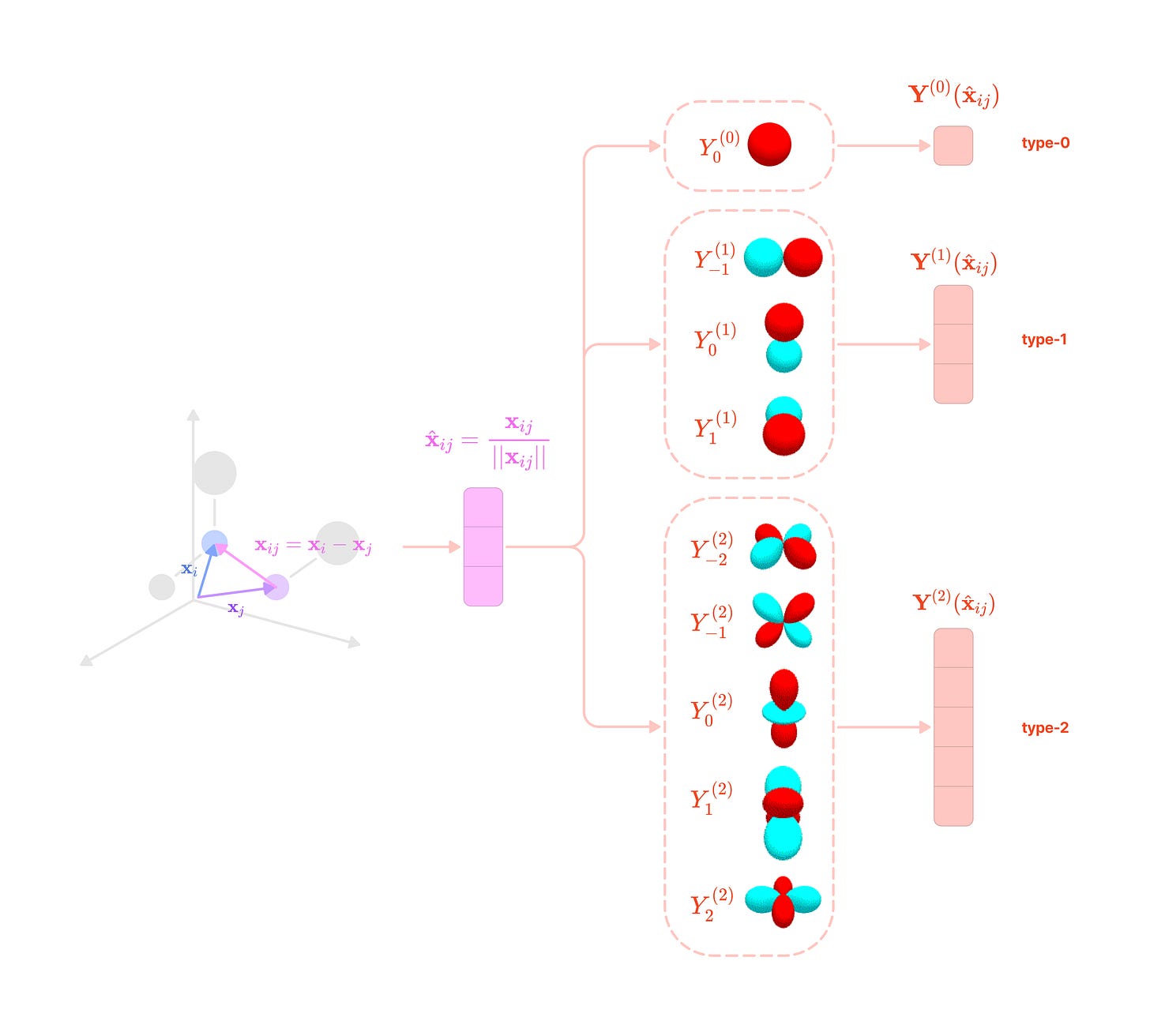

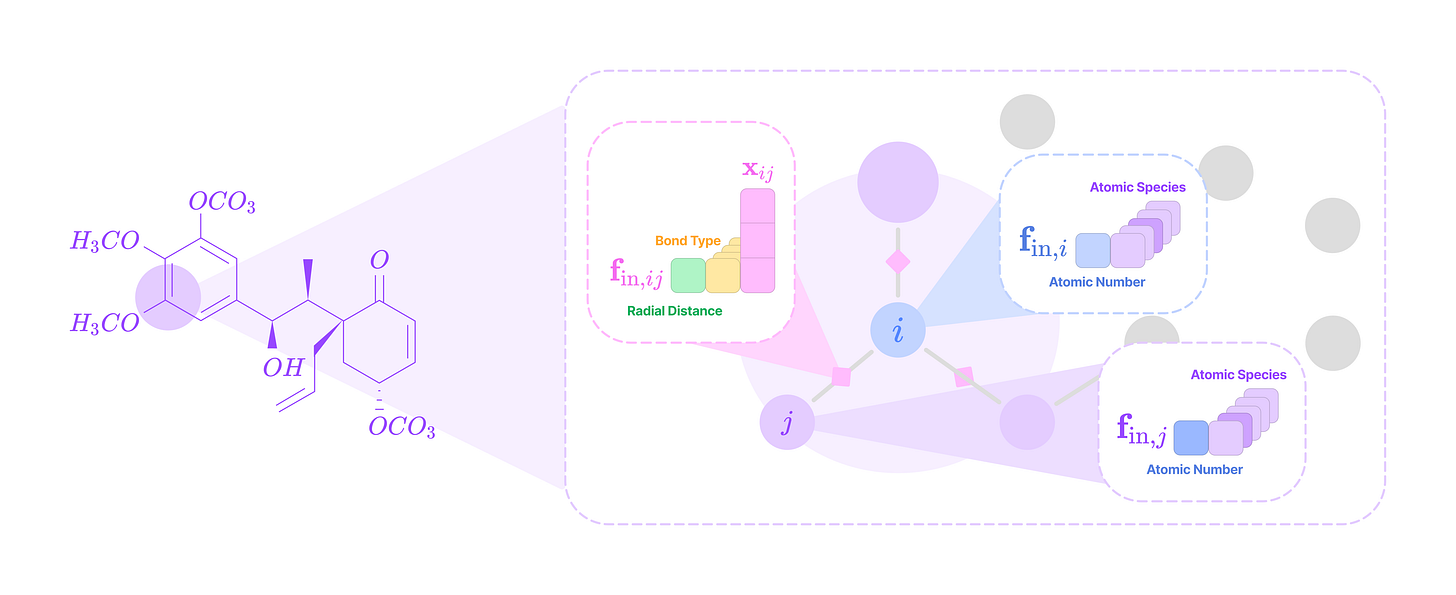

From Point Cloud to Geometric Graph

A point cloud is a finite set of 3D coordinates (or 3-dimensional vectors) where every point has a corresponding feature vector. Nodes can represent atoms in molecules, residues (C-alpha atoms) in proteins, or any unit in a system that carries information about itself in the form of feature tensors.

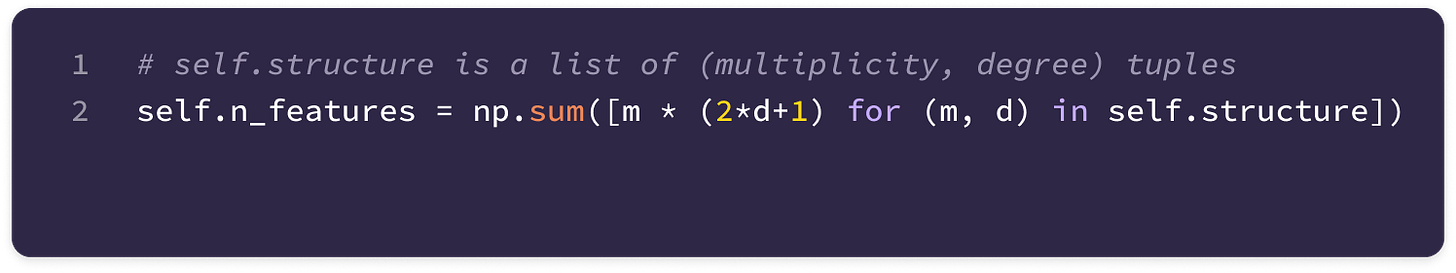

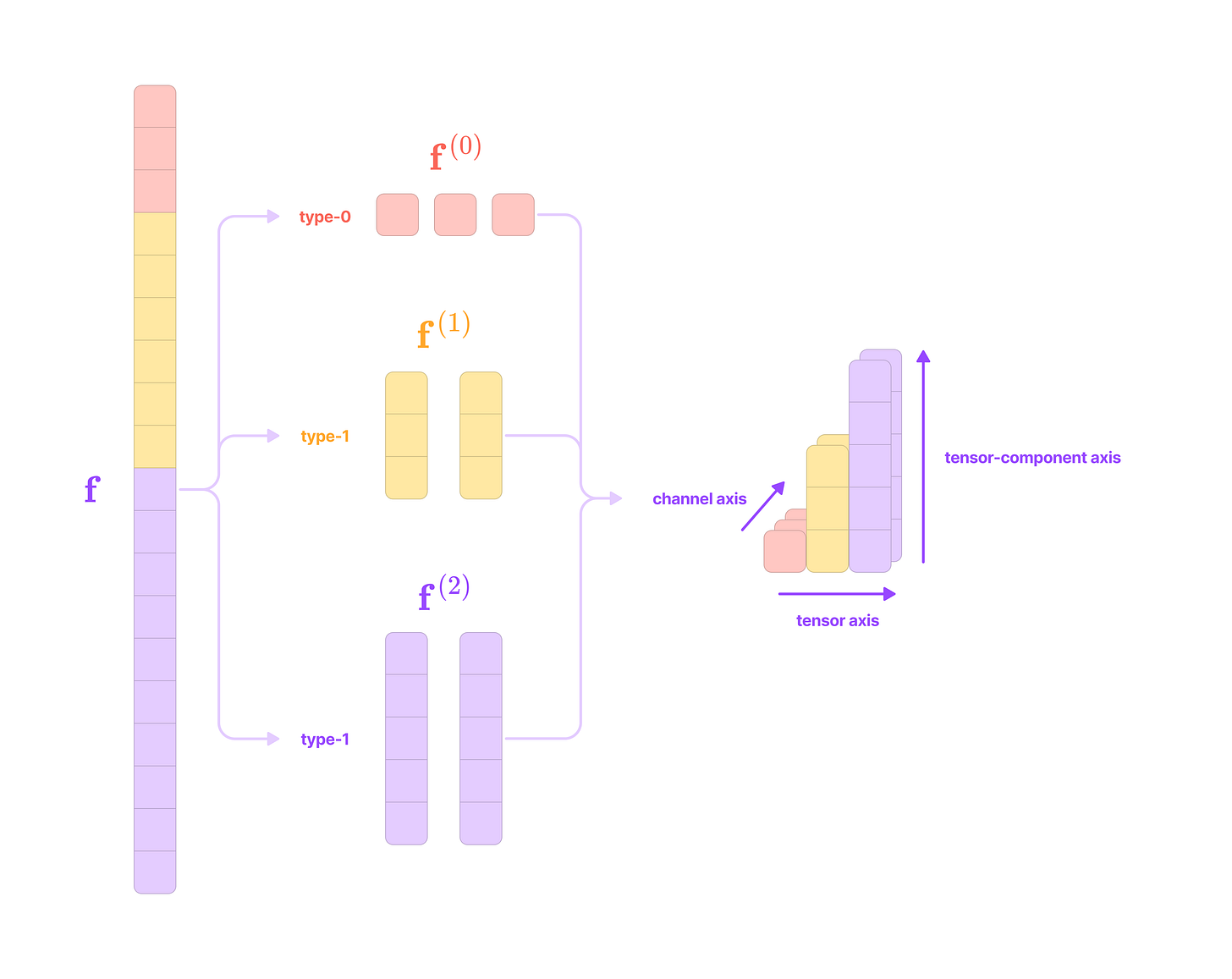

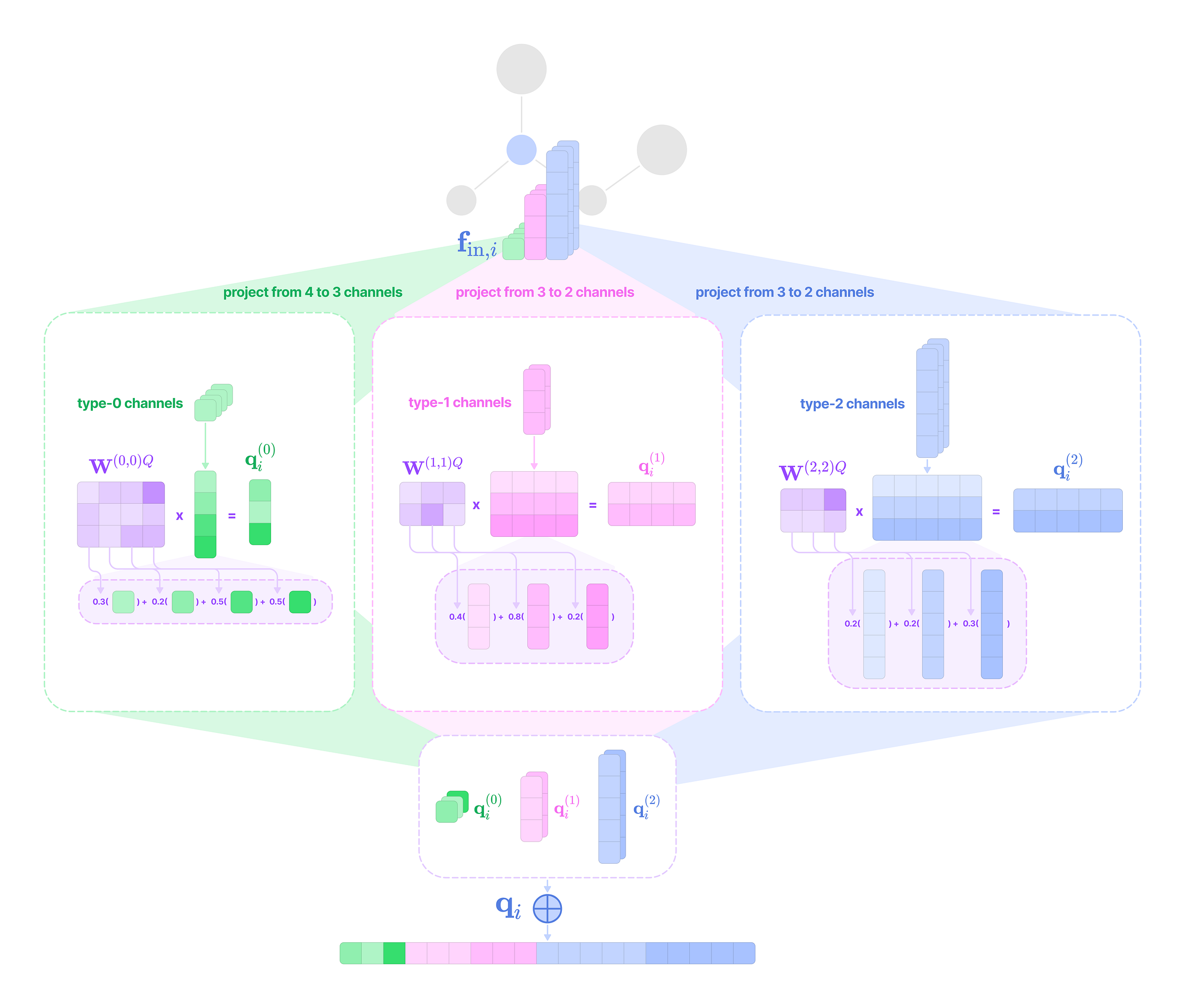

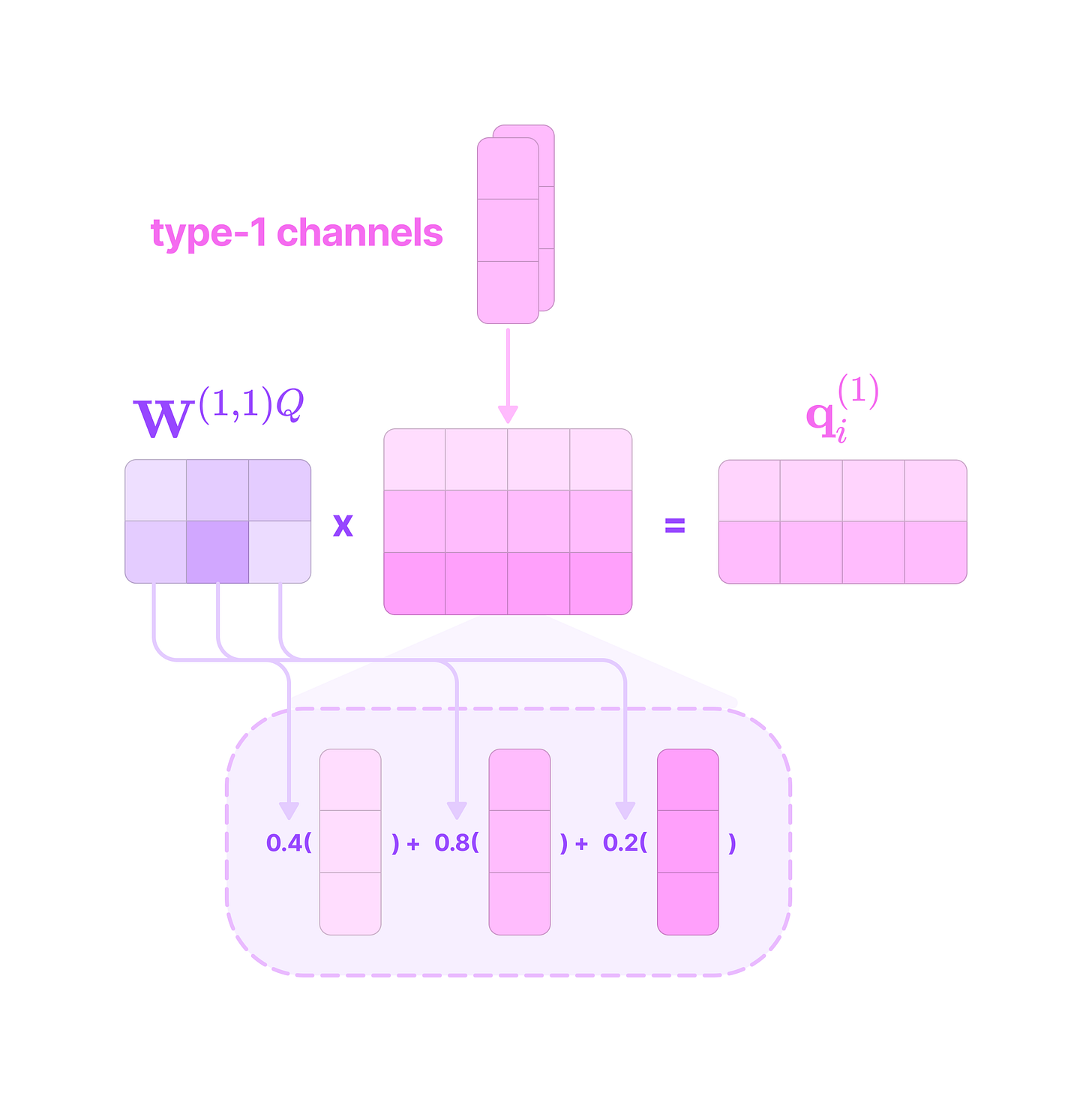

The feature vector f corresponding to each node in the point cloud contains data on its properties (e.g., atomic number, charge, hydrophobicity, etc.). The feature vector can be arranged into a tensor list, a multi-dimensional list of spherical tensors with three axes in the following order: a tensor axis, a channel axis, and a tensor-component axis.

The channel axis represents the number of features of each type at the node. If a node contains three type-2 features, that feature has 3 channels.

The tensor axis represents the number of different types of spherical tensor features at the node. If a node has type-0, type-1, and type-2 features, it has a tensor axis dimension of 3.

The tensor-component axis represents the dimensions of each type of spherical tensor. For the type-k spherical tensors at the node, the tensor-component axis has dimension 2k + 1. Type-0 tensors are 1-dimensional scalars, type-1 tensors are 3-dimensional vectors, type-2 tensors are 5-dimensional vectors, and type-k tensors are (2k+1)-dimensional vectors.

From the point cloud, we want to generate a directional graph that contains directional edges between nodes that point in the direction of message-passing. An edge in the point cloud is defined by a displacement vector that points from node j (source node or neighborhood node) to node i (destination node or center node).

This can be decomposed into the radial distance (scalar distance between nodes) and the angular unit vector (vector with length 1 in the direction of the displacement vector). In the upcoming sections, we will see how both components are incorporated in constructing the equivariant kernel for message-passing.

Note that in most geometric graphs, edges are bidirectional, meaning there is an edge from node i to node j and an edge from node i to node j. The displacement vector of bidirectional edges has the same radial distance and angular unit vectors pointing in opposite directions.

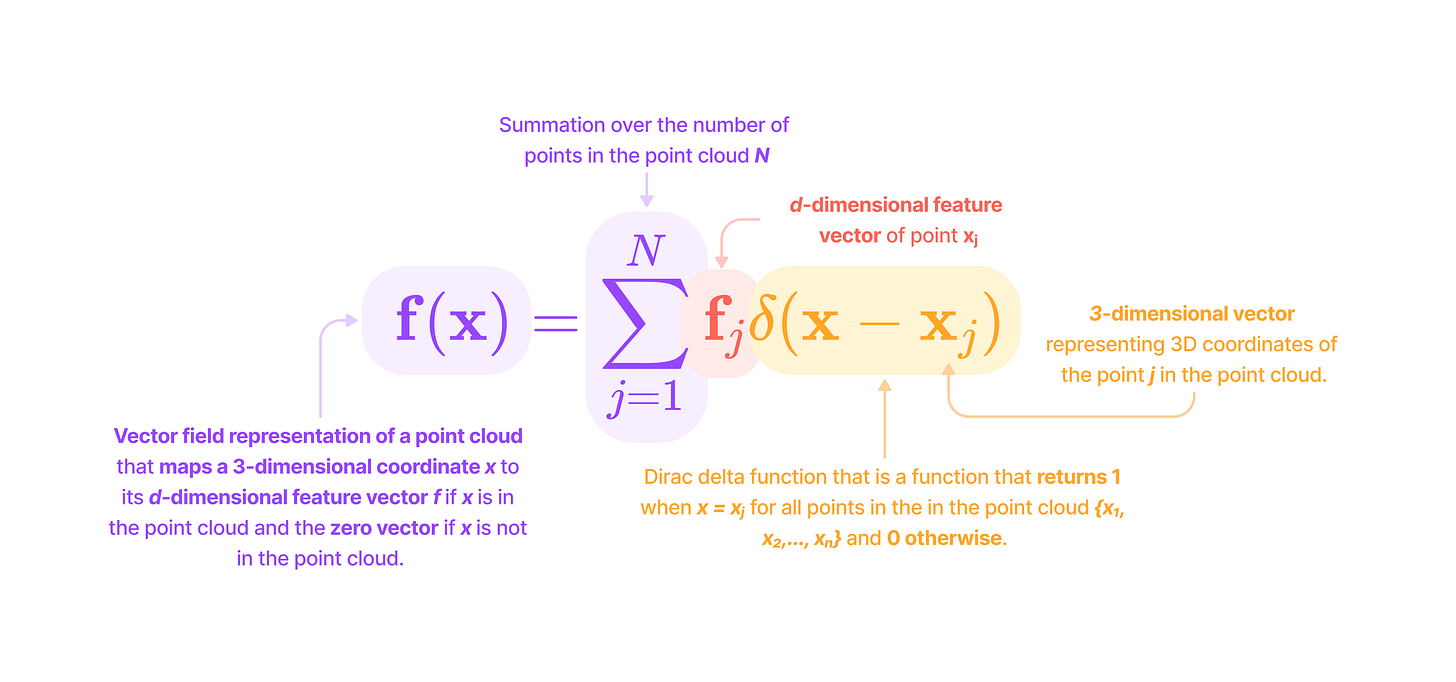

Another way of representing a point cloud is as a continuous function f that takes in a 3-dimensional vector representing a point in 3D space (x) and outputs a feature vector (f) if x is in the point cloud (x = xj) or the zero vector if x is not in the point cloud.

Since point clouds are defined by the relative spatial displacements between nodes in a global (non-fixed) reference frame, they often represent objects with rotational symmetries that must be handled equivariantly. This means that rotating the entire point cloud should generate rotated system-level outputs, and rotating individual nodes should generate rotated node-level updates.

Representing graphs as continuous functions facilitates continuous convolutions that are applied to every point in space, also known as point convolutions. However, for the sake of clarity, we will be considering graphs as a finite set of points on which the convolutions (which we will refer to as kernels) are applied. We do this by using summation notation, which will become clear in later sections.

Wigner-D Matrices

An irreducible representation (or irrep) of SO(3) defines how a type-l spherical tensor transforms under 3D rotation. The type-l irrep is a set of (2l + 1) x (2l + 1) matrices called Wigner-D matrices that rotate a type-l spherical tensor by an element g ∈ SO(3). For a specific 3D rotation g ∈ SO(3), the Wigner-D matrices for type-l tensors can be denoted as:

Type-0 tensors are scalars and remain unchanged under 3D rotation. The Wigner-D matrix for a type-0 vector is a 1 x 1 matrix with a single entry of 1.

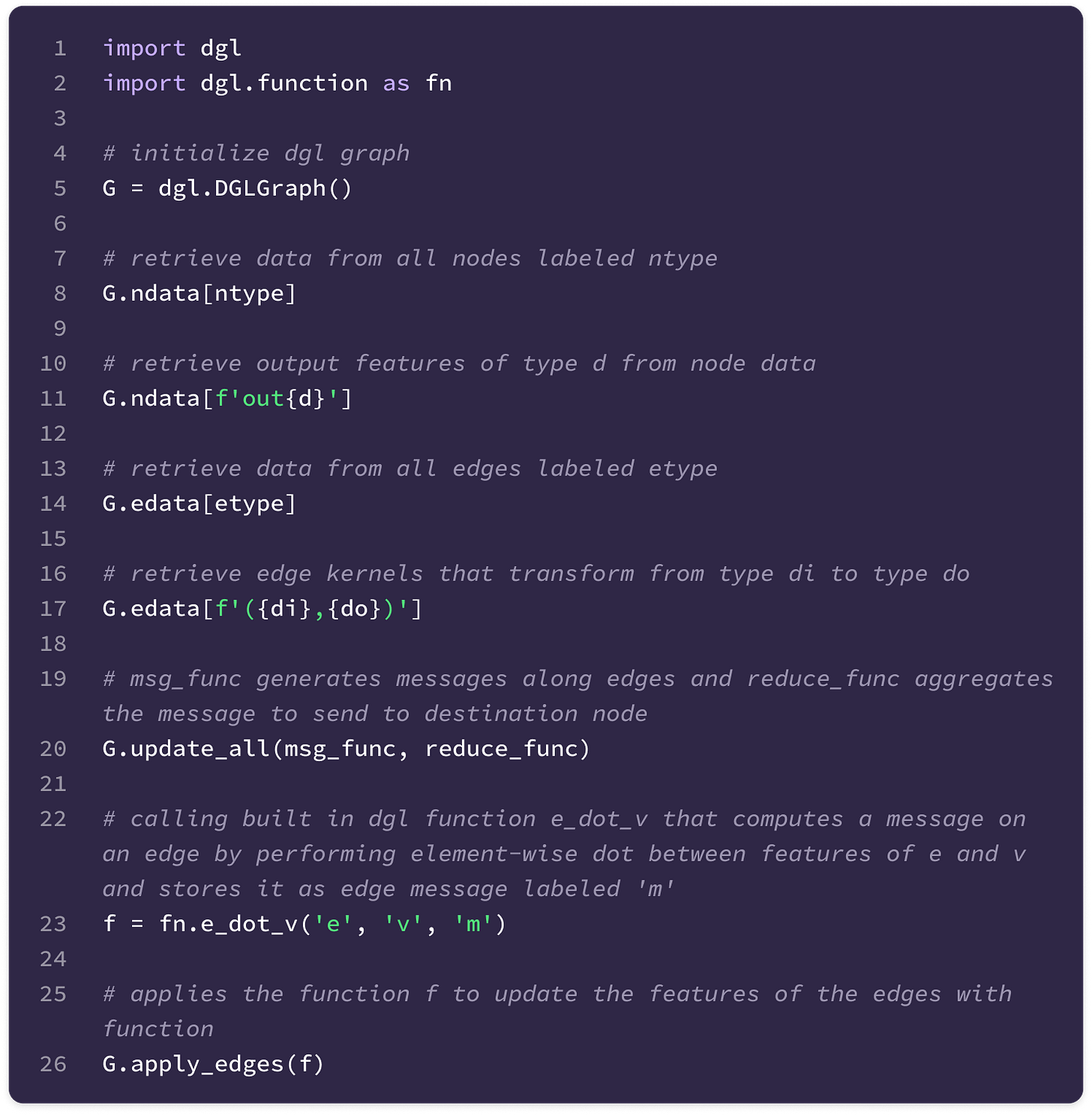

\(\mathbf{D}_0(g)=[1]\)Type-1 tensors are 3-dimensional vectors and simply transform by the standard 3 x 3 rotation matrices under 3D rotation. All rotation matrices R, and thus Wigner-D matrices for type-1 tensors, can be constructed by multiplying the basic rotation matrices that rotate by an angle α about the x-axis (Rx), β about the y-axis (Ry), and γ about the z-axis (Rz).

Higher-order tensors (l > 2) transform by the corresponding (2l + 1) x (2l + 1) type-l Wigner-D matrix that we denote with a subscript l.

Now, we can define the equivariance condition using Wigner-D matrices. A function (or kernel), which we will denote as W, that transforms a type-k spherical tensor to a type-l spherical tensor is equivariant if it satisfies the following equivariance condition:

Since the feature vector is a stack of spherical tensors, it can be transformed via a matrix composed of concatenated Wigner-D matrices along the diagonal.

In the later section on tensor products, we will learn how to decompose any Cartesian tensor into its spherical tensor components, enabling them to transform directly and equivariantly under the Wigner-D matrices.

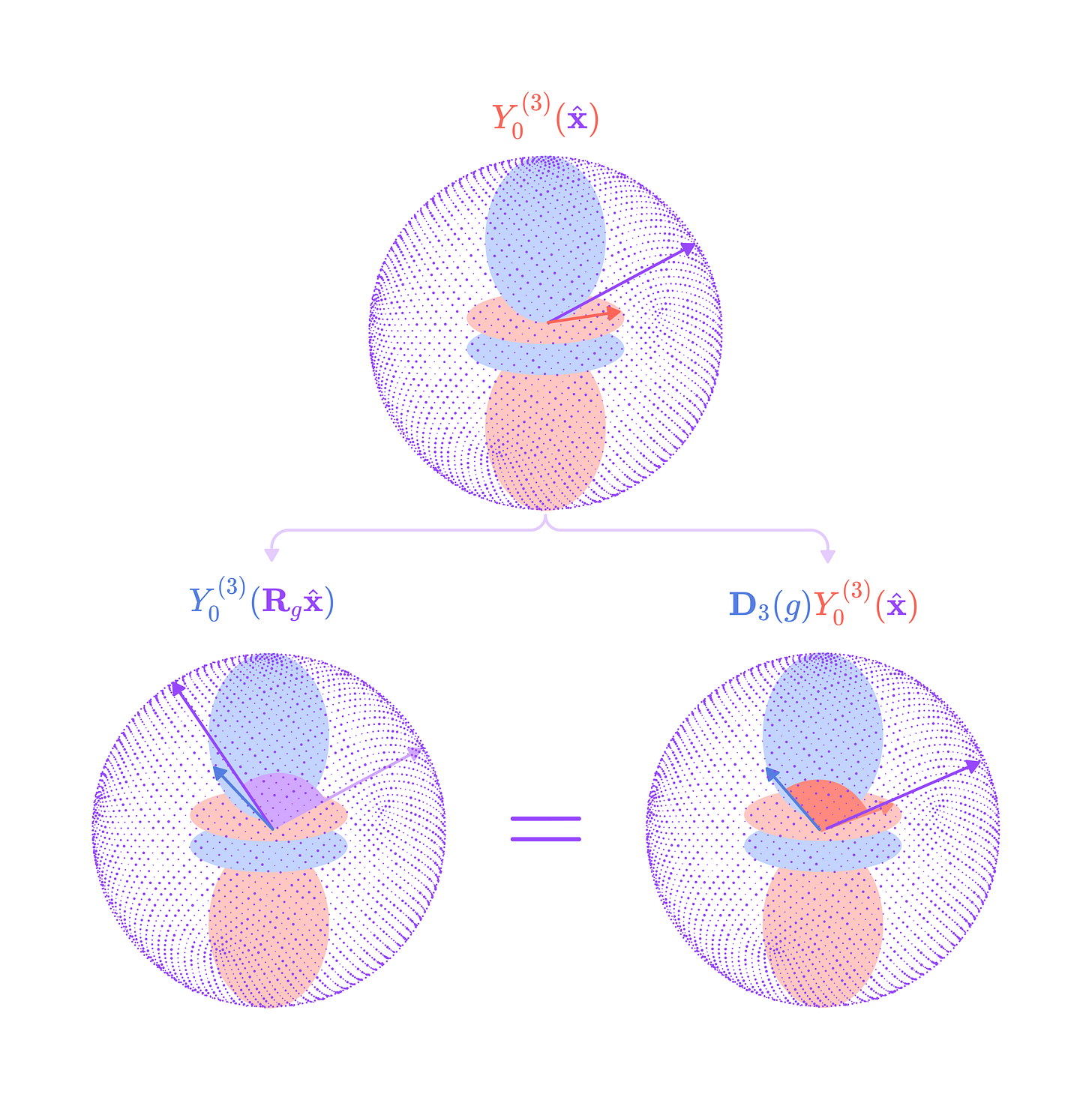

Spherical Harmonics

Spherical harmonics represent a complete and orthonormal basis for rotations in SO(3). They are functions that project 3-dimensional vectors into spherical tensors that transform equivariantly and directly under Wigner-D matrices, without requiring a change in basis. A spherical harmonic evaluated on a rotated 3-dimensional unit vector is equal to evaluating the spherical harmonic on the unrotated vector and transforming the output by an irrep of SO(3). Vectors of spherical harmonic functions are used to project the angular unit vector to spherical tensors, which are a fundamental building block of equivariant kernels.

The orthonormal basis functions with which all SO(3)-equivariant spherical tensors can be constructed are called spherical harmonics. We can also consider spherical harmonics as sets of functions that project 3-dimensional vectors onto orthogonal tensor subspaces that Wigner-D matrices operate in.

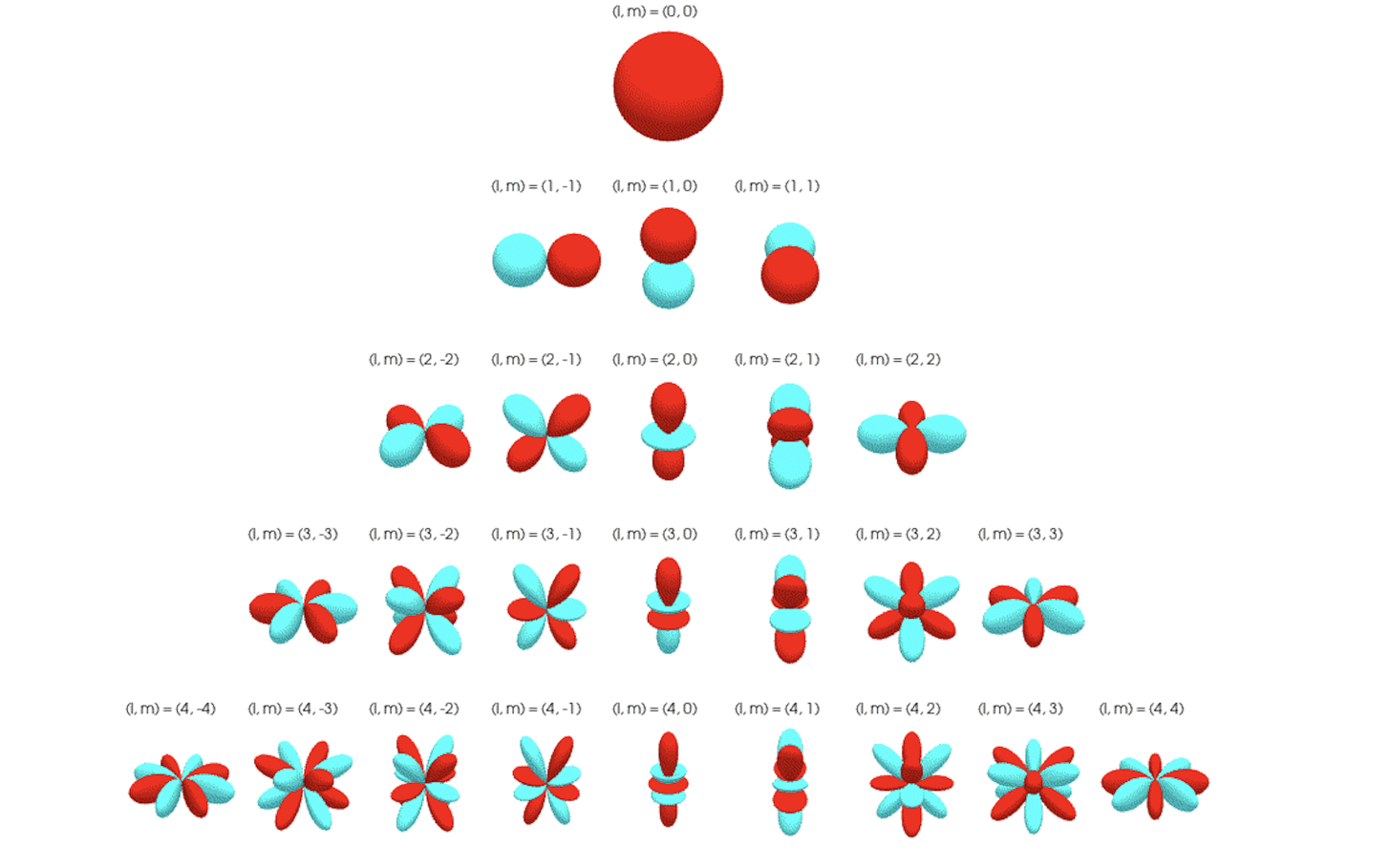

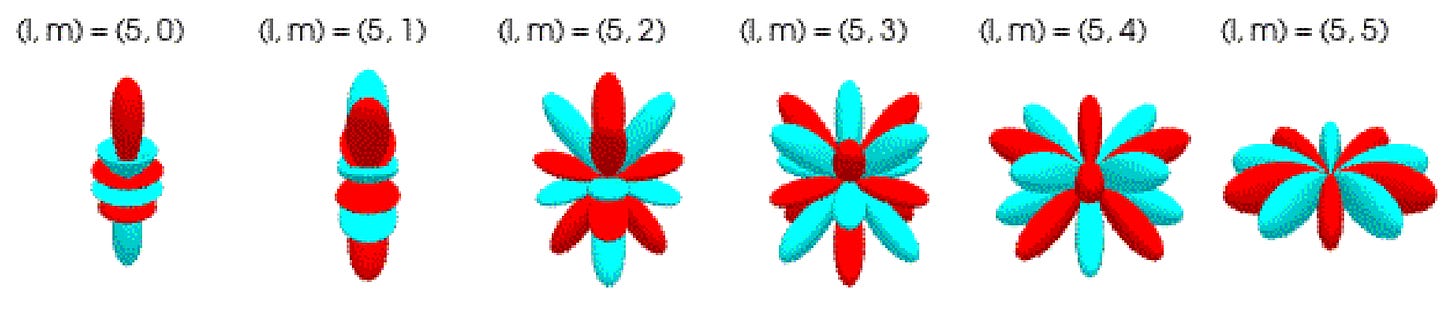

Each spherical harmonic function (indexed by its degree l and order m) takes a unit vector (length of 1) on the unit sphere (S²) and returns a real number.

For every type of spherical tensor l, there is a corresponding vector of 2l + 1 spherical harmonic functions indexed by m that transform points on the unit sphere into type-l spherical tensors that rotate directly under type-l Wigner-D matrices.

This spherical harmonic vector transforms a point on the unit sphere to a type-l spherical tensor:

The explicit expressions defining the real spherical harmonics1 (or tesseral spherical harmonics) can be written in terms of the angle from the z-axis (polar angle θ) and the angle from the x-axis of the orthogonal projection onto the xy-plane (azimuthal angle φ):

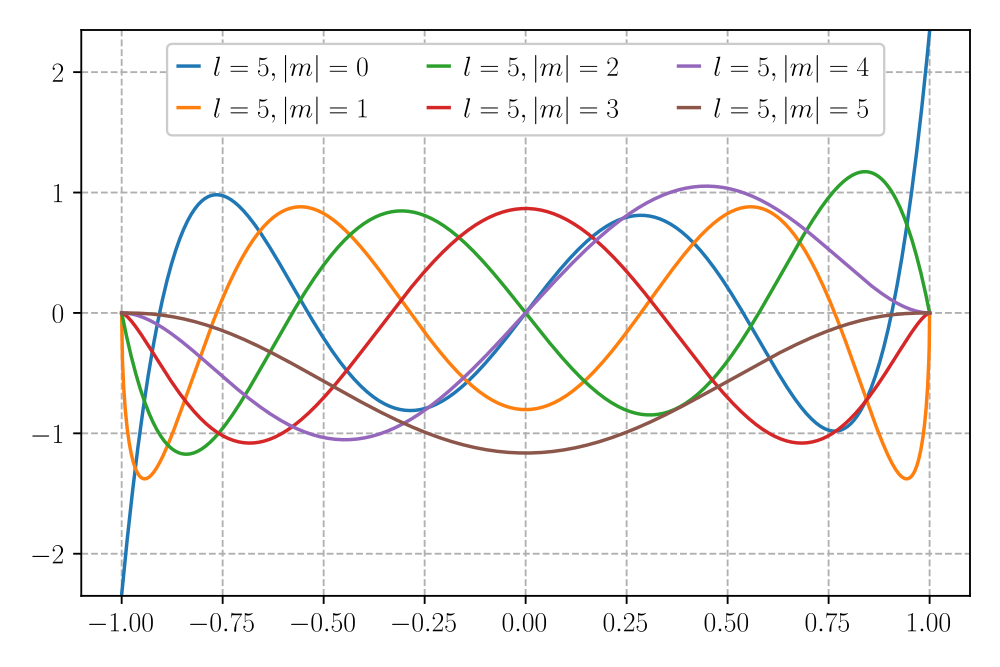

The function dependent on cos(θ) is the associated Legendre polynomial (ALP) with degree l and order m given by the following equation:

In the equation above, Pl denotes the Legendre polynomial with maximum degree l. Visually, you can think of the Legendre polynomial where x=cos(θ) as a wave on the unit sphere. The zeros of the polynomial between [-1, 1] are nodes on the spherical harmonic where the harmonic changes sign. The set of Legendre polynomials is mutually orthogonal over the interval [-1, 1], meaning that the inner product of any two polynomials in the set is 0.

The Legendre polynomials are also complete, such that all square-integrable2 functions on the interval [-1, 1] can be approximated as a linear combination of the Legendre polynomials, where the coefficients are independent of one another.

When x=cos(θ), the (1-x²) term becomes sin²θ, and we can rewrite the ALP as:

The associated Legendre polynomials (ALPs) are obtained by taking the mth derivative of the Legendre polynomials and scaling by a factor of (sinθ)ᵐ. Since the (sinθ)ᵐ factor is zero for θ = 0 (north pole) and θ = π (south pole) for all non-zero m, there is a node at the north and south poles. As the exponent m increases, the ALPs start to approach zero farther from the poles, and the peak between 0 and π becomes narrower, effectively decreasing the number of possible nodes between θ = 0 and π generated from the Legendre polynomial term. These properties are reflected in the graph below of all the ALPs of degree l=5 with non-negative orders of m.

The purpose of including the ALP in spherical harmonics as opposed to the unmodified Legendre polynomials is to allow dependence on the azimuthal angle (φ). Since the azimuthal angle is undefined at the poles, the (sinθ)ᵐ factor ensures that there is no contribution from the azimuthal term at the poles for all non-zero values of m.

When m = 0, the (sinθ)ᵐ factor is 1, and the polynomial is unbounded at the poles, and there is no azimuthal dependence in the spherical harmonic.

Including the sine or cosine function dependent on the azimuthal angle allows spherical harmonics to describe a larger range of functions on the sphere and orientations of the angular momentum vector in a magnetic field.

It is also worth noting that taking the lth derivative of a degree-l Legendre polynomial is a constant, and taking even higher order derivatives returns zero. This means that including the ALP term aligns with the condition that the magnitude of the angular momentum projected on the z-axis |m| must be less than the magnitude of the angular momentum vector |m| <= l.

Spherical harmonics inherit the properties of the Legendre polynomials and ALPs applied to the unit sphere, forming a complete and orthogonal basis for spherical tensors with the following properties:

The completeness of spherical harmonics means that all Cartesian tensors can be represented as a set of orthogonal projections on the spherical tensor subspaces that rotate directly under Wigner-D matrices via a change of basis.

The orthogonality of spherical harmonics means that each type of spherical tensor transforms independently under rotation by the type-l Wigner-D matrix. This means that the direct sum (or vector concatenation) of spherical tensors rotates under a block-diagonal matrix with Wigner-D matrices along the diagonal and zeros everywhere else. The zero entries indicate that there are no cross dependencies between the tensors under rotation, a crucial property for our upcoming discussion on constructing equivariant layers.

\(\int_{S^2}Y^{(l)}_{m_l}(\theta,\phi)Y^{(k)}_{m_k}(\theta,\phi)d\Omega=\delta_{lk}\delta_{m_lm_k}\)The integral of the product of spherical harmonics over the unit sphere (inner product) is equal to the product of two Kronecker delta δ, which is equal to 1 if l = k or m_l = m_k, and zero otherwise. This means that the inner product of two real spherical harmonics is non-zero only when they share the same degree l and order m, and is zero for all distinct pairs of l and m.

To gain some physical intuition, let’s describe the role of spherical harmonics in quantum mechanics, where they are used to describe the angular component of electron wavefunctions.

Spherical harmonics are eigenfunctions of the orbital angular momentum operators L² and Lz that act on electron wavefunctions. When the squared total angular momentum operator (L²) is applied to a spherical harmonic, it returns the function scaled by the eigenvalue (scalar) ℏ²l(l+1) consisting of the total angular momentum number l, where ℏ is the Planck constant.

\(\hat{L}^2Y_m^{(l)}(\theta,\phi)=l(l+1)\hbar^2Y_m^{(l)}(\theta,\phi)\tag{$l=0,1,\dots$}\)In addition, when the z-component angular momentum operator (Lz) is applied to a spherical harmonic, it returns the function scaled by the eigenvalue mℏ containing the magnetic quantum number m.

\(\hat{L}_zY^{(l)}_m(\theta,\phi)=m\hbar Y^{(l)}_m(\theta,\phi)\tag{$m=-l,\dots,l$}\)These eigenvalues give the quantized values of orbital angular momentum, that is the angular momentum of an electron orbital cannot take any value but is limited to certain quantities defined by the integer values of l and m.

The orbitals with defined angular momentum |l,𝑚⟩ are called eigenstates, and they have wavefunctions that map every point in space to a probability amplitude. The square of the wavefunction describes the probability of an electron existing in a specific quantum state. Wavefunctions can describe the probability distribution of position, angular momentum, spin, or energy of an electron. The spherical harmonic corresponding to |l,𝑚⟩ is the angular component of the wavefunction of an isolated electron which describes how the probability densities vary with orientation around the origin.

This illustrates how spherical harmonics capture the foundational rotational symmetries of physical systems, which make them the perfect basis for constructing functions on the sphere that detect rotationally symmetric patterns in graph features. Watch this video to learn how to derive the first few spherical harmonics.

Critically, the spherical harmonics are equivariant functions, meaning that a rotation of the input 3-dimensional vector by the 3 x 3 rotation matrix R for g ∈ SO(3) is equivalent to rotating the spherical harmonic projection by the type-l Wigner-D matrix for g.

The type-J vectors of spherical harmonic functions with elements m = -J to J are used to project angular unit vectors of edges into higher-degree spherical tensors that can model different frequencies of rotationally symmetric features. These higher-degree spherical tensors form the basis set of SO(3)-equivariant kernels that can combine to capture complex rotationally symmetric chemical properties with high precision, which we will discuss in depth later in the article.

Like how the Fourier series decomposes periodic signals into sine and cosine components with specific periodic frequencies, spherical harmonics decompose rotationally symmetric, or SO(3)-equivariant, features on the unit sphere into components that change with specific angular frequencies. This decomposition is crucial to model how SO(3)-equivariant features vary across positions and orientations on the unit sphere.

The degree or type of a spherical harmonic determines its frequency or how rapidly it oscillates on the sphere. Lower-degree harmonics can model features with broader, smoother variations under rotations, while higher-degree harmonics capture features with sharper, finer variations under rotation.

Just as low-frequency sinusoids fail to accurately approximate high-frequency functions in Fourier analysis, low-degree spherical harmonics are not sensitive enough to handle chemical properties that vary dramatically with subtle changes in atomic orientation and position.

In the later section on computing the basis kernels, we will deconstruct how to precompute the spherical harmonic functions using recursive relations.

Now that we have defined the basis for spherical tensors, let’s discuss how to combine and convert between tensors of different types using the tensor product.

Tensor Product

The tensor product is a bilinear and equivariant operation that combines two spherical tensors to produce a higher-dimensional tensor. Since the output higher-dimensional tensors are generally not spherical tensors themselves, we must decompose them into their spherical tensor components using change-of-basis matrices formed with Clebsch-Gordan coefficients.

Suppose we want to exchange information between type-k and type-l features. Since they are different types, they are transformed differently under 3D rotation. How do we exchange information between these features without breaking equivariance?

This is where the tensor product comes in, which is denoted by ⊗.

The tensor product converts the type-k and type-l spherical tensors into a (2l+1) x (2k+1) matrix by calculating the product of every pair of dimensions indexed by m_k and m_l.

We can flatten this matrix into a (2l+1)(2k+1)-dimensional tensor. However, this higher-dimensional tensor is not spherical, and we must define the representation D(g) under which it rotates equivariantly. Since the tensor product is equivariant, it satisfies the equivariance condition, which states that rotating the tensor product by g is equivalent to rotating the individual spherical tensors by their respective Wigner-D matrices and then taking the tensor product. This translates into the following equation:

Using the tensor product identity below:

We can manipulate the above equation to isolate the Wigner-D matrices:

This means that the tensor product of a type-k and a type-l tensor rotates under the representation of SO(3) derived from the Kronecker product (⊗) of the type-k and type-l Wigner-D matrices:

The Kronecker product operation is analogous to the tensor product for matrices that produces a ‘matrix of matrices’ where each block of the outer matrix is an inner matrix derived from scaling the second matrix in the product by the corresponding element of the first matrix.

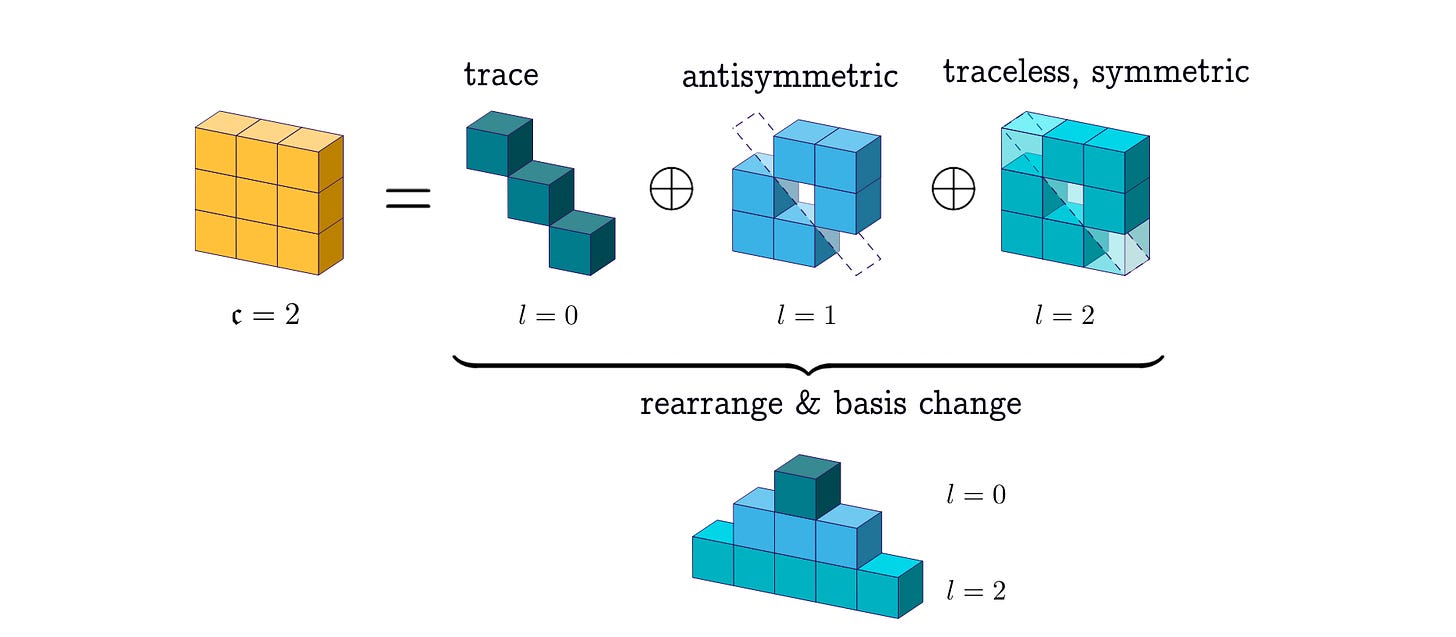

The Kronecker product of two SO(3) representations is another representation; but since every group representation can be written as the direct sum of irreps (where each block along the diagonal is an irrep and the remaining entries are zero) coupled with an orthogonal change-of-basis matrix and its transpose, we can decompose the Kronecker product of the type-k and type-l Wigner-D matrices into the direct sum of Wigner-D matrices coupled with the change of basis matrix composed of a special set of coefficients called the Clebsch-Gordan coefficients (which we will dive into in the next section).

where Q is a (2l+1)(2k+1) x (2l+1)(2k+1) orthogonal change-of-basis matrix where each element is a Clebsch-Gordan coefficient.

From this equation, we see that the Clebsch-Gordan change of basis matrix can be used to transform the (2l+1)*(2k+1)-dimensional tensor product into the direct sum of exactly one spherical tensor of each type ranging from |k-l| to k+l stacked into a single vector that rotates under the direct sum of Wigner-D matrices ranging from |k-l| to k+l. We can think of the change-of-basis operation as projecting the tensor from the combined space into several orthogonal subspaces that rotate under defined representations of SO(3).

Let’s solidify this abstract idea with a familiar example. Consider the tensor product of two type-1 tensors (3-dimensional vectors) a and b, which gives a 3 x 3 matrix (or 9-dimensional tensor):

We can extract some familiar values from this matrix:

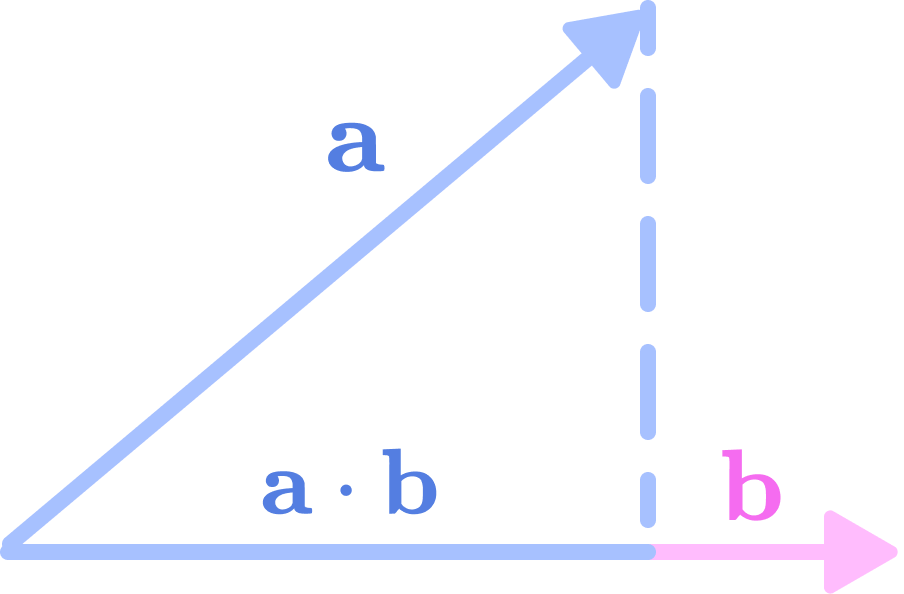

We can see that the trace of the matrix (sum of values along the diagonal) is equal to the dot product of a and b.

\(\mathbf{a}\cdot \mathbf{b}=a_xb_x+a_yb_y+a_zb_z\)

The dot product of two 3-dimensional vectors a and b is the length of the projection of a onto the line spanned by b. The dot product is invariant to rotation. (Source: Alchemy Bio) The dot product can be interpreted as the length of the projection of the vector a on the line created by vector b. If we rotate both a and b, the length of the projection shouldn’t change because the lengths of the individual vectors and the angle between them remain constant under rotation. So we can think of the trace as the type-0 spherical tensor component of the 9-dimensional Cartesian tensor that transforms invariantly under rotation.

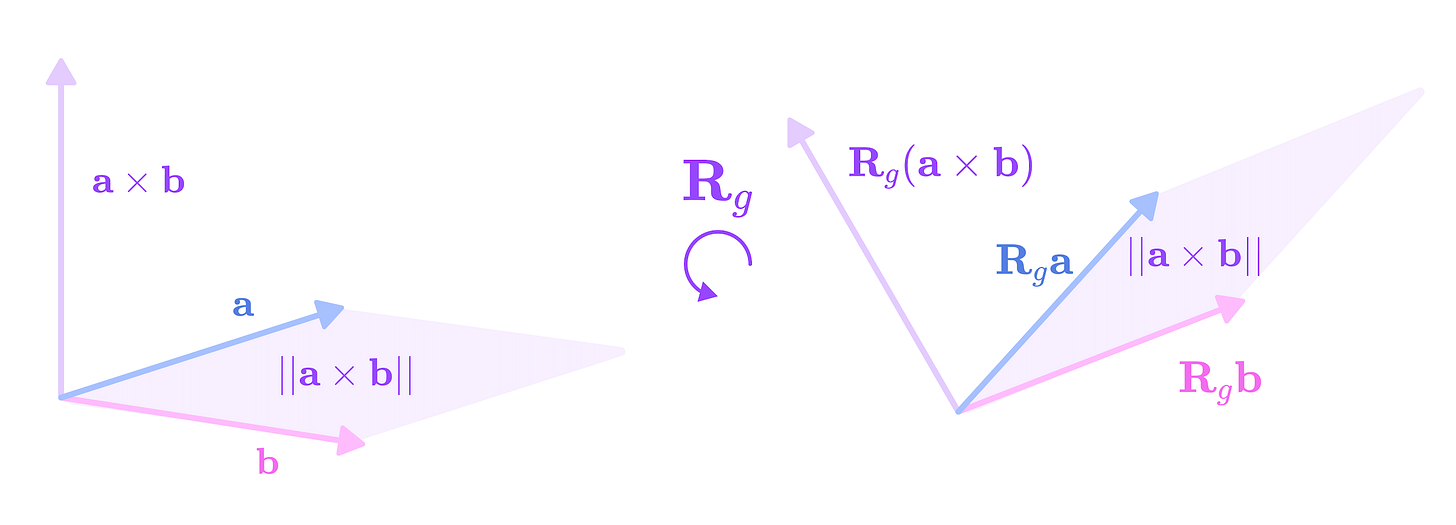

We can also extract the cross-product from this 3 x 3 matrix from the antisymmetric elements:

\(\mathbf{a}\times \mathbf{b}=\begin{bmatrix}a_yb_z-a_zb_y\\a_zb_x-a_xb_z\\a_xb_y-a_yb_x\end{bmatrix}\)

The cross product between two 3-dimensional vectors a and b is the vector perpendicular to both a and b (direction obtained from the right-hand rule) with a magnitude equal to the area of the parallelogram formed by the two vectors. The cross product is equivariant under rotation by the type-1 Wigner-D matrices. (Source: Alchemy Bio) The cross-product can be interpreted as the vector perpendicular to both vectors a and b with length equivalent to the area of the parallelogram formed by the two vectors. If we rotate a and b by the rotation matrix R, the cross-product should rotate by the same matrix R but the length would remain constant since rotations preserve lengths and angles. Thus, we can think of the cross product as the type-1 spherical tensor component of the 9-dimensional Cartesian tensor that transforms under the type-1 Wigner-D matrices (standard 3 x 3 rotation matrices).

Unfortunately, the type-2 spherical tensor component of the 3 x 3 matrix does not have a concrete physical interpretation. However, we can think of it as the traceless, symmetric part of the matrix that rotates under the type-2 Wigner-D matrices.

\(\begin{bmatrix}c(a_xb_z+a_zb_x)\\c(a_xb_y+a_yb_x)\\2a_yb_y-a_xb_x-a_zb_z\\c(a_yb_z+a_zb_y)\\c(a_zb_z+a_xb_x)\end{bmatrix}\)

The decomposed 9-dimensional Cartesian tensor is the direct sum of the type-0 (trace), type-1 (asymmetric), and type-2 (traceless, symmetric) spherical tensor components.

If we stack all three representations into a (1+3+5)-dimensional tensor, the resulting vector concatenation rotates under the direct sum of the type-0, type-1, and type-2 Wigner-D matrices:

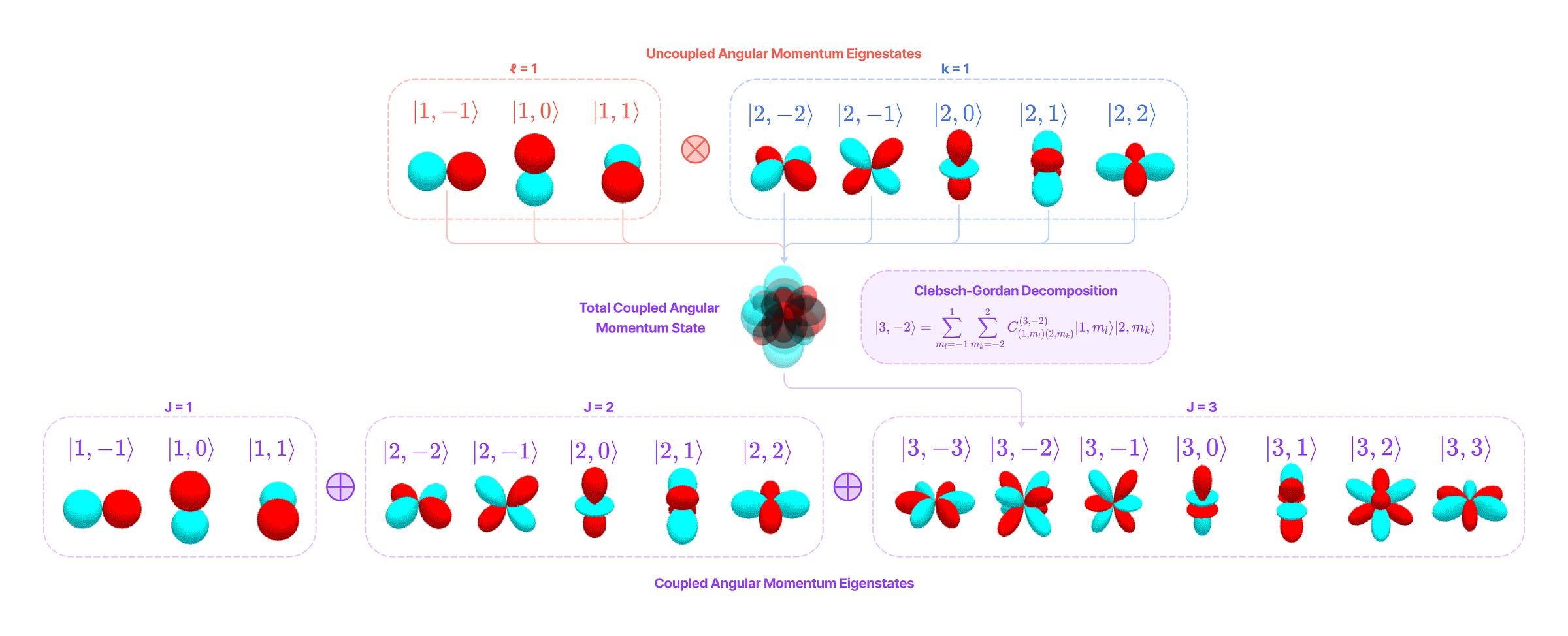

The tensor product in quantum mechanics is used to describe the overlap (or coupling) of two electron orbitals with well-defined angular momentum states |l, 𝑚_l⟩ and |k, 𝑚_k⟩. These are considered angular momentum eigenstates, because the values the total angular momentum (l and k) and magnetic quantum number (m_l and m_k) are eigenvalues of the angular momentum operators with their eigenfunction being the corresponding spherical harmonic function. Since angular momenta are vector quantities, we can consider the uncoupled angular momentum vectors of each eigenstate as a 3-dimensional vector and the coupled angular momentum as the vector addition of the eigenstate vectors.

When the angular momentum vectors of both uncoupled eigenstates are perfectly aligned, their coupled state has a maximum momentum of k + l and when they are perfectly disaligned, their coupled state has a minimum magnitude of |k - l|. The two eigenstates can overlap in any relative orientation, so the magnitude of the angular momentum vector of the coupled state can theoretically be anywhere between these two boundaries.

However, we discussed earlier that angular momentum is quantized, and can only have discrete values corresponding to non-negative integer values of l and integer values of m from -l to l. This means that we can think of the coupled state as a probability distribution of coupled eigenstates with well-defined angular momenta corresponding to integer values of l between |k - l| to k + l. The m value of the coupled eigenstates must be equal to the sum of the uncoupled eigenstates (m = m_l + m_k) since the projection of angular momentum on the z-axis is a scalar value without directionality. The probabilities of finding each coupled eigenstate in the total coupled state are represented by the Clebsch-Gordan coefficients, which can be used to decompose tensor products (total coupled state) into their spherical tensor components (coupled eigenstates).

Clebsch-Gordan Decomposition

Now, we will define the Clebsch-Gordan coefficients (CG coefficients) that form the change-of-basis matrices transforming tensors from the combined tensor product space into their spherical tensor components.

First, let’s develop some intuition about the purpose of the CG coefficients in the context of angular momentum coupling.

As mentioned earlier, the coupled angular momentum state is a probability distribution of coupled eigenstates with well-defined angular momenta. Each Clebsch-Gordan coefficient indicates the amplitude of the wavefunction corresponding to an eigenstate |J, 𝑚⟩ in the wavefunction of the coupled state |l, 𝑚_l⟩|k, 𝑚_k⟩. The square of the absolute value of the CG coefficients is the probability of finding the eigenstate |J, 𝑚⟩ in the coupled state |l, 𝑚_l⟩|k, 𝑚_k⟩, which means the probabilities across all coupled eigenstates must sum to 1:

\(\sum_{J=|k-l|}^{|k+l|}|C^{(J, m)}_{(l, m_l)(k, m_k)}|^2=1\)Furthermore, we can obtain the wavefunction of the coupled eigenstate |J, 𝑚⟩ for a defined value of J as a linear combination of coupled states |l, 𝑚_l⟩|k, 𝑚_k⟩ for different values of m_l and m_k scaled by the CG coefficients.

\(|J, m⟩=\sum_{m_l=-l}^l\sum_{m_k=-k}^kC^{(J, m)}_{(l, m_l)(k, m_k)}|l, m_l⟩|k, m_k⟩\)

There are (2l + 1)(2k + 1)(2J + 1) CG coefficients needed for the decomposition from the tensor product to one type-J spherical tensor component, which can be represented as a (2l + 1)(2k + 1) x (2J + 1) matrix. This is only a slice of the (2l + 1)(2k + 1) x (2l + 1)(2k + 1) matrix needed for the decomposition of the tensor product for all values of J.

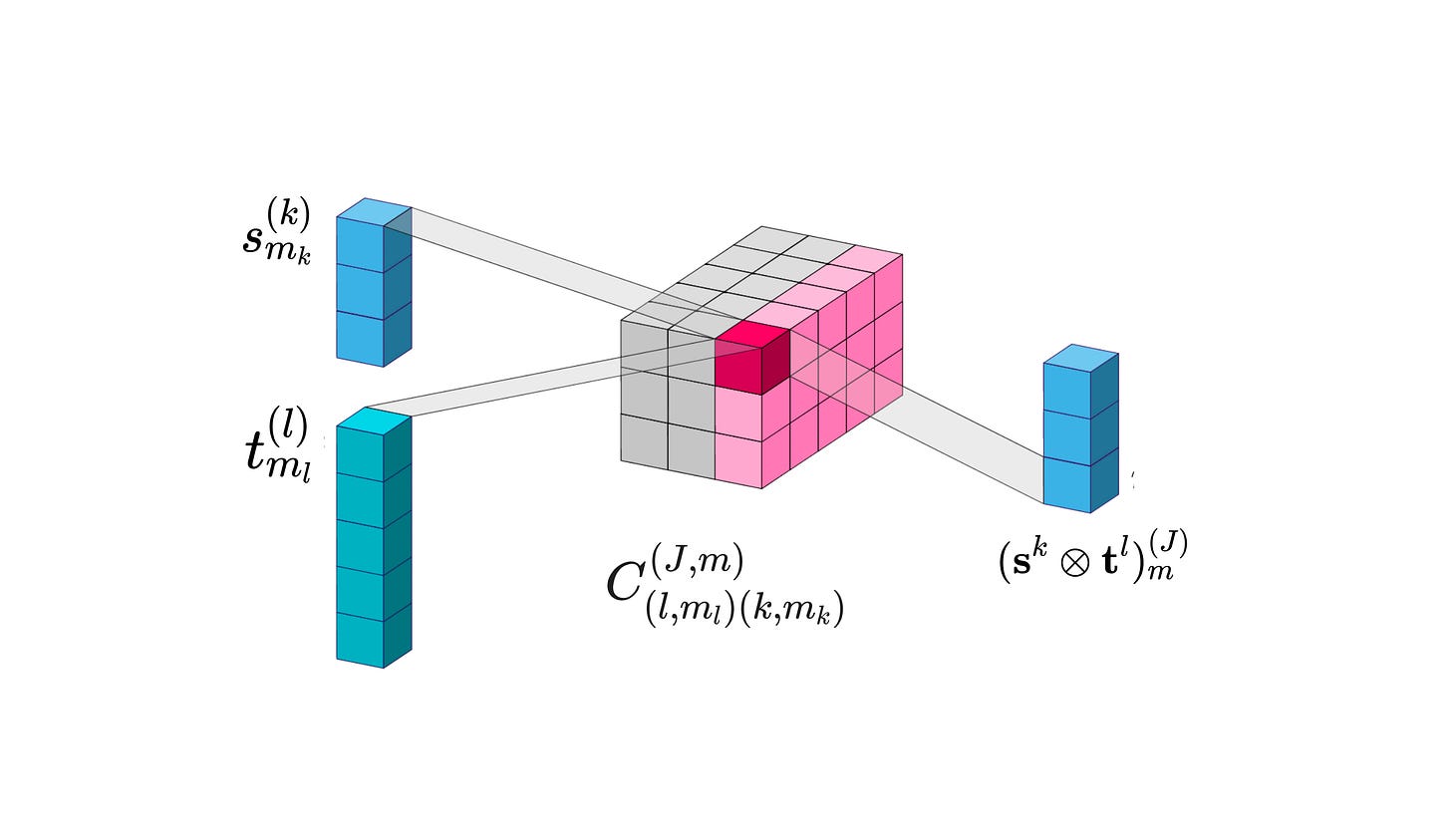

When decomposing the tensor product of a type-k and type-l tensor, each CG coefficient scales the product of the m_l dimension of the type-l tensor and the m_k dimension of the type-k tensor to give the mth dimension of the type-J spherical tensor component.

We can consider each CG coefficient C as a scaling factor that projects the (m_k, m_l) element of the tensor in the combined k ⊗ l tensor space to the orthogonal mth element of the orthogonal type-J spherical tensor subspace.

In a later section on computing the basis kernels, we will be breaking down how to calculate the Clebsch-Gordan change-of-basis matrices using the Sylvester equation.

Parameterizing the Tensor Product

A core mechanism of spherical equivariant geometric GNNs is combining tensors of various types with learnable weights and training them to learn rotationally symmetric relationships between nodes. To do this, we must introduce learnable parameters into the tensor product without breaking equivariance.

As we defined earlier, the tensor product of a type-k and type-l spherical tensor can be decomposed into k + l - |k - l| + 1 = 2min(l, k)+1 spherical tensors of types ranging from J = |k - l| to k + l. Since the output of the tensor product is no longer a spherical tensor, directly applying learnable weights to the tensor product will break equivariance since the elements do not transform predictably under rotation.

Instead, we can apply learnable scalar weights separately to each component of the decomposed spherical tensor components, since they are orthogonal and rotate independently under the irreps of SO(3).

The equivariance condition holds since the weight w is a type-0 scalar that is invariant to rotations.

We can define the parameterized tensor product of a type-k spherical tensor s and a type-l spherical tensor t as the direct sum of the type-J spherical tensor components each scaled by a weight indexed by the type of first input (k), the type of the second input (l), and the type of the component it is applied to (J) from the tensor product decomposition.

where the subscript J denotes the type-J component of the tensor product decomposition.

When taking the tensor product between two lists of spherical tensors of multiple channels of multiple feature types, we can think of the combination of a single tensor type from the first tensor list, a single type from the second tensor list, and the type of the decomposed tensor product component (k, l, J) as a path in the tensor product and assign a learnable parameter to each path. In the case above where we took the tensor product between a single type-k and type-l spherical tensor, the number of learnable weights equals the 2min(l, k) +1 possible values of J.

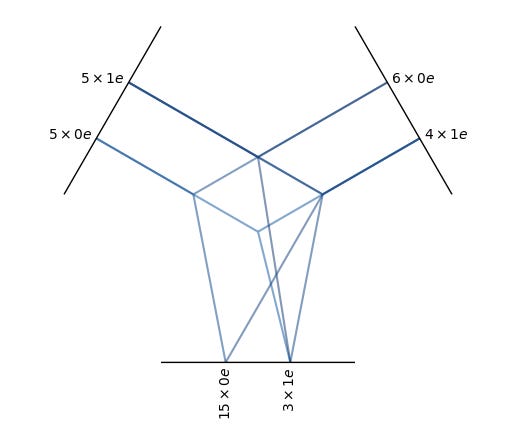

First, we will generalize to multi-type input tensors with a single channel of each type before extending to multiple channels in the section on Tensor Field Networks. The tensor product of the tensor list s of types ranging from k = 0 to K with the tensor list t of types ranging from l = 0 to L can be written as follows:

For every combination of input types (k, l) where k = 0 to K and l = 0 to L where J is in the range |k-l| to |k+l|, we extract the type-J component of the decomposed tensor product between the type-l channel and the type-k channel of the tensor list. Since each extracted type-J tensor corresponds to a single path (k, l, J), we scale the output with a unique learnable weight w.

Then, we take the element-wise sum of the weighted type-J tensors from every path (k, l, J) that share the same value for J, since they are all (2J+1)-dimensional and transform with the same irreps under rotation.

By repeating steps 1 and 2, we get a single tensor for every possible degree J from 0 to K+L, each of which is the weighted sum of all the decomposed type-J components generated from the tensor products between different combinations of degrees l and k from the input tensor lists.

These vectors can be concatenated into a single vector containing subvectors of types J = 0 to K+L.

Now, we can explicitly define each entry of the type-J spherical tensor component (m = -J to J) of the tensor product decomposition using the Clebsch-Gordan coefficients:

Let’s break down how to calculate the parameterized tensor product with an example that applies to equivariant kernels: the tensor product between the list of feature tensors and the list of spherical harmonics projections of the angular unit vector.

Suppose we have a feature list f that contains a type-0 and type-1 tensor. We also have the angular unit vector x̂ between nodes i and j projected into type-0 and type-1 tensors using the type-0 and type-1 spherical harmonics. We can denote each tensor list as a vector of stacked spherical tensors:

First, we can determine every path (l, k, J) that results in a type-J spherical tensor component for all possible values of J ranging from |0-0|=0 to 1+1=2.

Then, we compute the tensor products between every pair of tensors in the input lists, decompose them into their spherical tensor components, scale each path by a weight, and take the sum over all the outputs with the same degree.

The type-0 (J = 0) sum of the paths (0, 0, 0) and (1, 1, 0) is a scalar:

The type-1 (J = 1) sum of the paths (0, 1, 1), (1, 0, 1), and (1, 1, 1) is a 3-dimensional vector:

The type-2 (J = 2) output of the path (1, 1, 2) is a 5-dimensional vector:

Finally, we can concatenate each weighted sum into a single 9-dimensional vector to get the output of the tensor product between the feature tensor list and the list of spherical harmonics projections of the angular unit vector.

Review

Since we’ve covered quite a lot of concepts, let’s synthesize these concepts in the context of SO(3)-equivariance:

The group of rotations in three dimensions is called the SO(3) group and the group representations are N x N orthogonal matrices that can be decomposed into irreducible representations (irreps) called Wigner-D matrices.

Wigner-D matrices can act on any tensors after applying a change-of-basis matrix; however, they act directly on special types of tensors called spherical tensors that are generated by spherical harmonics. Spherical harmonics form a complete, orthonormal basis of functions on the unit sphere that can project vectors on the unit sphere to spherical tensors that can be transformed directly and equivariantly with Wigner-D matrices.

These special spherical tensors are divided into types (or degrees) that are denoted by a non-negative integer (l = 0, 1, …) and are 2l+1-dimensional. The Wigner-D matrices that act directly on type-l tensors have dimensions (2l+1) x (2l+1) and there is a set of 2l +1 spherical harmonic functions that project vectors into type-l tensors. Higher-degree spherical tensors change more rapidly under rotation and are represented by higher-frequency spherical harmonic functions on the unit sphere.

The tensor product is a tensor operator that transforms two lower-degree tensors into a higher-degree tensor. The tensor product of two spherical tensors is no longer a spherical tensor but can be separated into exactly one spherical tensor of each type, ranging from |k - l| to k + l by multiplication with a change of basis matrix containing Clebsch-Gordan coefficients.

The tensor product allows us to pass messages from a type-k feature from a neighborhood node to a type-l feature at the center node without breaking equivariance. This is done by extracting the type-l spherical tensor component of the tensor product between the type-k feature with a spherical tensor generated from spherical harmonics.

Weights or learnable parameters can only be applied after decomposing the tensor product into its spherical tensor components, which means a maximum of one parameter can be applied for every set of values (l, k, J) corresponding to the two input types and the tensor product component type, respectively.

Now, we will be putting these ideas into practice to generate an equivariant kernel that transforms between degrees of spherical tensors and generates messages between nodes.

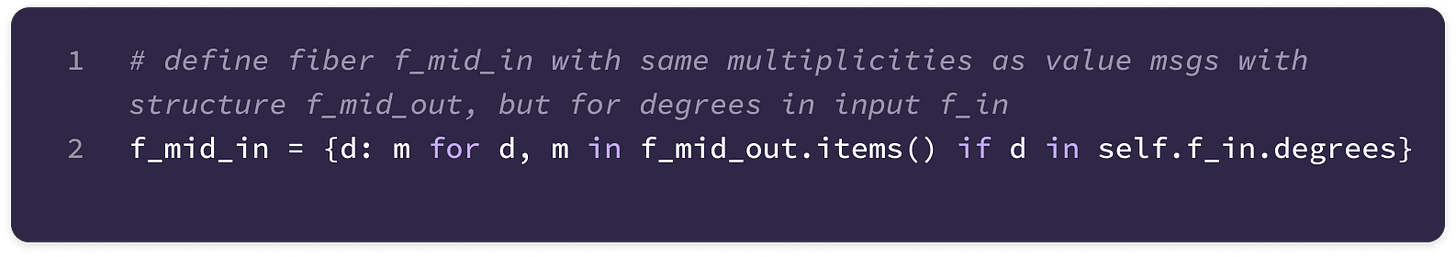

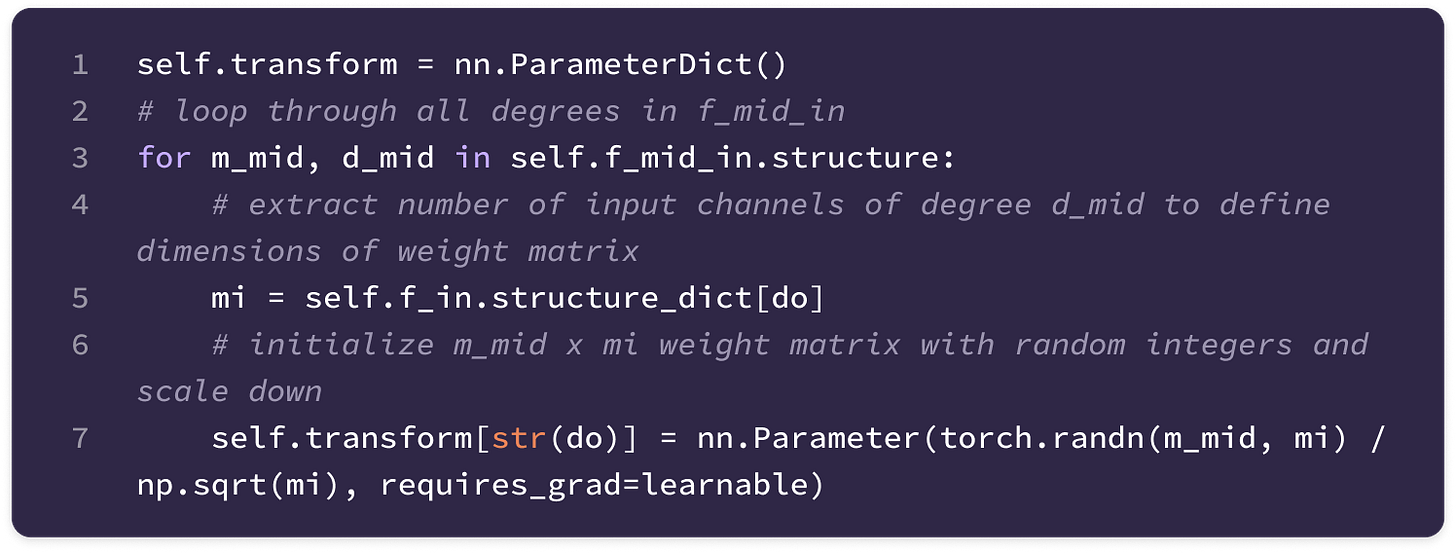

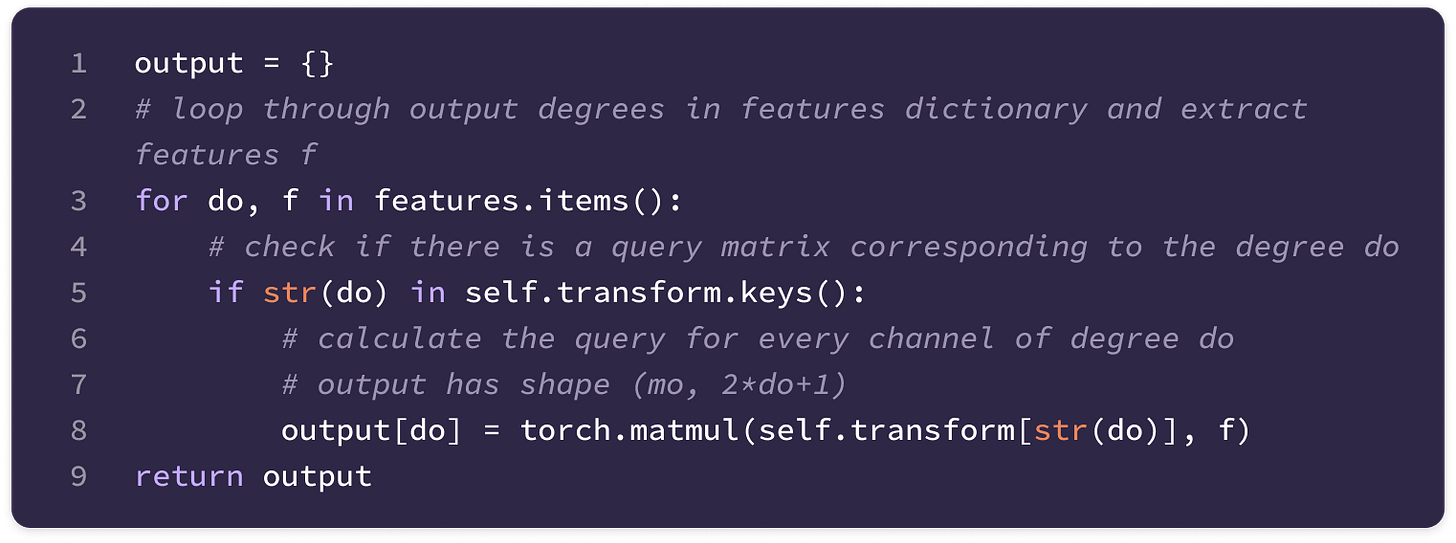

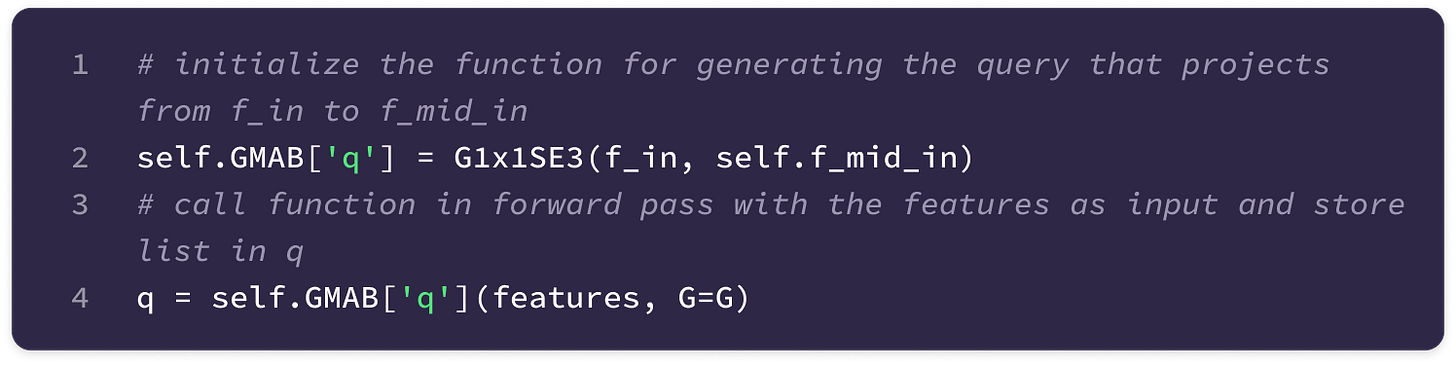

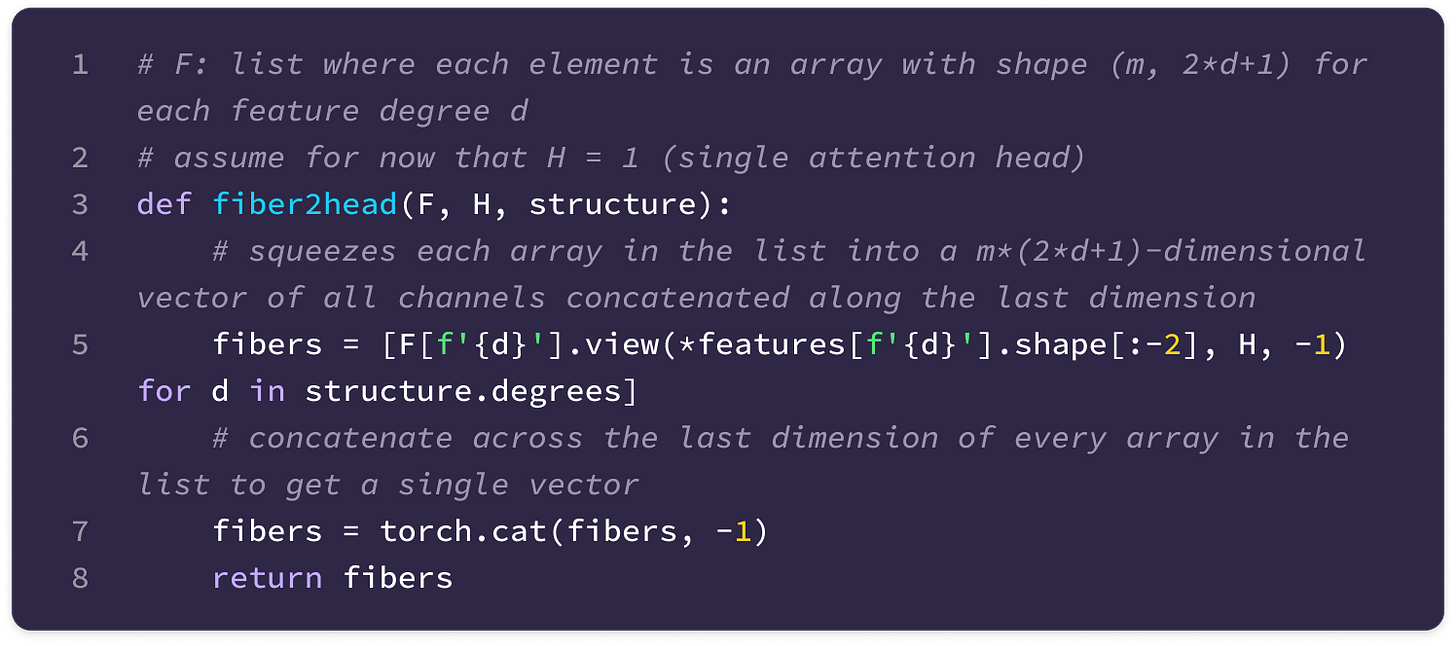

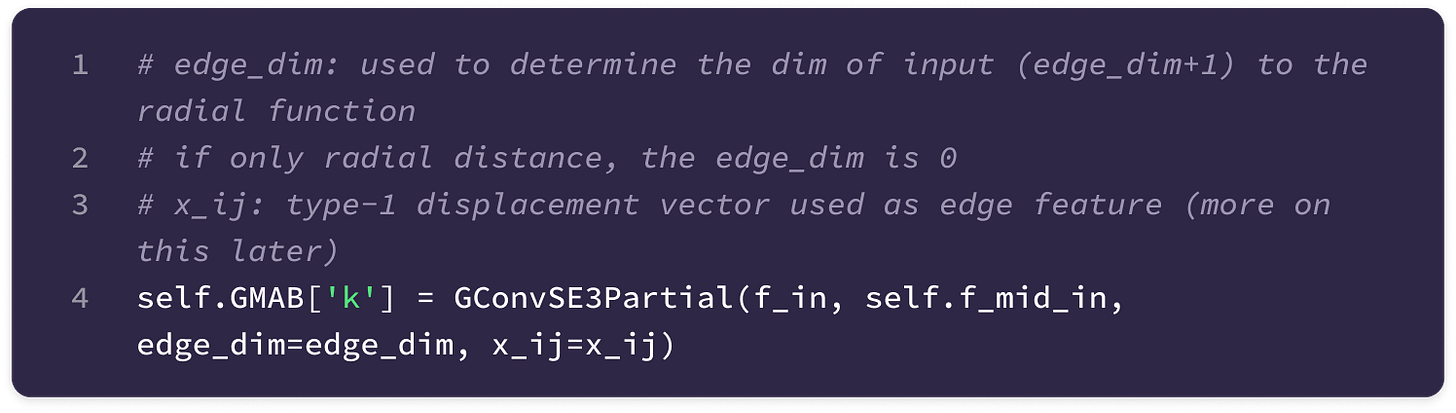

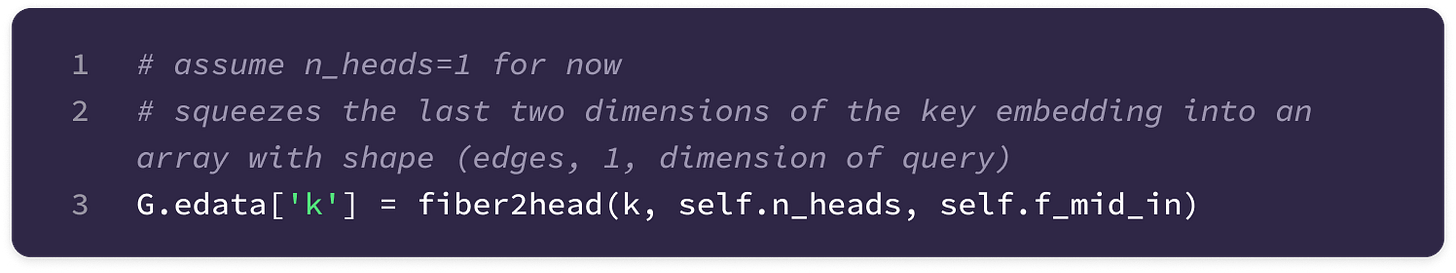

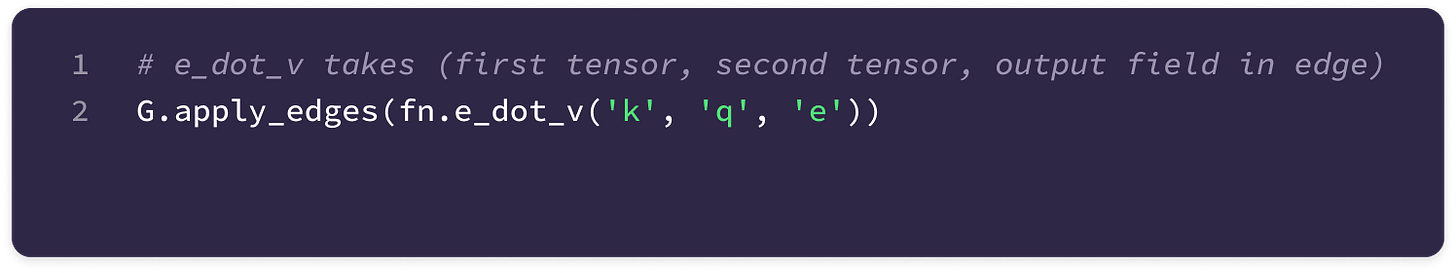

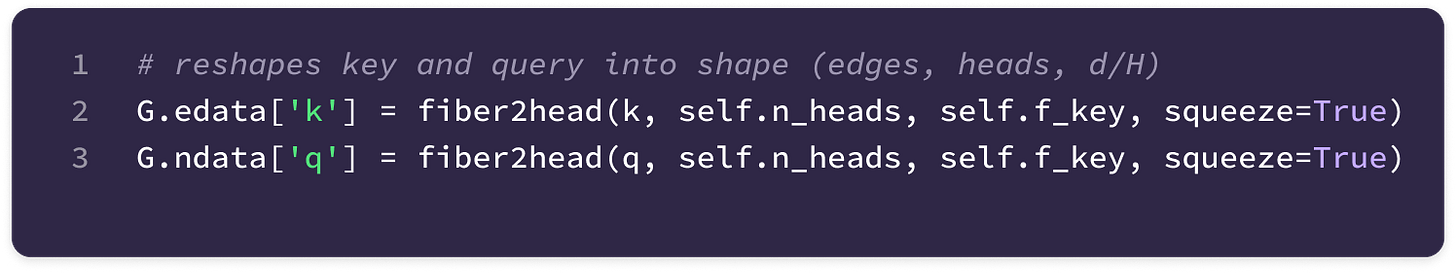

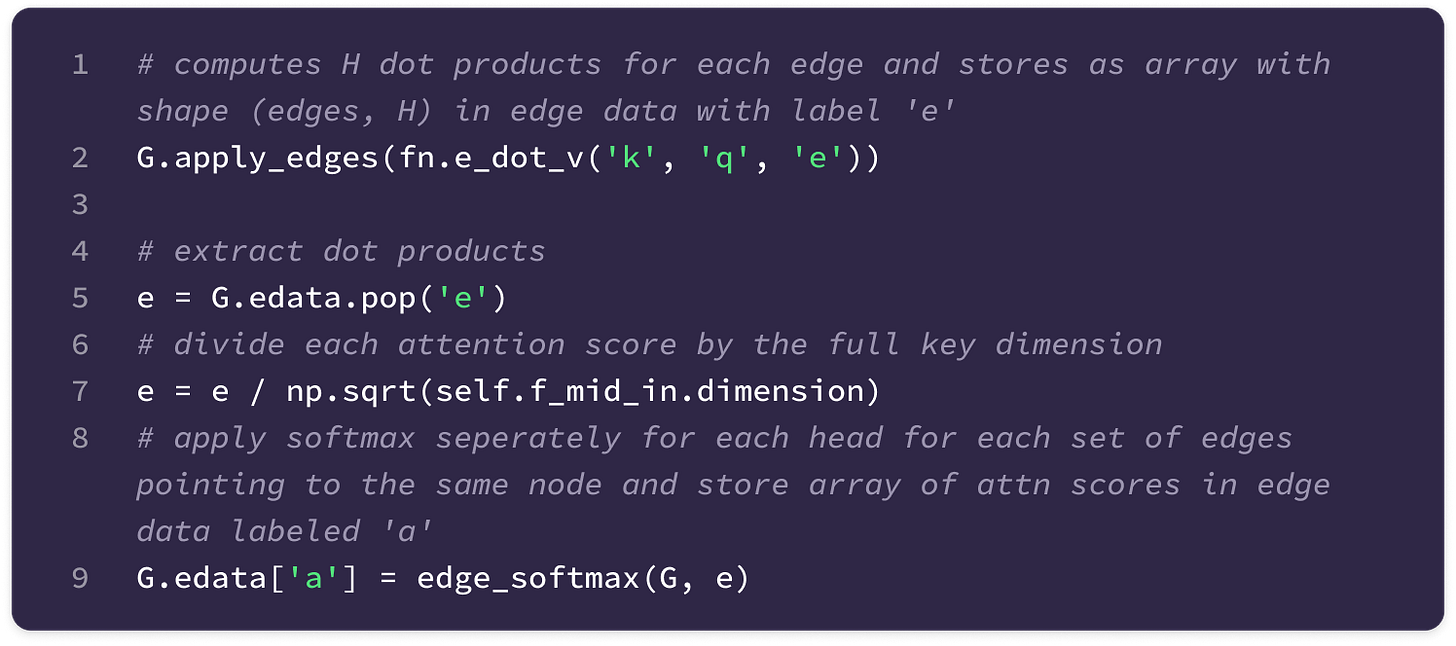

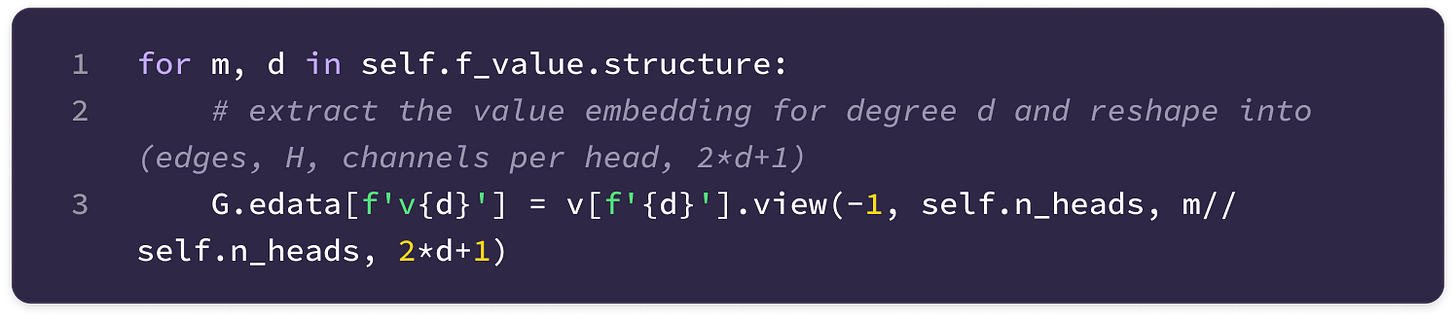

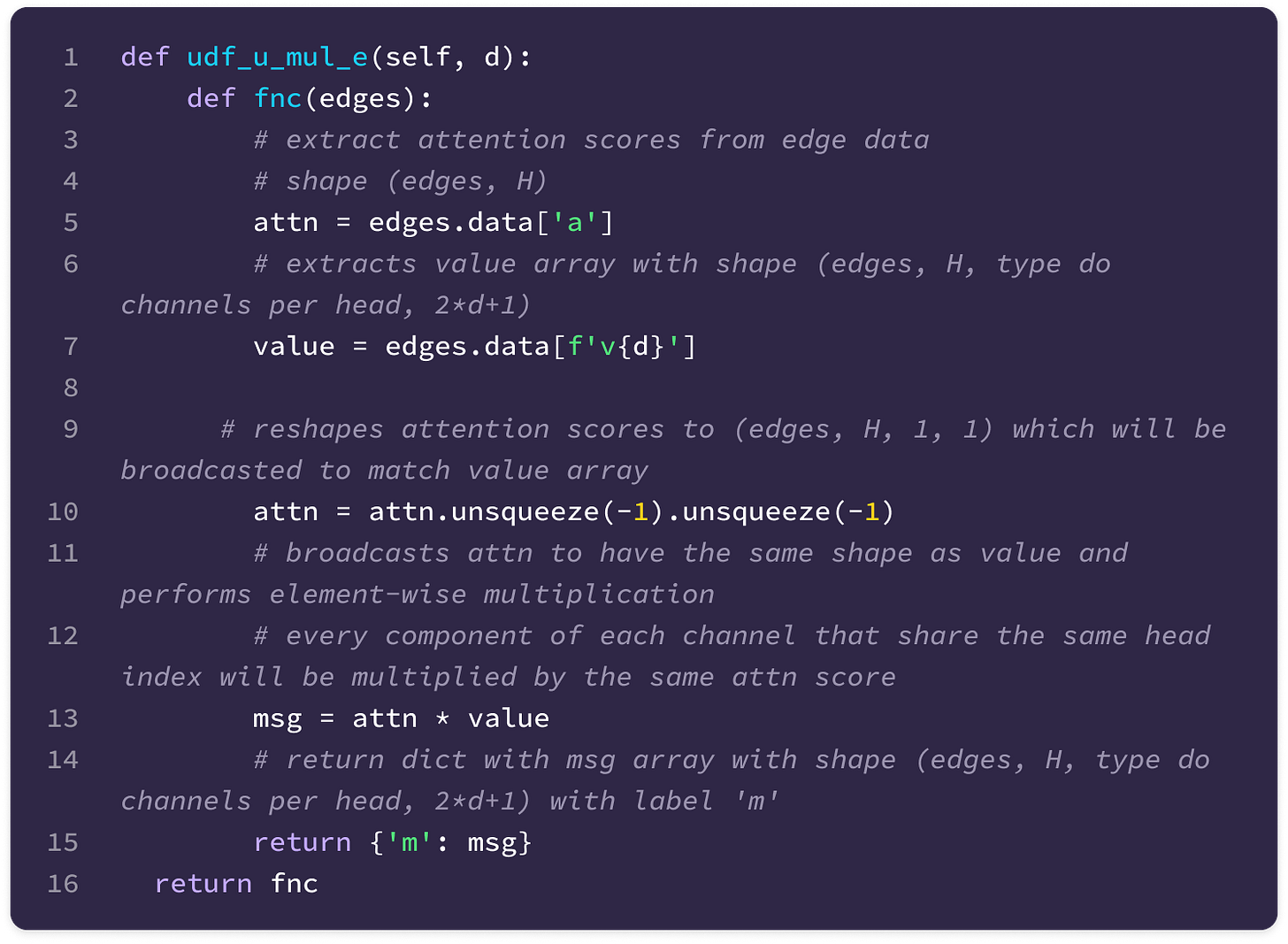

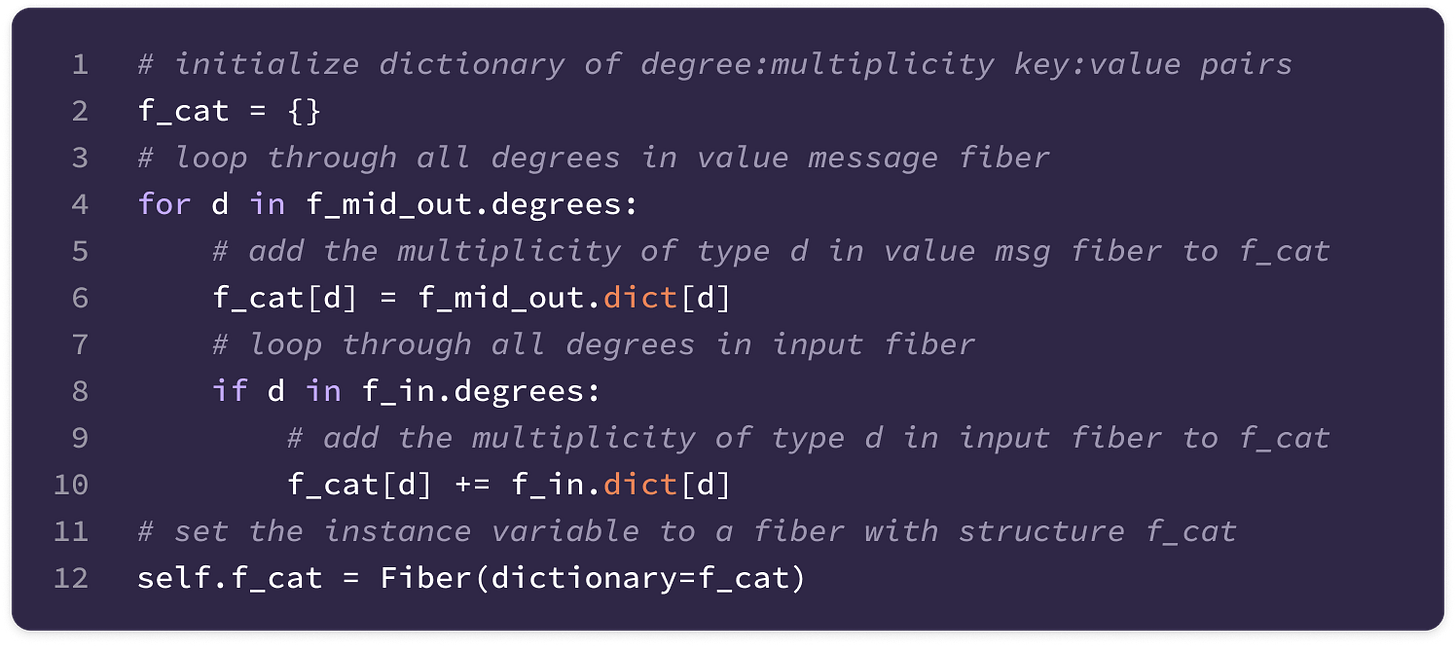

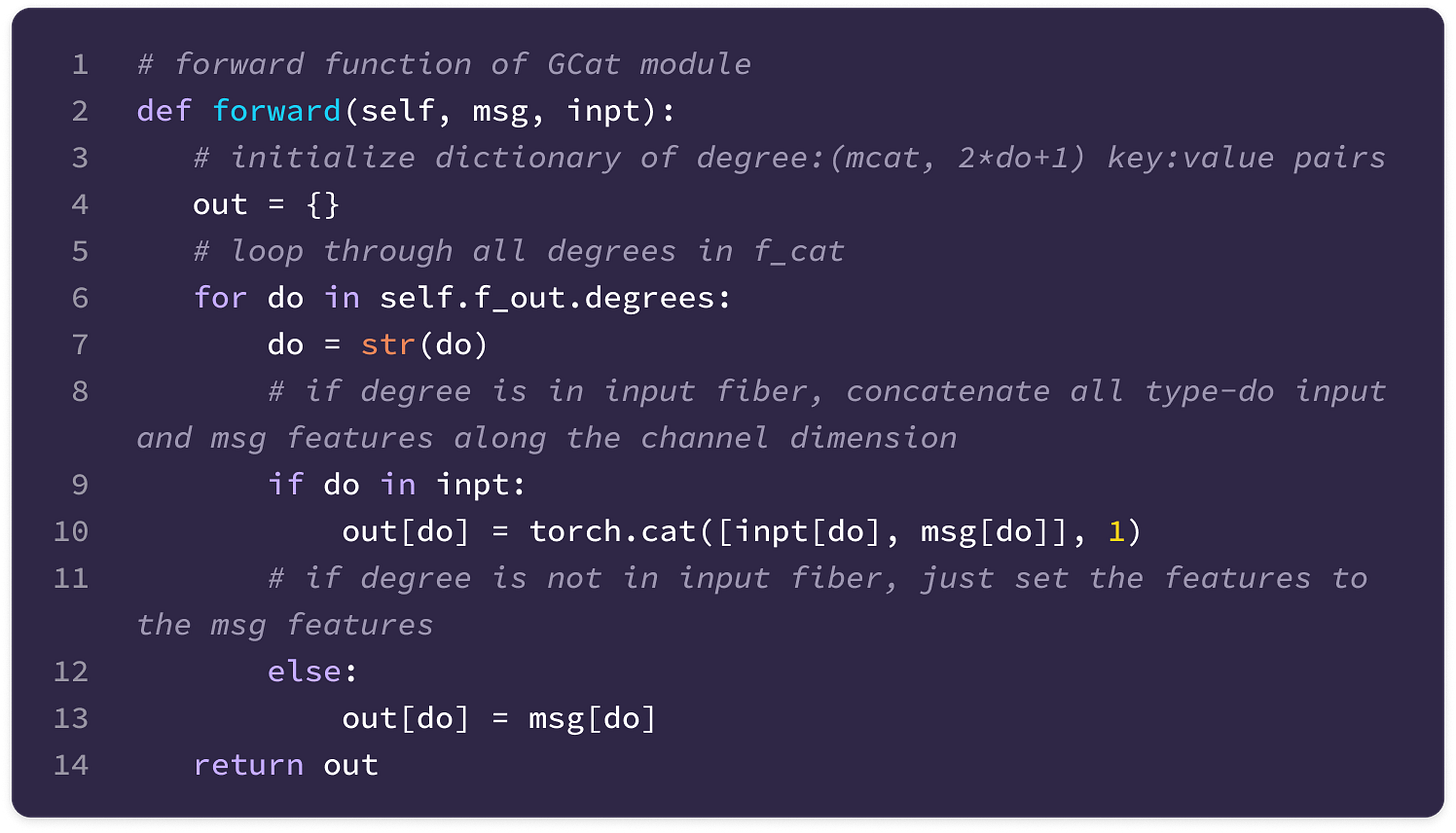

Before getting started, the code implementation of SE(3)-Transformers uses a novel data structure called fibers to keep track of node and edge features. The structure of a fiber is a list of tuples that are used to define the degrees and number of channels that are inputted and outputted from equivariant layers in the form [(multiplicity or number of channels, type or degree)]. The code implementation will often extract a (multiplicity, degree) pair from a fiber structure in the following way:

You can find the full implementation of the data structure here.

Constructing an Equivariant Kernel

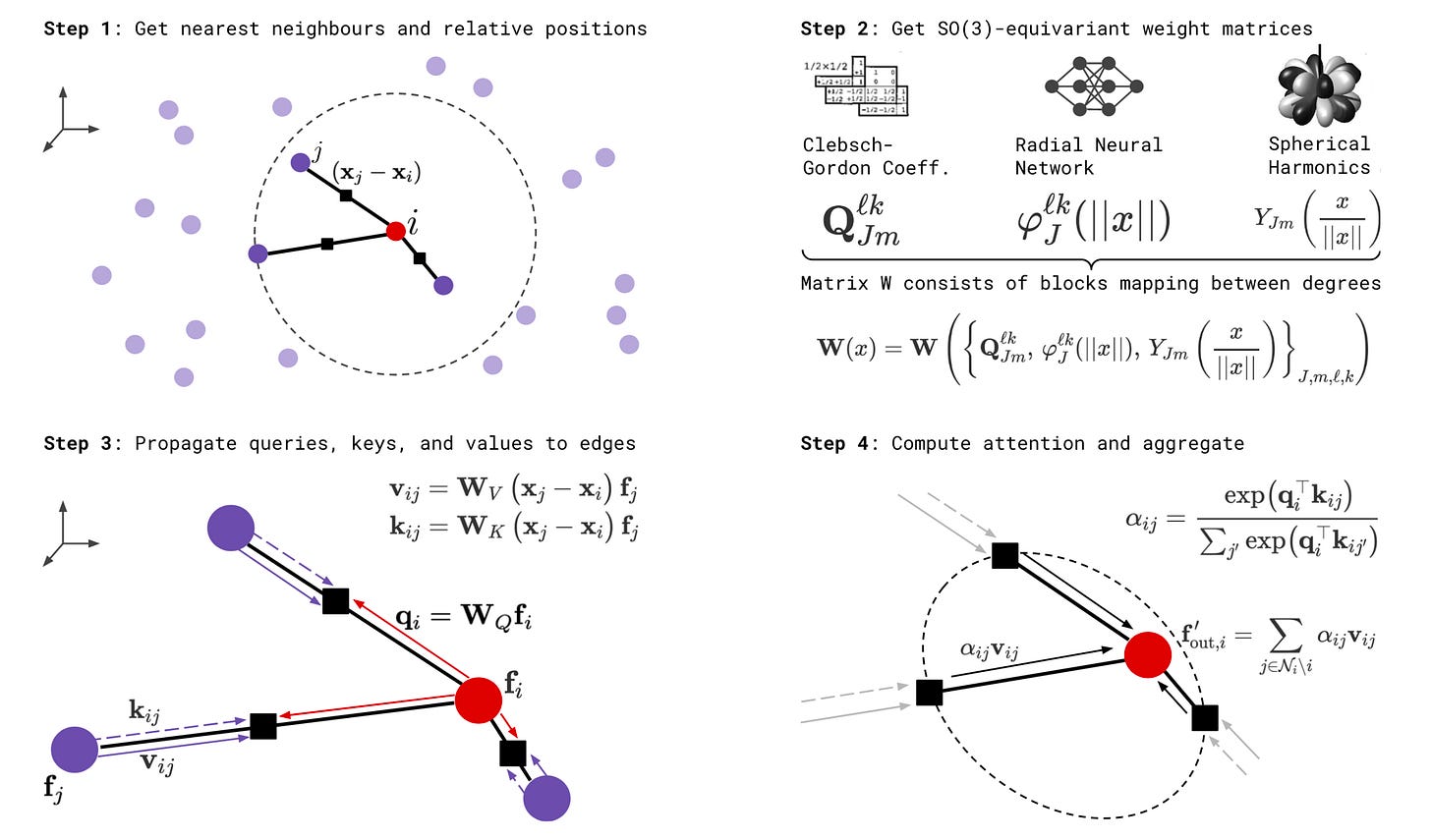

To facilitate message-passing between spherical tensors of different types, we want to construct a kernel that can take a single type-k input feature and directly transform it into a type-l feature.

In a non-equivariant setting, this is simple. All we need is to multiply by a (2l + 1) x (2k + 1) kernel of learnable weights to transform the (2k + 1)-dimensional type-k tensor to a (2l + 1)-dimensional type-l tensor. However, multiplying a randomly initialized kernel would break equivariance, so we must carefully define how to construct a kernel W of the same dimensions that linearly transforms type-k to type-l tensors while preserving SO(3)-equivariance.

This kernel should also be dependent on the displacement vector from node j to i which encodes the distance and relative angular relationship between the two nodes.

With these ideas in mind, let’s define the kernel W as a function that takes the displacement vector as input and outputs a (2l + 1) x (2k + 1) linear transformation matrix.

Deriving the Equivariant Kernel Constraint

To construct an equivariant kernel, we must first define how it must operate under rotations. I will be deriving the kernel constraint from scratch since it is the fundamental building block of equivariant GNNs. This section took a while for me to write as it forced me to wrestle with various notation-heavy derivations from publications, but I have broken it down to help you develop an intuitive understanding of the underlying concepts.

We know that W transforms a type-k input feature from node j to type-l features for message passing to node i:

This must satisfy the equivariance constraint on SO(3):

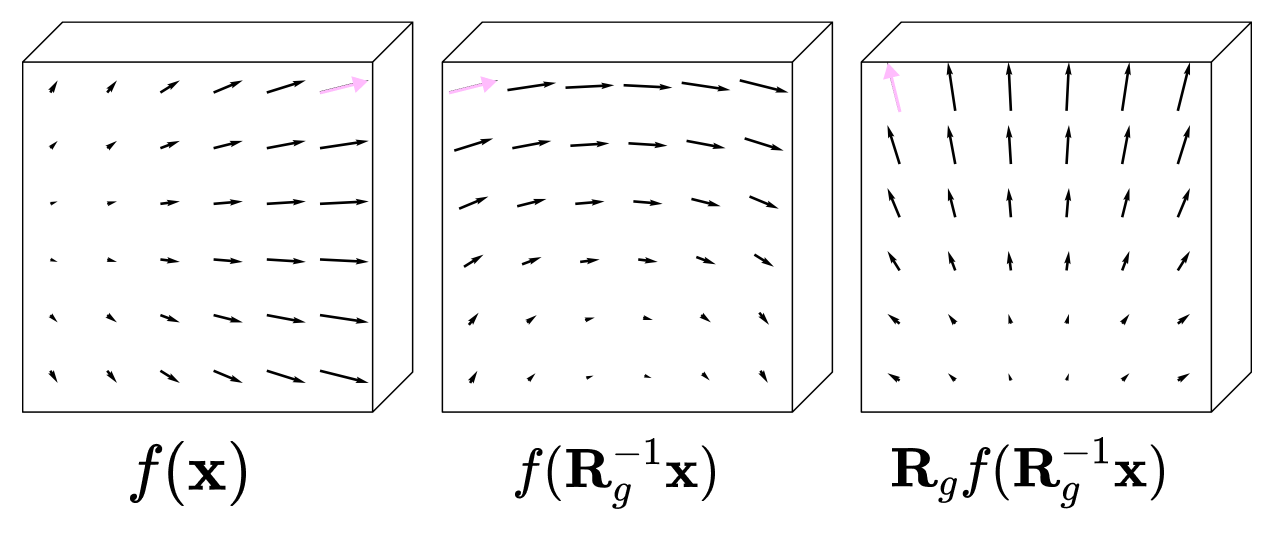

We can think of the kernel applied to a specified type-k feature as a tensor field. A field is a mathematical object that assigns a mathematical object to every point in space. In this case, for every point in 3-dimensional space (defined by the vector x), there is an assigned type-l tensor:

Rotating a tensor field is not as simple as rotating the tensors themselves since both the orientation and position of the tensors must rotate.

We can visualize this idea with an example: consider rotating the vector field f(x), which assigns a 3-dimensional vector to every point in 3D space by 90 degrees counterclockwise.

To rotate the vector field, we must perform two operations:

First, we shift the vector assigned to the point R⁻¹x to a new, rotated point 90 degrees counterclockwise from the original point x without changing its orientation. This is done by applying the inverse rotation matrix to the point x so that the vector field f(x) outputs the same vector as it would at the unrotated point.

Then, we rotate the vector at point x itself using the 3 x 3 rotation matrix R.

The rotated tensor field can be written with the following expression, where g denotes the 90-degree counter-clockwise rotation:

Let’s apply the same idea to rotate the tensor field expression by g.

First, we shift the tensor assigned to the unrotated point R⁻¹x to a new point rotated by g without changing its orientation by applying the inverse 3 x 3 rotation matrix to the point x. This sets the tensor assigned to the rotated point to be the same as the unrotated point. Then, we rotate the output type-l feature of the expression by the type-l Wigner-D matrix for g.

This gives us the definition of rotating the type-l output of the kernel by g in terms of the type-k input and the displacement vector:

Intuitively, when we rotate the point cloud, the kernel also changes since it is dependent on the displacement vector. However, we can only apply the equivariant condition on a ‘fixed’ function. This means that when applied in the same way to both an unrotated and rotated point cloud, the function can recognize rotated input features and operate on them equivariantly, such that the output is rotated accordingly. So we have to rotate the displacement vector back to its original frame to ensure that the kernel is defined the same way when operating on the rotated feature.

Now that we have defined how to rotate the entire tensor field expression by g, we can use it to rewrite the equivariance constraint defined earlier using our new definition for the rotated type-l output feature.

We can substitute x with the vector rotated by g:

and multiply the inverse of the type-k Wigner-D matrix on both sides to get:

The last line of the derivation above is called the kernel constraint because a kernel is SO(3)-equivariant if and only if it is a solution to the constraint, which is rewritten below for clarity:

We can convert the constraint into an equivalent matrix-vector form by vectorizing both sides and using the tensor product identity defined earlier with the property that Wigner-D matrices are orthogonal (its inverse is equal to its transpose).

As discussed previously, the Kronecker product of the type-k and type-l Wigner-D matrices (in this order) is a reproducible representation of SO(3) that acts on (2l+1)(2k+1)-dimensional tensors in a way that is equivalent to (1) applying a change-of-basis matrix Q that converts the tensor into the direct sum of spherical tensors of degree ranging from |k - l| to |k + l|, (2) applying the block diagonal matrix of the Wigner-D irreps corresponding to every degree ranging from |k - l| to |k + l|, and (3) changing the tensor back to its original basis. Q is an orthogonal (2l+1)(2k+1) x (2l+1)(2k+1) matrix that is composed of Clebsch-Gordan coefficients.

By multiplying both sides by Q and denoting the Clebsch-Gordan decomposition of the vectorized kernel with η, we can rewrite the equation as:

where:

Since η can be directly transformed by the block diagonal matrix of type |k - l| to |k + l| Wigner-D irreps without a change-of-basis, we know it must be the direct sum of spherical tensors of degrees ranging from J = |k - l| to |k + l| that rotate independently under the corresponding type-J Wigner-D block:

These properties are exactly what define the spherical harmonic projections of the angular unit vector to spherical tensors that directly rotate under Wigner-D matrices. This means we can set η equal to the direct sum of spherical tensors of types ranging from |k-l| to |k+l| derived from evaluating the type-J spherical harmonic functions on the unit angular displacement vector.

Since the spherical harmonics only restrict the orientation of the angular component of the displacement vector, we can modulate the radial distance without breaking equivariance. This allows us to incorporate a uniquely defined radial function (which we will define in the next section) for each value of J that maps the radial distance to a weight that scales the independently equivariant type-J spherical harmonic.

Now, we can set the two expressions for η equal to each other to derive an expression for the equivariant kernel:

Multiplying the transpose of the full (2l+1)(2k+1) x (2l+1)(2k+1) Clebsch-Gordan change-of-basis matrix with the direct sum of all the types of spherical harmonics and unvectorizing the product is equivalent to taking the matrix-vector product of each transposed (2l+1)(2k+1) x (2J+1) type-J slice of the full CG matrix with the type-J spherical tensor component, unvectorizing the product, and taking the sum across all values of J. So, we can rewrite the equation for the equivariant kernel as:

Now, we denote the expression containing the unvectorization operation as the type-J basis kernel that transforms the input tensor with the type-J projection of the angular displacement vector and projects the output back to its original basis through the type-J slice of the CG change-of-basis matrix.

The Jth slice of the transpose of Q corresponds to the Clebsch-Gordan coefficients that project the orthogonal basis J back to the coupled basis of type-l and type-k spherical tensors.

The Jth basis kernel can also be written as a linear combination of (2l + 1) x (2k + 1) Clebsch-Gordan matrices corresponding to fixed values of m_l and m_k and all values of m between -J and J scaled by the spherical harmonic function with degree J and order m evaluated on the angular unit vector.

We have shown that every equivariant kernel lies in the orthogonal basis spanned by the basis kernels defined for each type of spherical harmonic, ranging from |k - l| to k+l and can be constructed by taking the linear combination of the basis kernels.

Equivariant kernels are also called intertwiners in literature, which refer to functions that are linear and equivariant.

We can think of the equivariant kernel as transforming the type-k input tensor in orthogonal type-J spherical harmonics bases of varying degrees and transforming it back to its original basis via the transposed CG matrices.

The equivariant kernel can detect rotationally symmetric patterns of varying frequencies from the input feature relevant to the prediction task, which is analogous to how a set of convolutional filters in a CNN is applied to generate multiple feature maps that enhance the signal of various patterns in the input images for object detection. The signals across the different frequencies are then reduced to a single type of output tensor that can be aggregated with other messages, similar to how the set of feature maps produced by convolutional filters are aggregated into a single feature map for further processing.

Computing The Basis Kernels

Since each basis kernel is used to construct every equivariant kernel transforming between types k and l for a given edge across all SE(3)-equivariant layers in a model, it is convenient to calculate and store them for repeated use. This involves two steps: precomputing the change-of-basis matrices and the spherical harmonic projections of the angular unit vector for every edge up to a maximum spherical tensor degree J.

First, we want to calculate the (2l+1)(2k+1) x (2J+1) change-of-basis matrix Q transpose for all values of J, such that the following equation holds:

Instead of constructing Q from the Clebsch-Gordan coefficients directly, the implementation of the 3D-steerable CNN and the SE(3)-Transformer (which adopts the same method) compute Q by solving the Sylvester equation, which I will be deconstructing below.

Q converts a tensor into the direct product of spherical tensor components that each transform independently in orthogonal subspaces via multiplication with the corresponding Wigner-D block in the block diagonal matrix. Then, the transpose of Q projects each of the rotated spherical tensors back to the original coupled basis. Since the operation of the left-hand side for each value of J is independent, they must all satisfy the following equation:

Since Q is an orthogonal matrix where the inverse is equal to its transpose, we can rewrite the above equation as:

This is in the form of a homogeneous Sylvester equation:

In this equation, A and B are matrices, and we want to solve for the matrix X. In our case, these matrices are defined below:

To simplify the computation of the matrix X, we can convert it into a standard linear algebra problem in the form of the homogeneous equation Mx = 0, where we can solve for the vector x by finding the null space of the matrix M. To do this, we vectorize the matrix X and write the matrix-matrix product into an equivalent matrix-vector product.

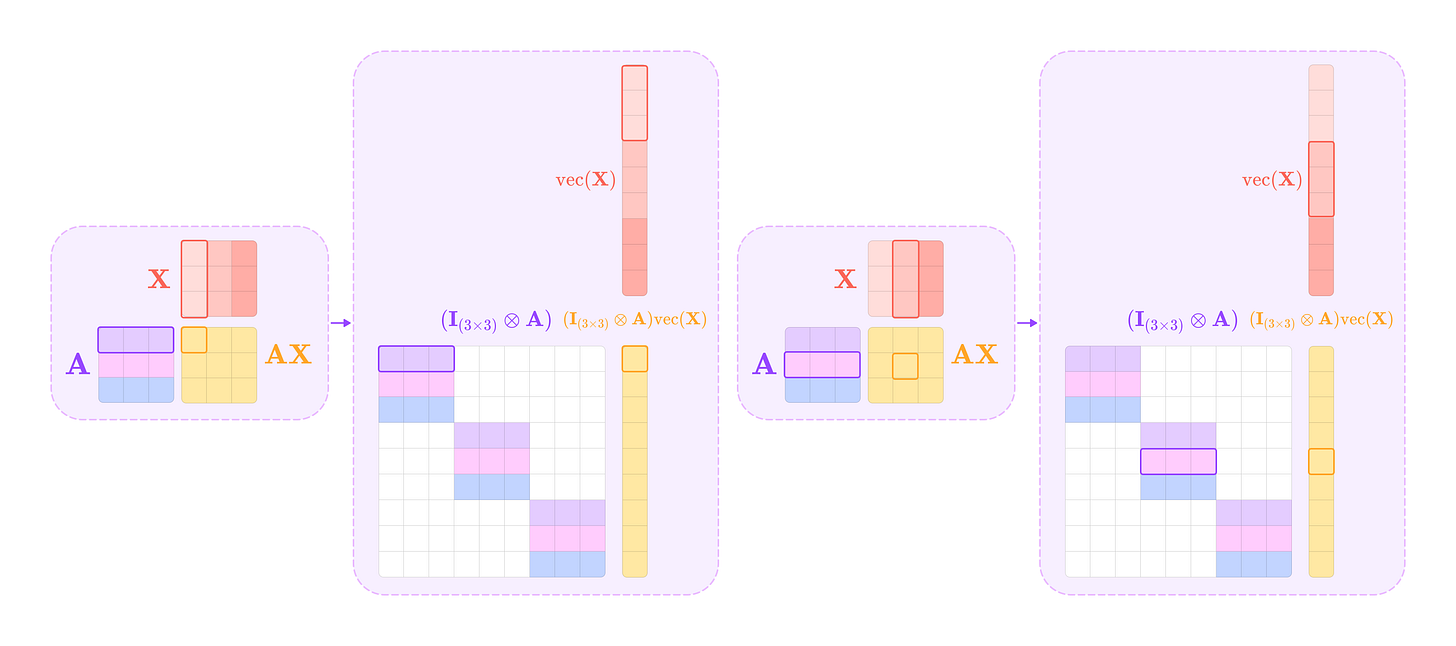

The matrix product AX is equivalent to the matrix-vector product below:

The Kronecker product between the identity matrix I and the matrix A produces a square block diagonal matrix where every block along the diagonal is the matrix A repeated by the dimension of I. Taking its matrix-vector product with the matrix X stacked into a vector produces the vectorized equivalent to the matrix product AX.

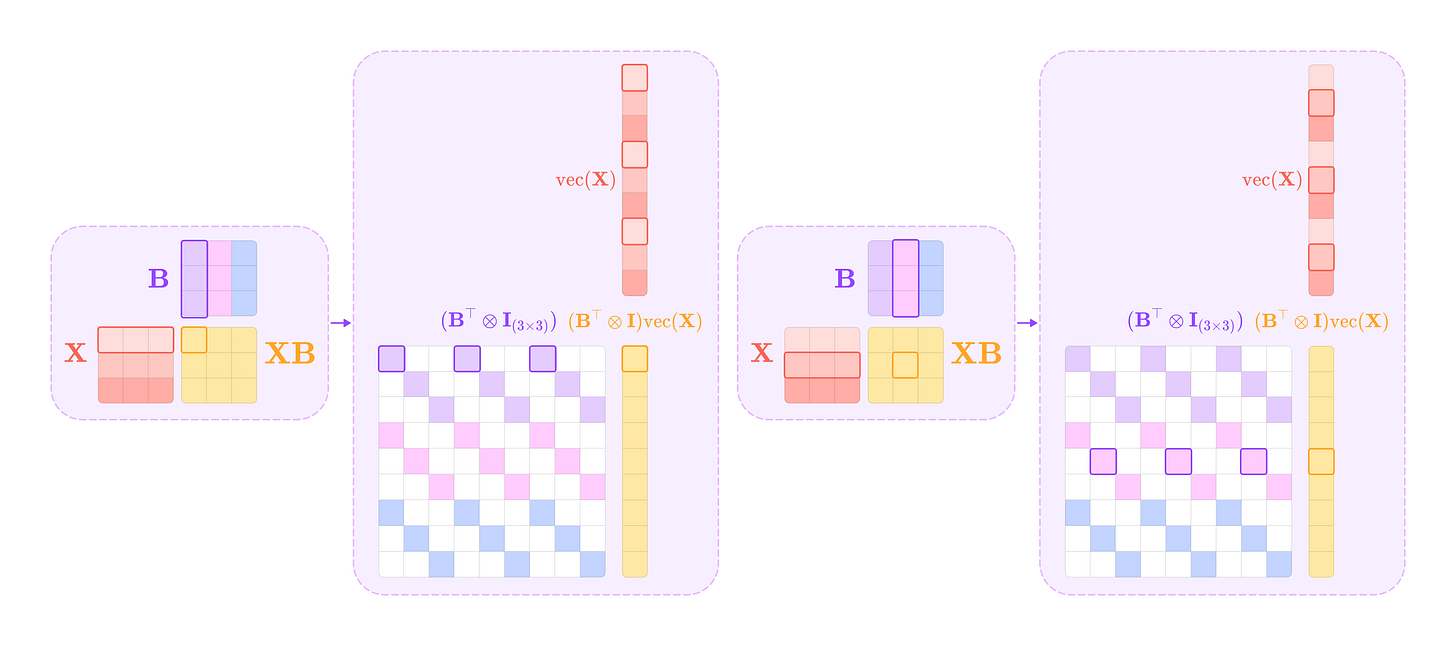

The matrix product XB is equivalent to the following matrix-vector product:

The Kronecker product between the transpose of B and the identity matrix I produces a matrix of matrices where every inner matrix has the element of B transpose corresponding to its position in the outer matrix repeated along the diagonal with the same dimension as I. Taking its matrix-vector product with the vectorized matrix X gives the vectorized equivalent to the matrix product XB.

Making these substitutions allows us to isolate the vectorized matrix X:

This is in the form of the homogenous matrix-vector product Mx = 0, where we can solve for the vector x by finding the non-zero vectors in the null space of M. In the equation above, we can solve for the vectorized matrix X, by finding the null space of the following matrix:

In our case, we want to solve the following homogeneous equation:

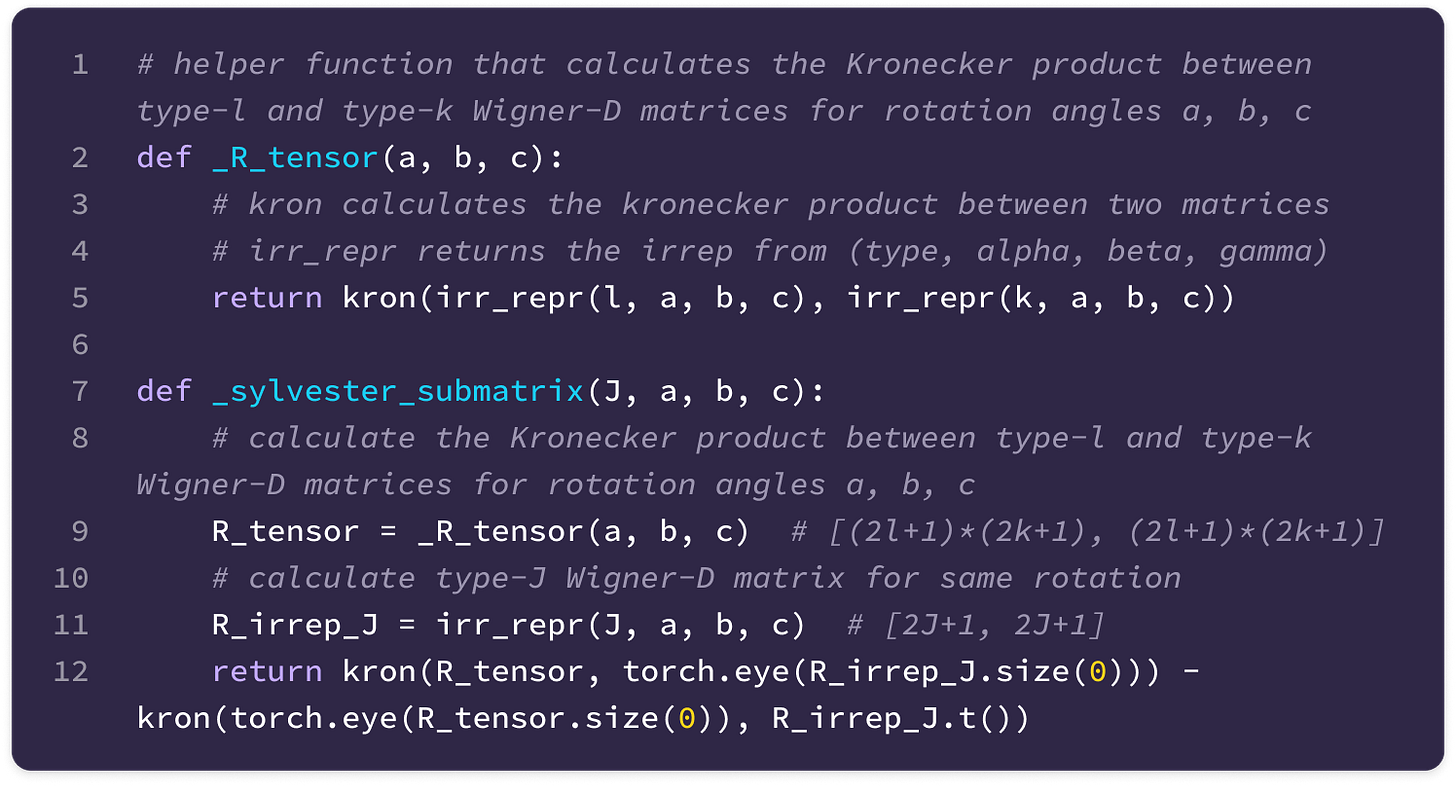

Let’s break down how the type-J slice of the change-of-basis matrix Q transpose is computed in the code implementation:

First, we calculate the type-l, type-k, and type-J Wigner-D matrices on randomly defined Euler angles alpha, beta, and gamma. For the type-l and type-k matrices, we take their Kronecker product, producing a (2l+1)(2k+1) x (2l+1)(2k+1) matrix. Note that the implementation of the kron function takes the second matrix in the Kronecker product as the first input and the first matrix as the second input.

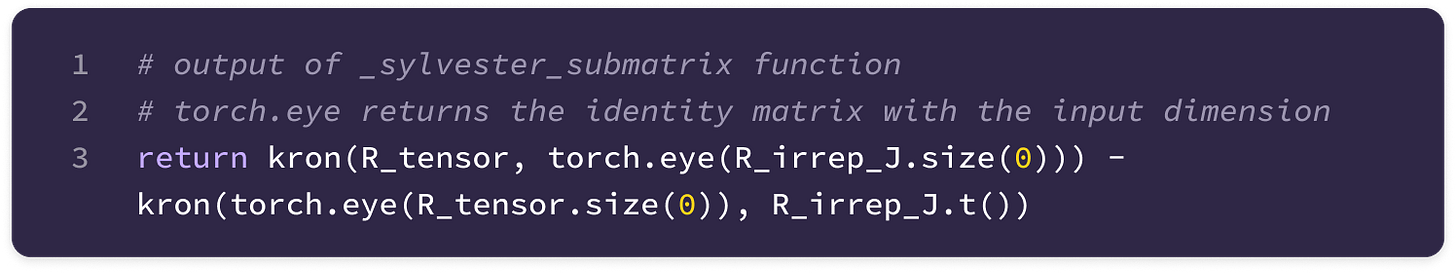

Now, we take the Kronecker product of the (2J+1) x (2J+1) identity matrix with the (2l+1)(2k+1) x (2l+1)(2k+1) Kronecker product of types l and k Wigner-D matrices and subtract the Kronecker product between the (2J+1) x (2J+1) transposed type-J Wigner-D matrix and the (2l+1)(2k+1) x (2l+1)(2k+1) identity matrix.

The complementary dimensions of the identity matrices turn A and B into (2l+1)(2k+1)(2J+1) x (2l+1)(2k+1)(2J+1) matrices that can subtracted element-wise from each other and used to solve the Sylvester equation.

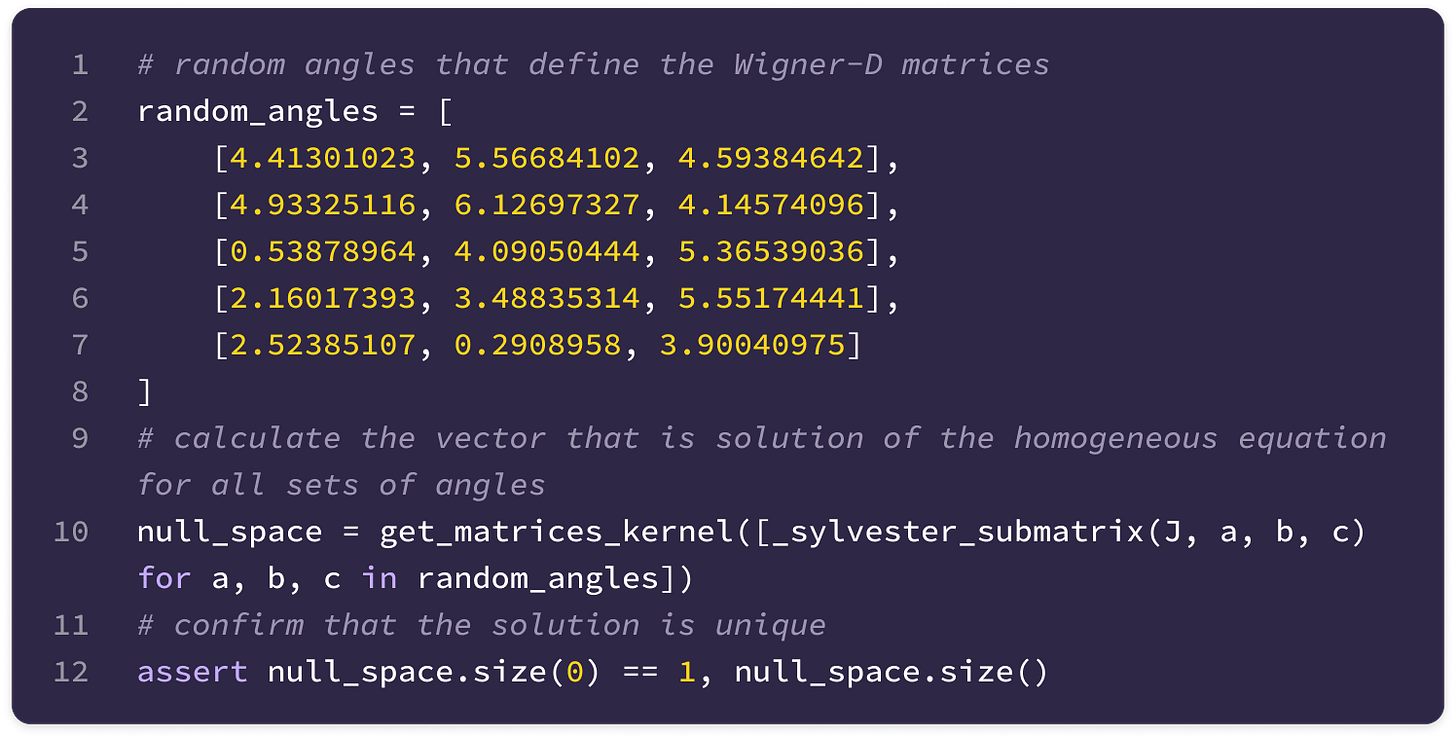

\((\mathbf{I}_{(2J+1)\times(2J+1)}\otimes (\mathbf{D}_k(g)\otimes\mathbf{D}_l(g)))-(\mathbf{D}_J(g)^{\top}\otimes\mathbf{I}_{(2l+1)(2k+1)\times (2l+1)(2k+1)})\)Next, we call the function defined in steps 1 and 2 to generate the Kronecker product matrix for five sets of random angles and calculate the vectors in the null space of all five matrices. Using multiple sets of random angles ensures the solution is unique and can be used as the change-of-basis Q across all rotations in SO(3).

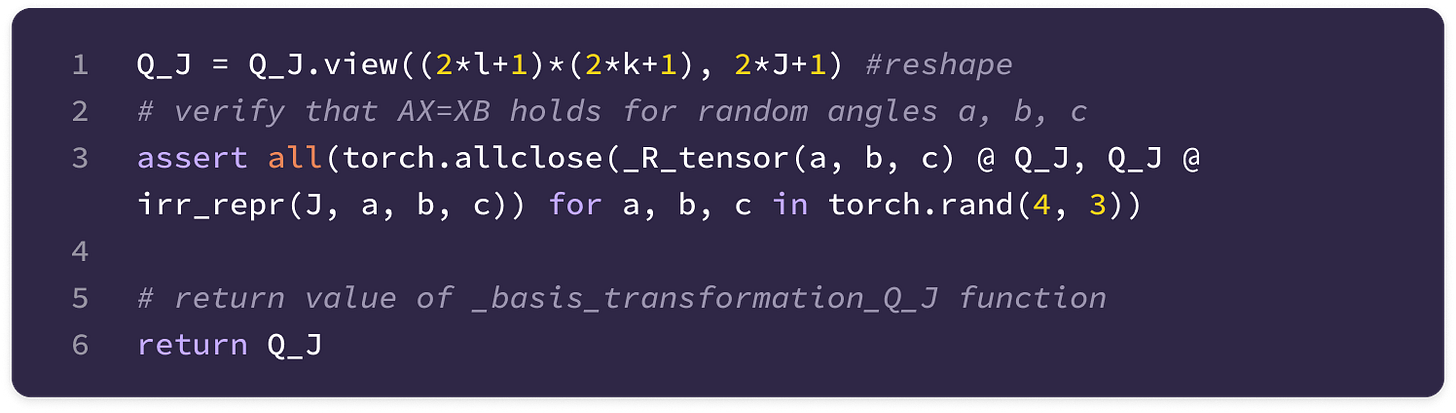

Finally, we reshape the vector into the matrix Q transpose with dimensions (2l+1)(2k+1) x (2J+1) and verify that it satisfies the homogeneous equation below for randomly generated angles.

\((\mathbf{D}_k(g)\otimes\mathbf{D}_l(g))\mathbf{Q}^{lk\top}_J-\mathbf{Q}^{lk\top}_J\mathbf{D}_J(g)=0\)

Now, we must compute the spherical harmonics projections of the angular unit displacement vector. Given that the spherical harmonic function must be computed for all 2J+1 values of m corresponding to all 2min(l,k)+1 values of J needed for all transformations between pairs of input and output degrees for every edge in the graph, the number of computations increases quickly with larger and more complex graphs.

To speed up computation, the SE(3)-Transformer precomputes the spherical harmonic projections for values of J up to double the maximum feature degree (since J has a maximum value of k + l) for every edge in the graph using recursive relations of the associated Legendre polynomials (ALPs).

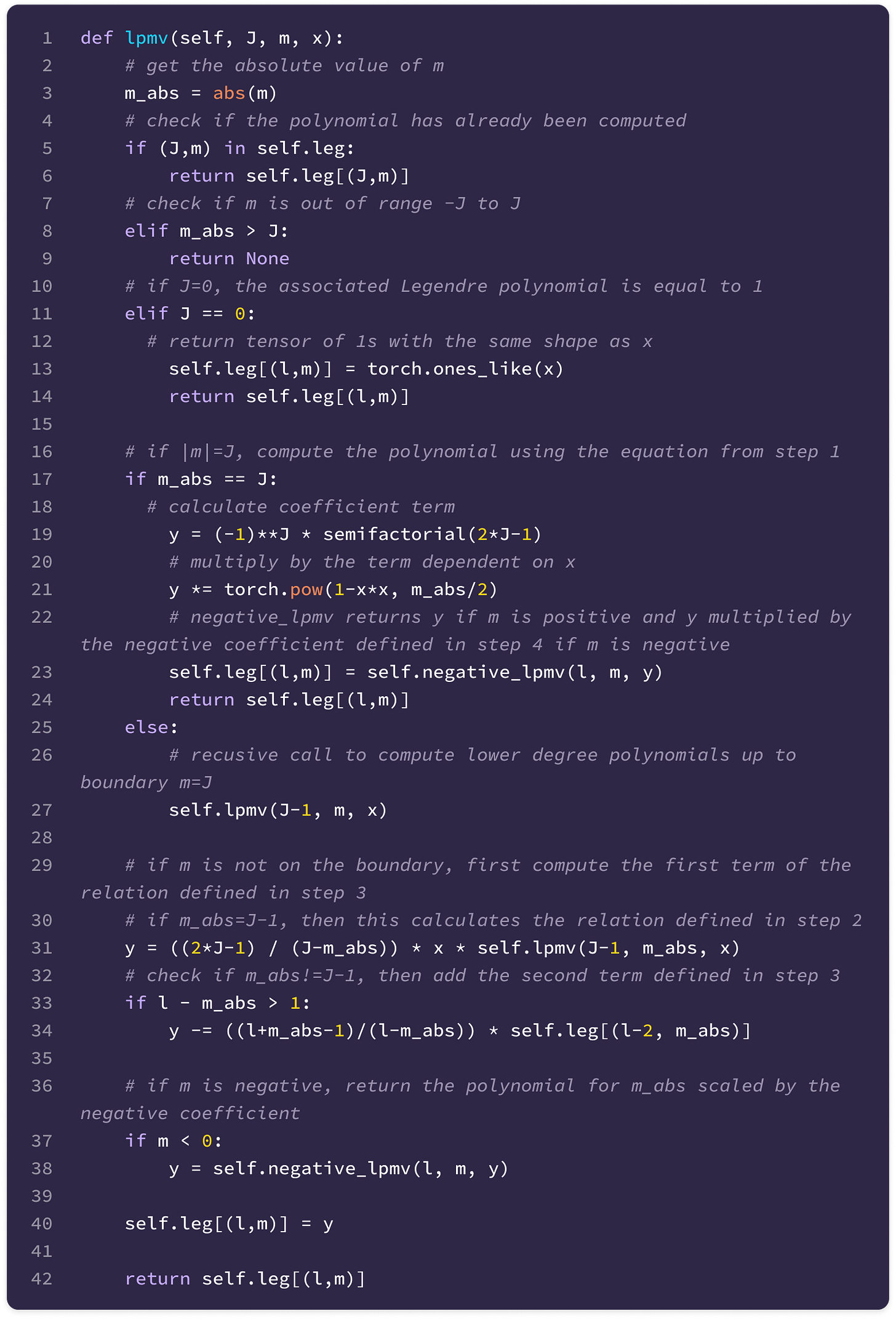

The ALP term of the spherical harmonic equation is the most computationally intensive and needs to be recomputed for every edge of the graph since it is dependent on x=cos(θ), where θ is the polar angle of the angular unit vector. Using recursive relations, we only need to compute the ALP for boundary values of m=J, and the remaining polynomials can be derived from recursively combining the previously computed polynomials and storing them to compute the next set of polynomials.

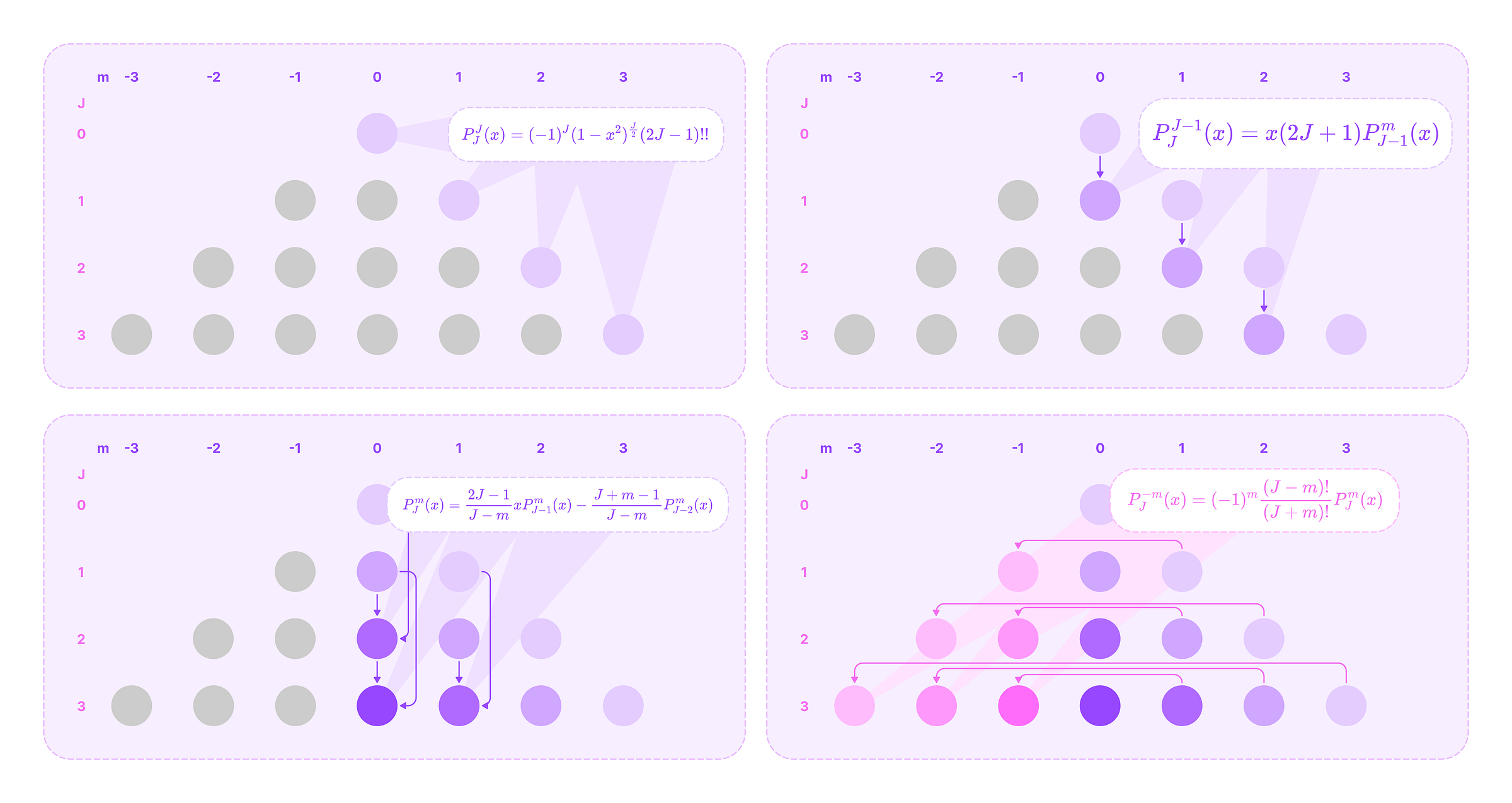

The recursive computation of all non-zero ALPs for all values of J and m involves only three equations applied in the following sequence of steps:

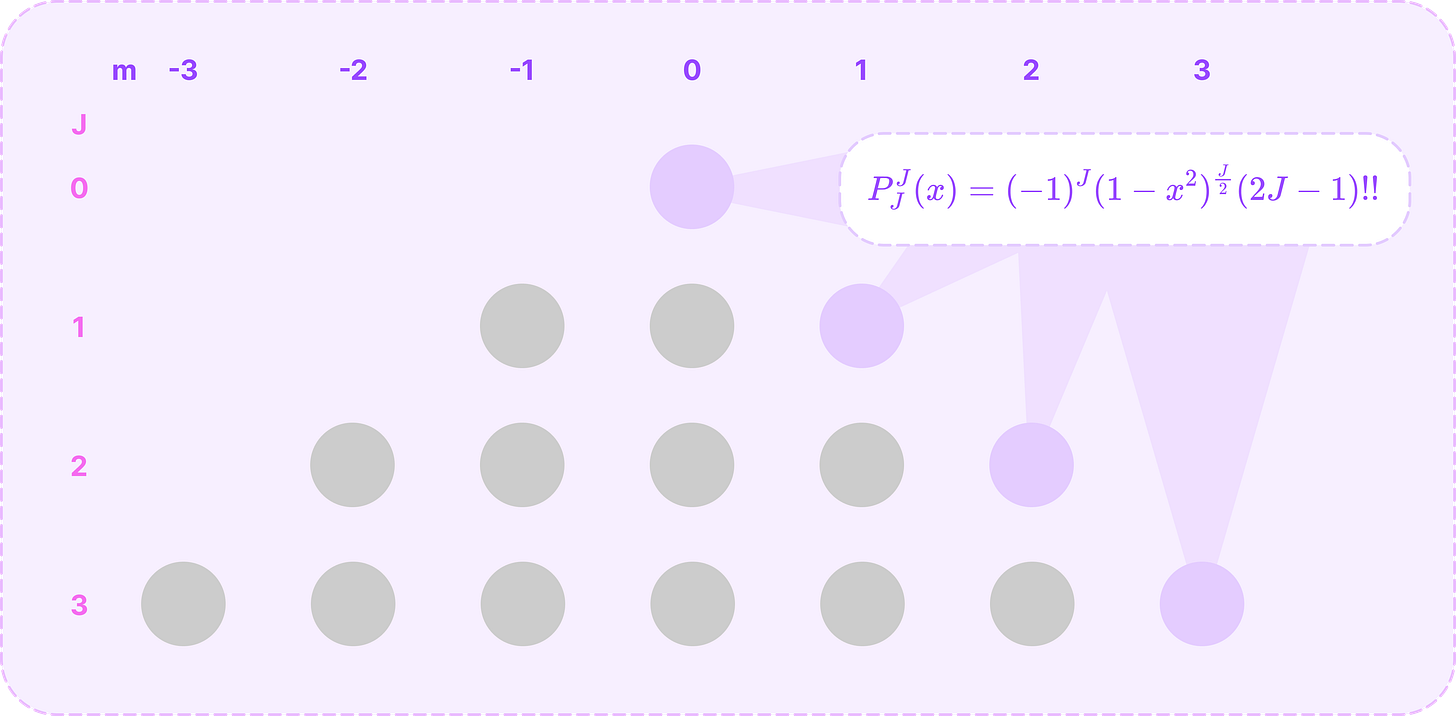

When m=J, which is the maximum value of m where the ALP is non-zero, we can calculate it directly with the following equation:

\(P^J_J(x)=(-1)^J(1-x^2)^\frac{J}{2}(2J-1)!!\tag{$m=J$}\)where x!! is the semi-factorial given by:

\(x!! = x(x-2)(x-4)\dots\)Notice that the Legendre polynomial in this equation is a constant since taking the mth derivative reduces the degree to 1.

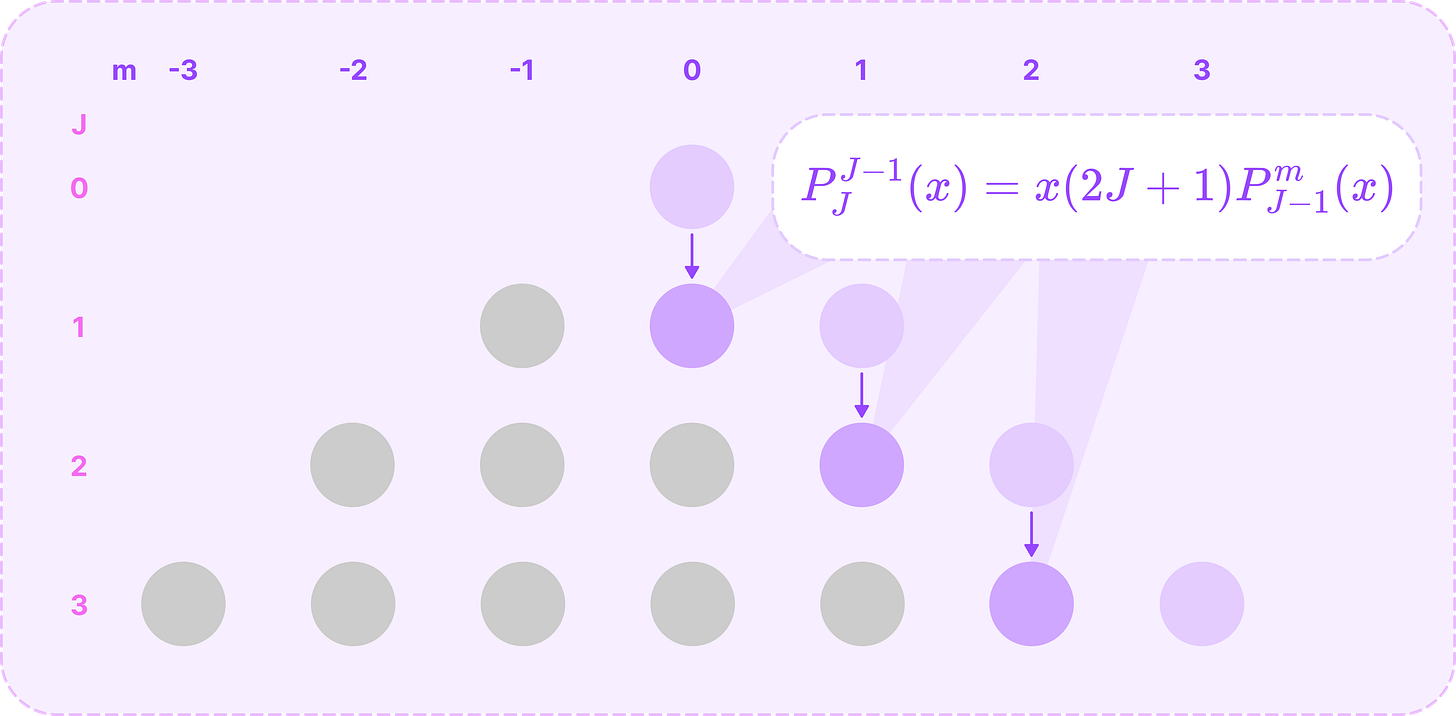

Then, we can compute the polynomials for when m=J-1 from the polynomials stored from step 1 using the following recursive relation:

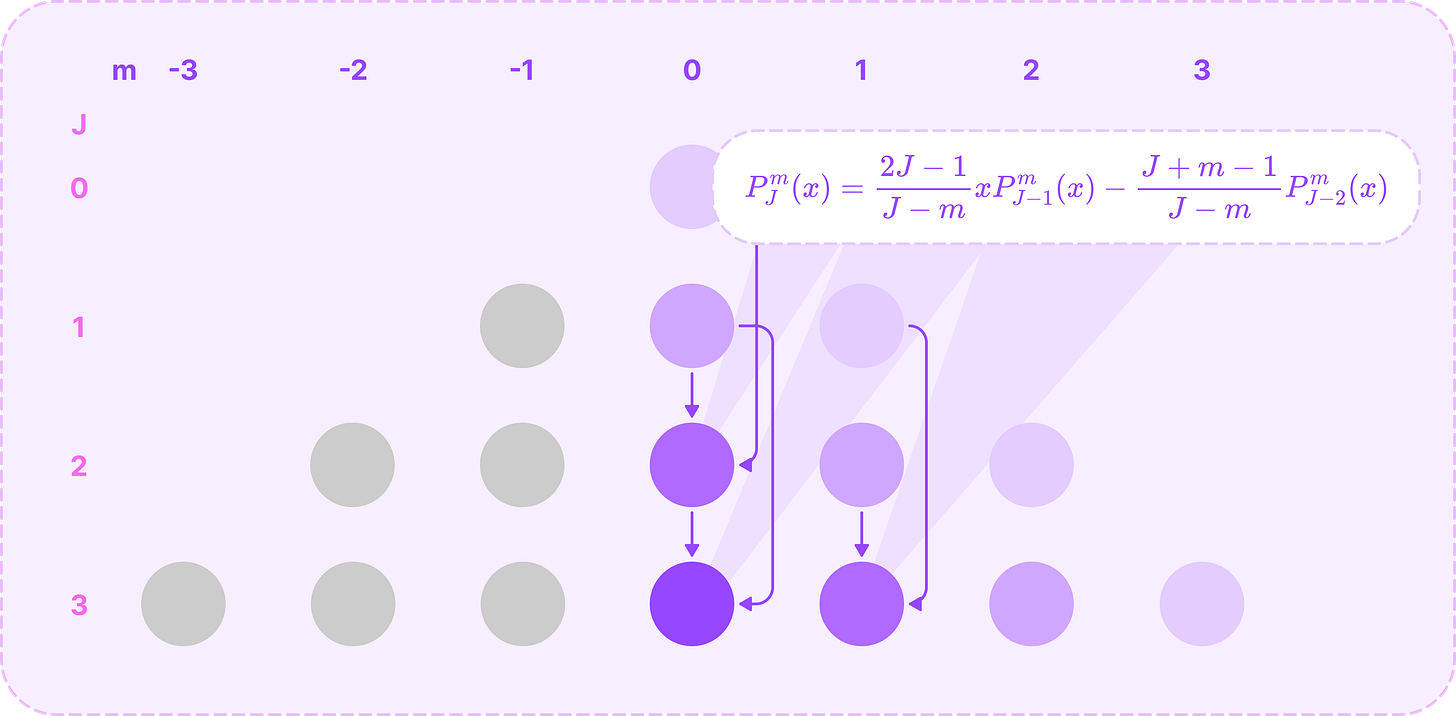

\(P^{J-1}_{J}(x)=x(2J+1)P^m_{J-1}(x)\tag{$m=J-1$}\)For the remaining ALPs with non-negative values of m, we can compute it recursively from the two preceding ALPs with order m and degrees J-1 and J-2 using the recursive relation below:

\(P^m_{J}(x)=\frac{2J-1}{J-m}xP^m_{J-1}(x)-{\frac{J+m-1}{J-m}P^m_{J-2}(x)}\tag{$m\geq 0$}\)Notice that the recursive relation for step 2 is just the first term of this equation, simplified for m=J-1.

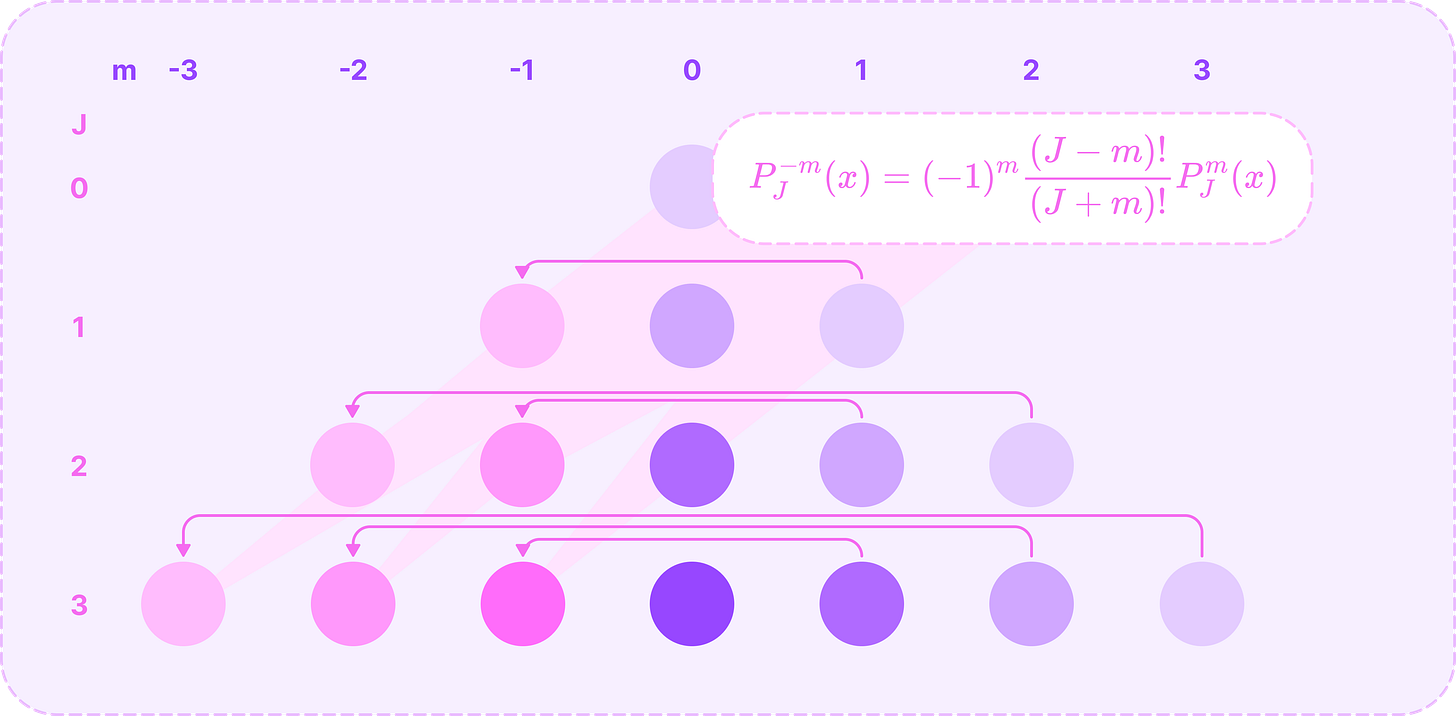

Finally, we can compute the ALPs for all negative values of m from the ALP of its positive counterpart using the following relationship:

\(P^{-m}_J(x)=(-1)^m\frac{(J-m)!}{(J+m)!}P^m_J(x)\)In this equation, the fraction term can be written in terms of the inverse of a falling factorial from (J+m) to (J-m+1):

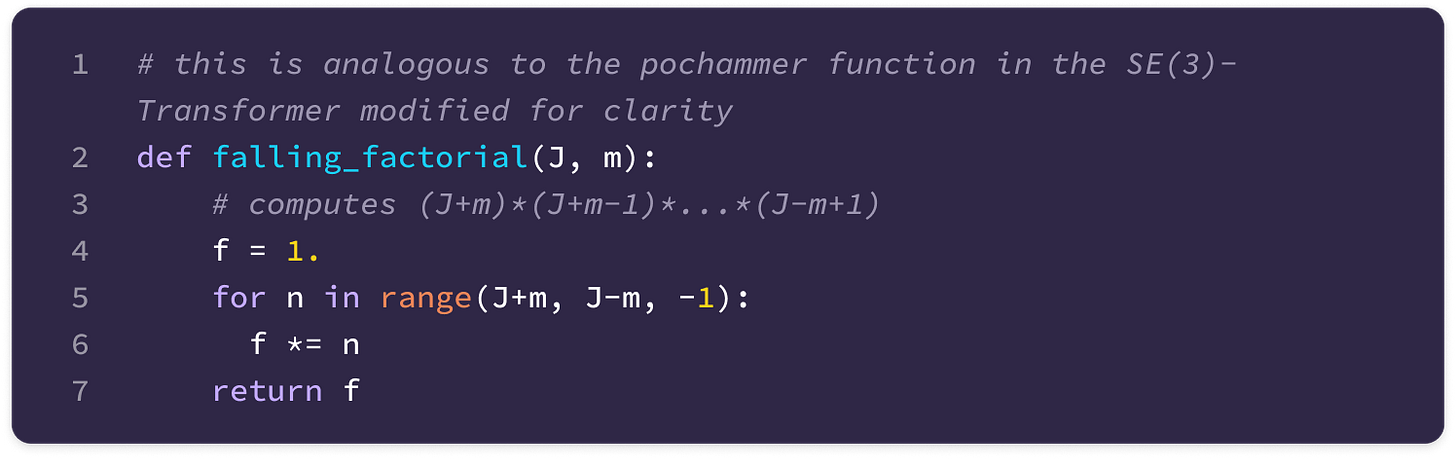

\(\begin{align}\frac{(J-m)!}{(J+m)!}&=\frac{\cancel{(J-m)}\cdot \cancel{(J-m-1)}\cdot \ldots \cdot \cancel{1}}{(J+m)\cdot(J+m-1)\cdot \ldots\cdot\cancel{(J-m)}\cdot\cancel{(J-m-1)}\cdot \ldots\cdot \cancel{1}}\nonumber\\&=\frac{1}{(J+m)\cdot (J+m-1)\cdot\ldots \cdot(J-m+1)}\nonumber\end{align}\)We can calculate the falling factorial for a given value of J and m using the following helper function:

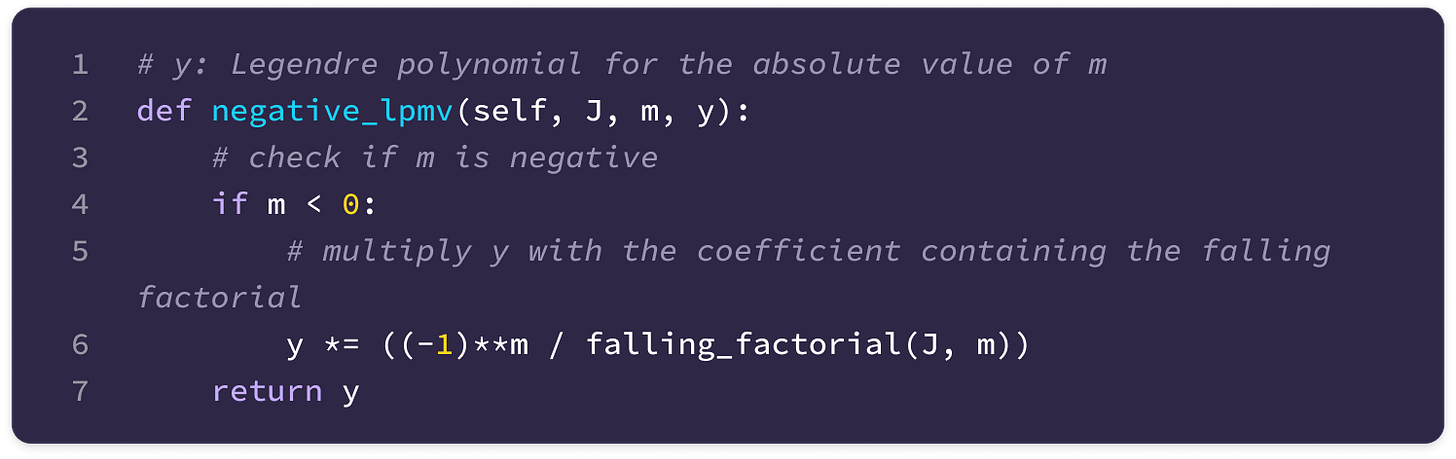

If m is negative, the function below takes the polynomial for the absolute value of m and returns the polynomial scaled by the negative coefficient:

Now that we understand how the recursive relations work, we can implement the code that returns the ALP for a given m and J either by applying the recursive relations using previously stored ALPs or making a recursive call to compute the ALP for m and J-1 until the boundary where m=J:

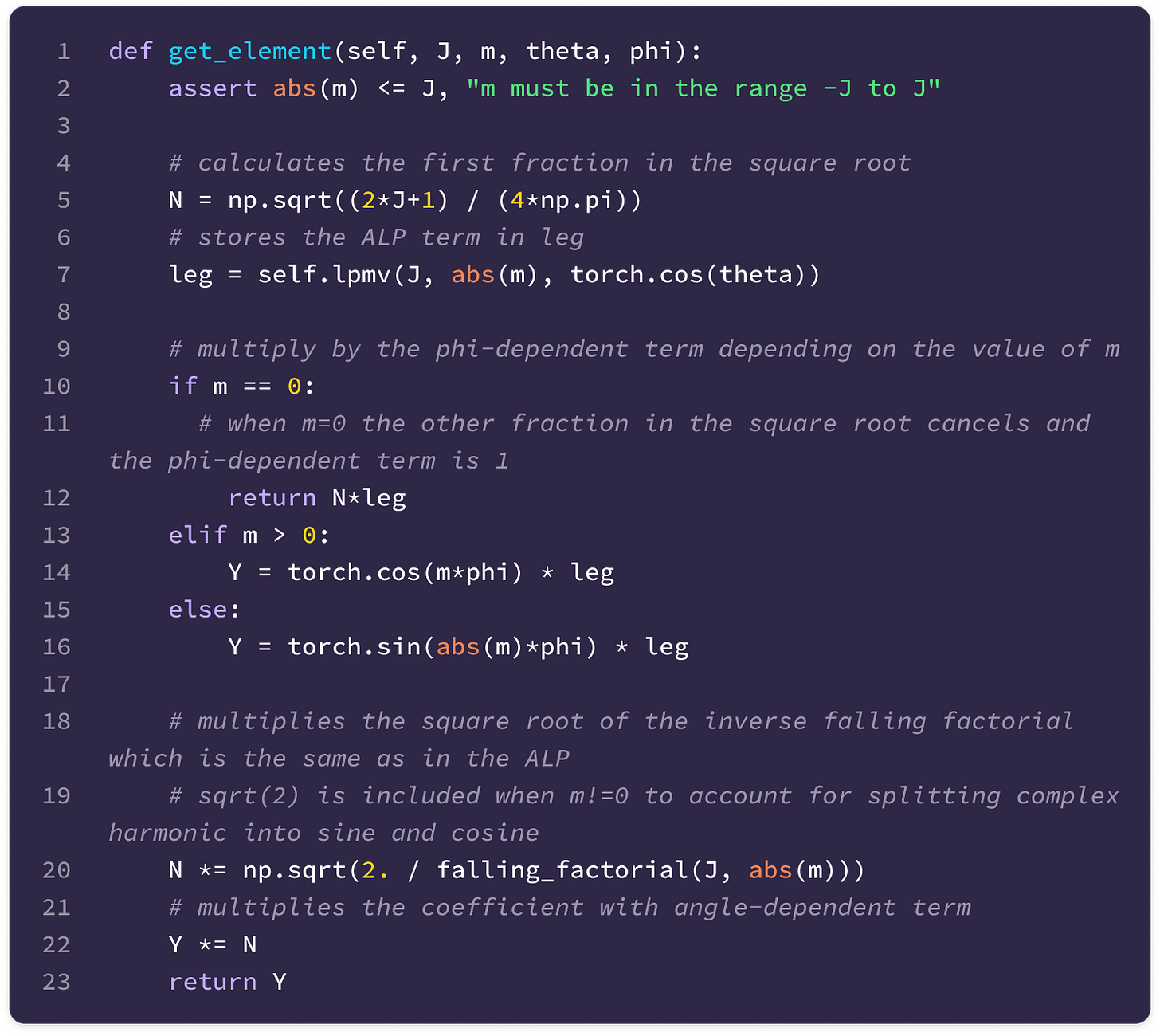

After computing the ALP, we can calculate the real spherical harmonics from the associated Legendre polynomials with the following equations, depending on the sign of m:

This can be converted into the following function that returns the spherical harmonic given values of J, m, θ (theta), and φ (phi):

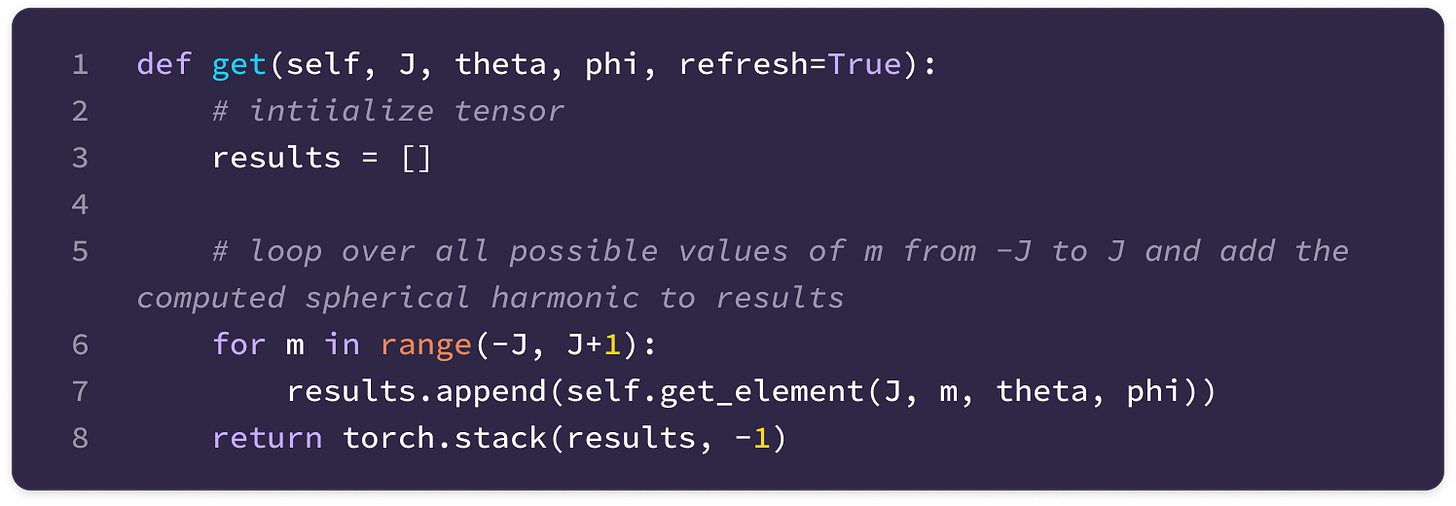

Now, we can generate the tensor of spherical harmonics for all values of m corresponding to a given J, which is a type-J spherical tensor:

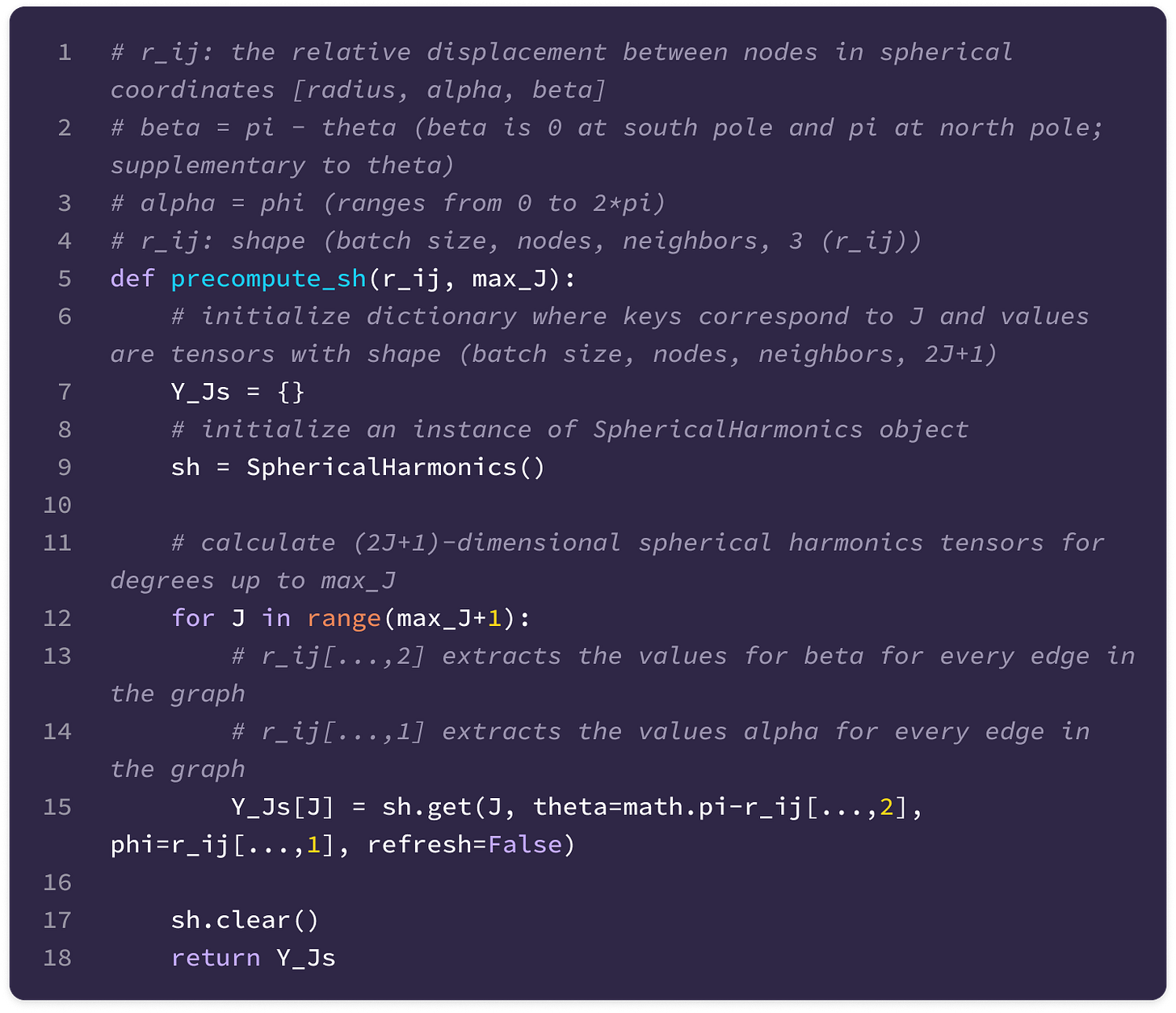

The function below is called to calculate the spherical harmonics given the relative displacement vector between nodes in spherical coordinates, using the angle conventions from the implementation of the 3D steerable CNN where each edge is represented by the radius, beta (angle from south pole), and alpha (same as phi in spherical harmonics):

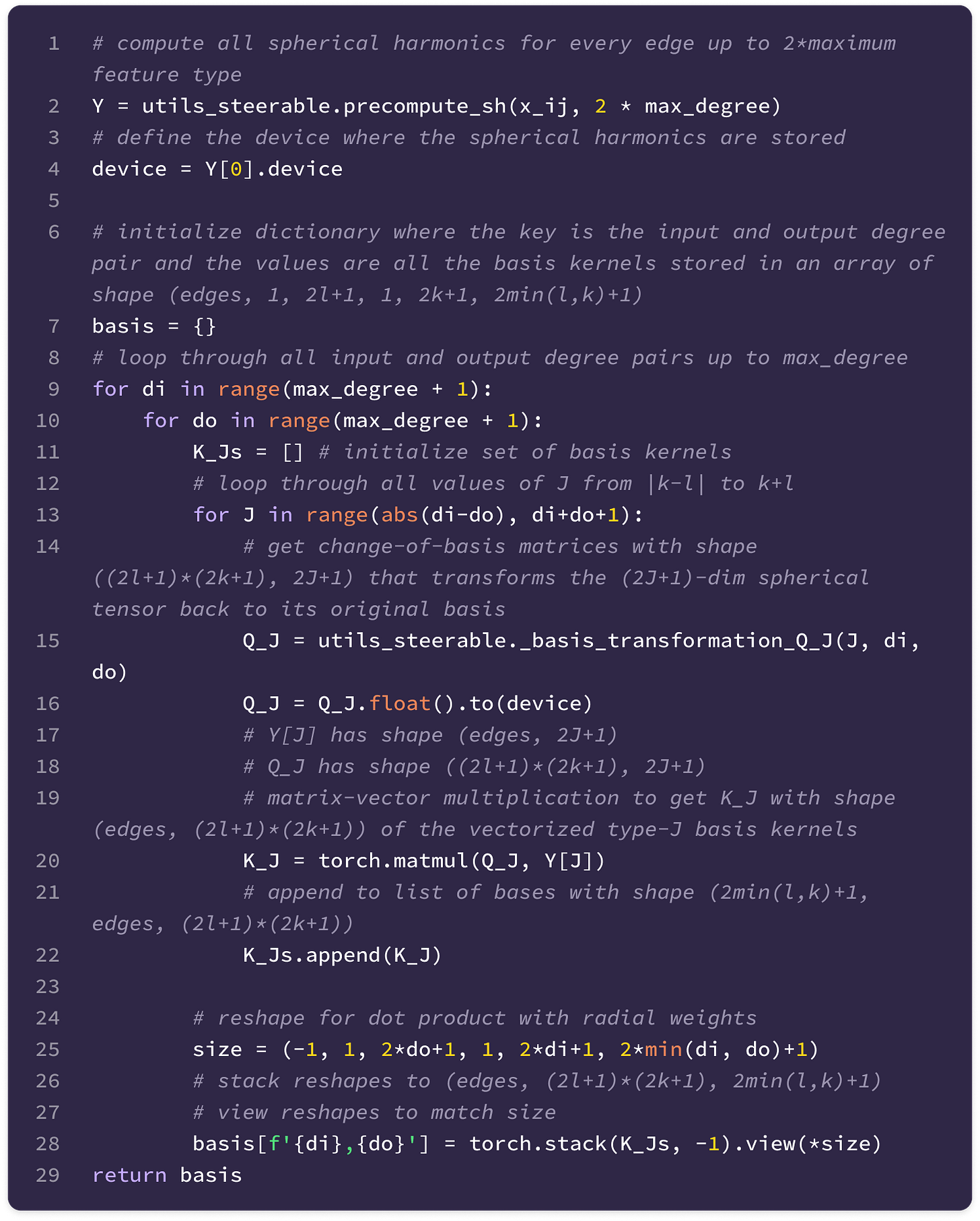

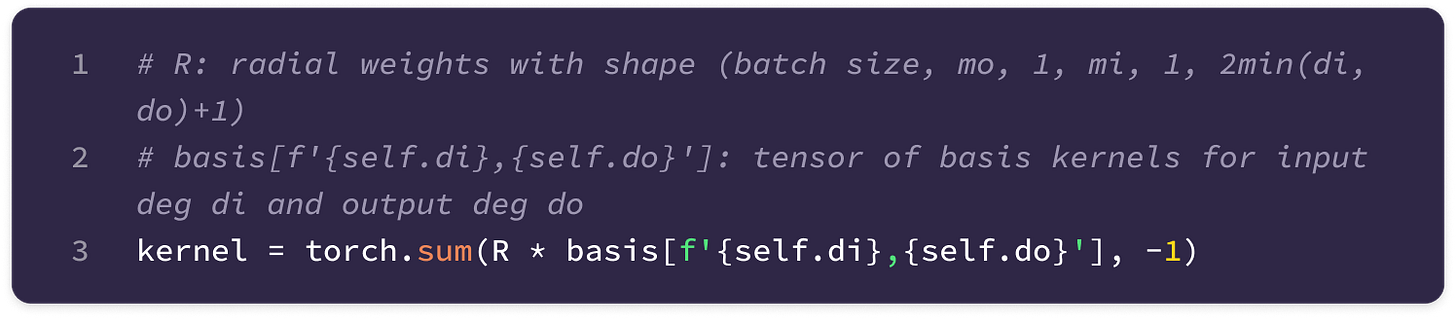

Finally, the basis kernels for all values of J up to a maximum feature degree are computed and stored so that the kernels across every layer in the model transforming from type-l to type-k features can be derived simply by taking a linear combination of stored basis kernels for J from |k-l| to k+l.

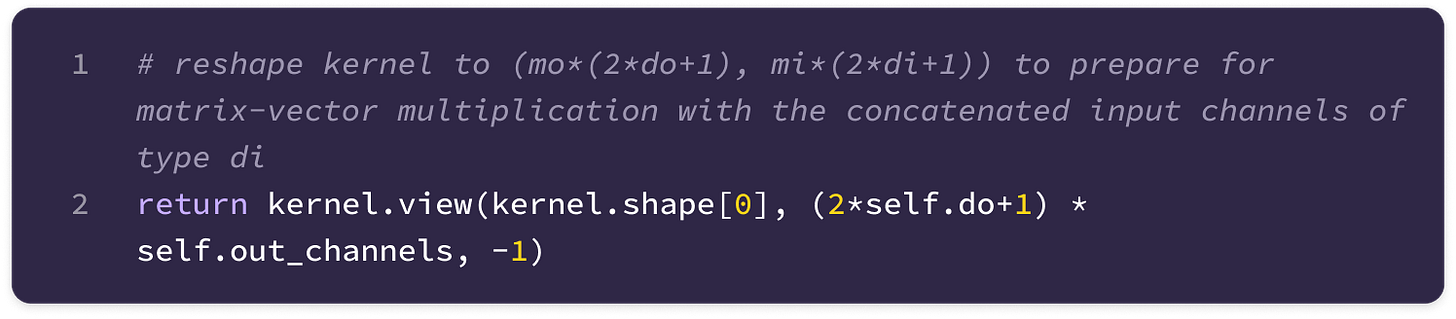

The shape of the set of basis kernels for a given transformation between types k and l is (1, 2l+1, 1, 2k+1, num bases) where the singleton dimensions will be broadcasted into the number of input and output channels when taking the weighted sum to generate a unique kernel that transforms from a specific type-k input channel to a specific type-l output channel.

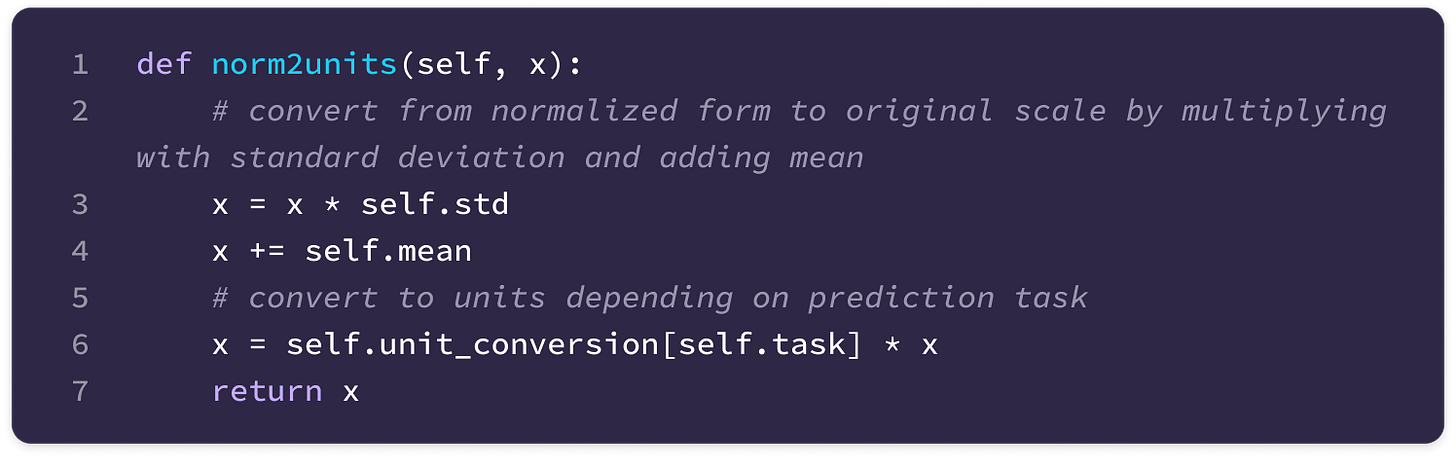

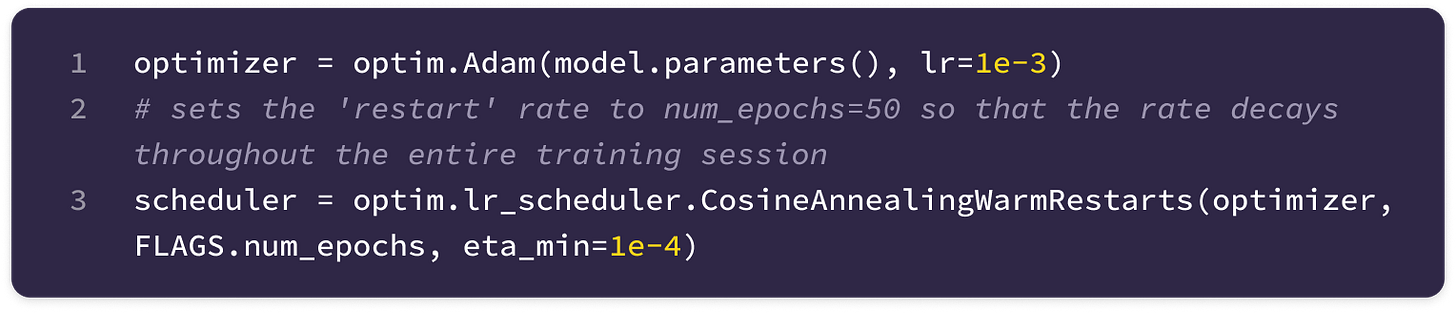

Constructing the Radial Function

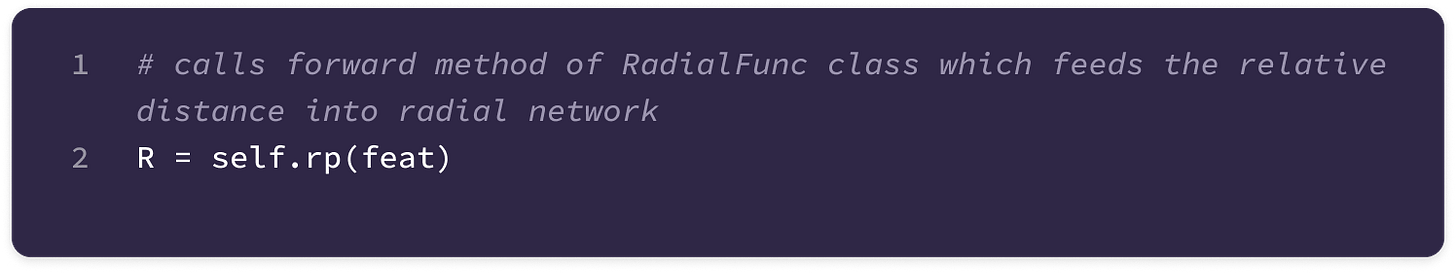

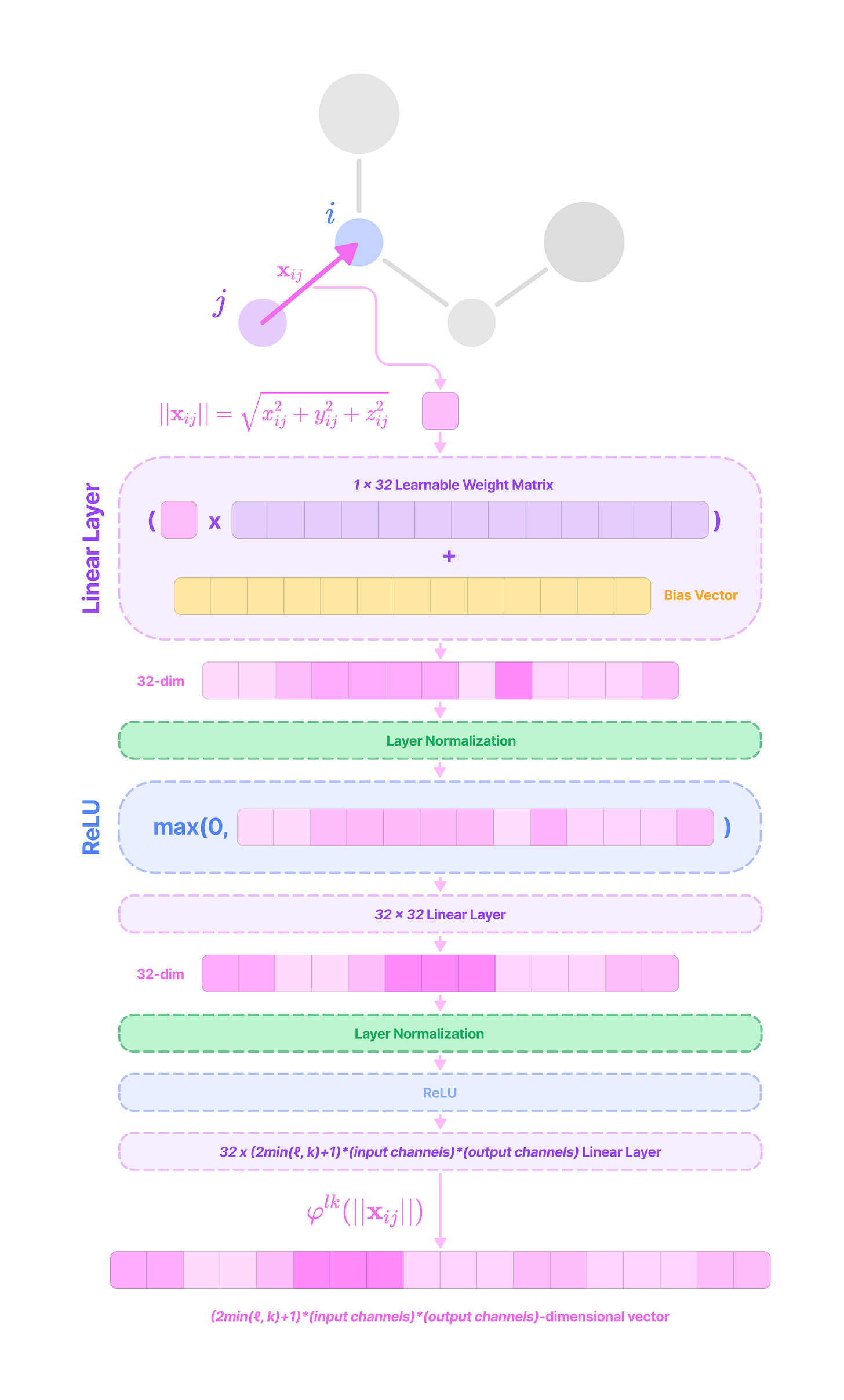

We can construct equivariant kernels by scaling the basis kernels with learned radial functions that transform the radial distance between nodes a set of weights. This function is a feed-forward network (FFN) that is effective in learning complex dependencies between the basis kernels and the distance between nodes.

Since each basis kernel represents a unique function that changes with a defined pattern on the unit sphere (defined by the spherical harmonic), we need to incorporate learnable parameters when constructing the equivariant kernels so that they can learn to detect specific feature motifs represented by distinct weighted combinations of the basis kernels.

The radial function not only allows us to incorporate distance dependence, which contributes to the strength of relationships between nodes, but it also allows the model to learn more complex dependencies on the node and edge features through backpropagation.

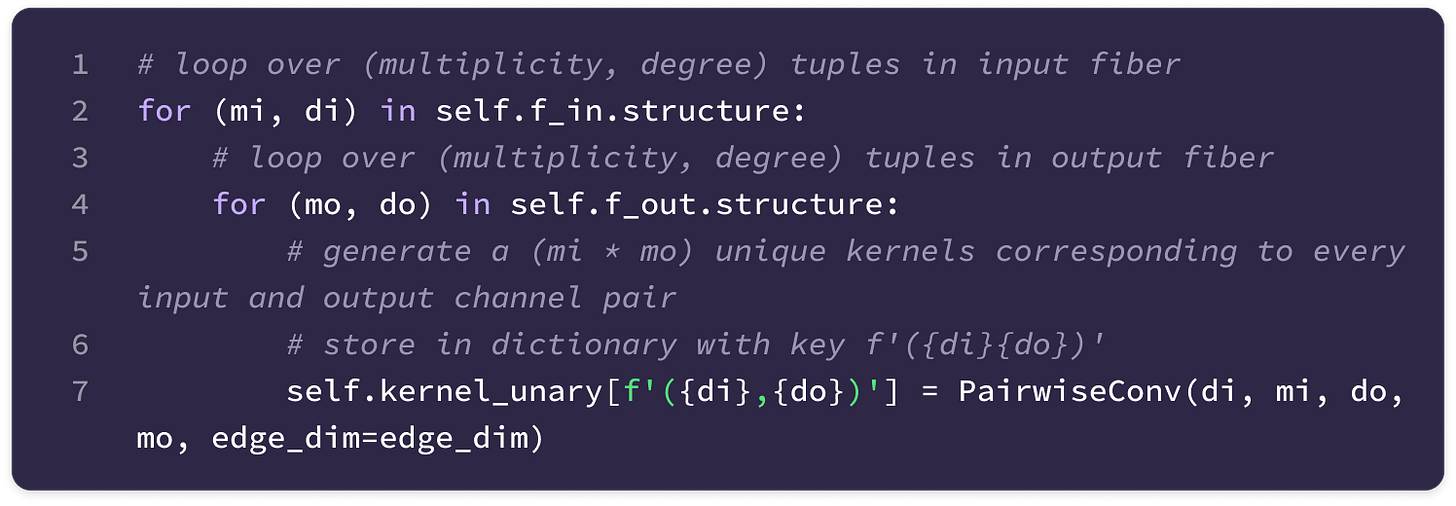

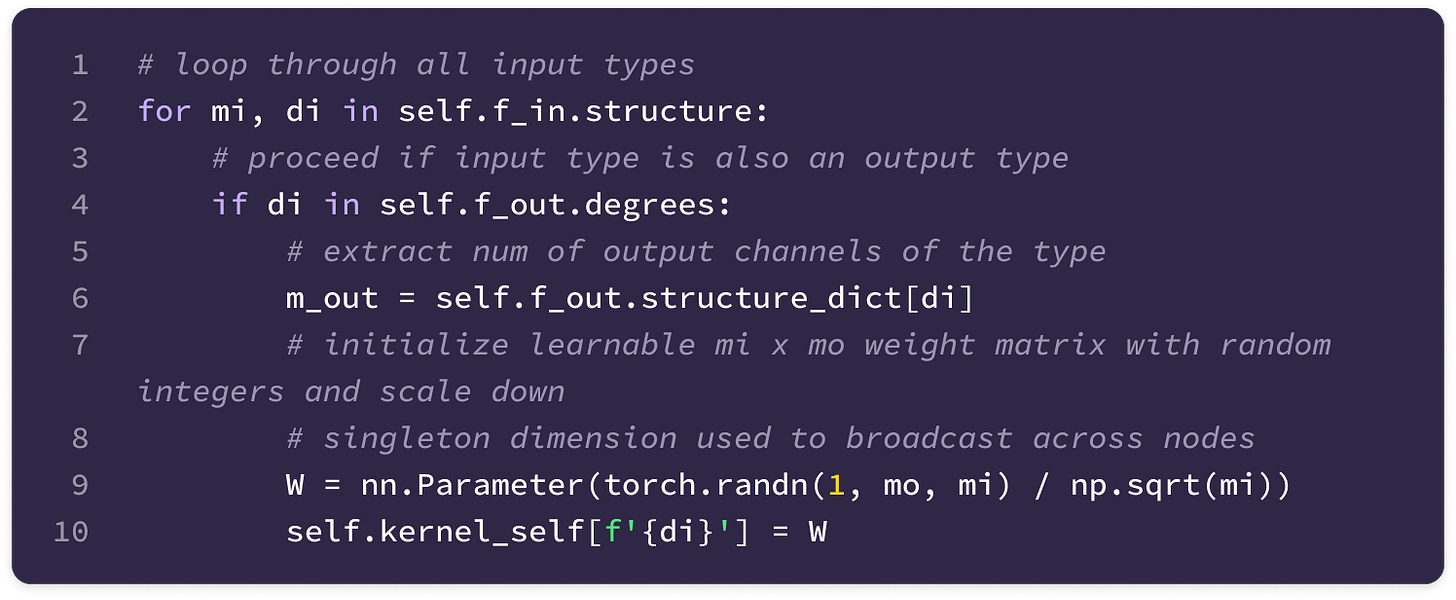

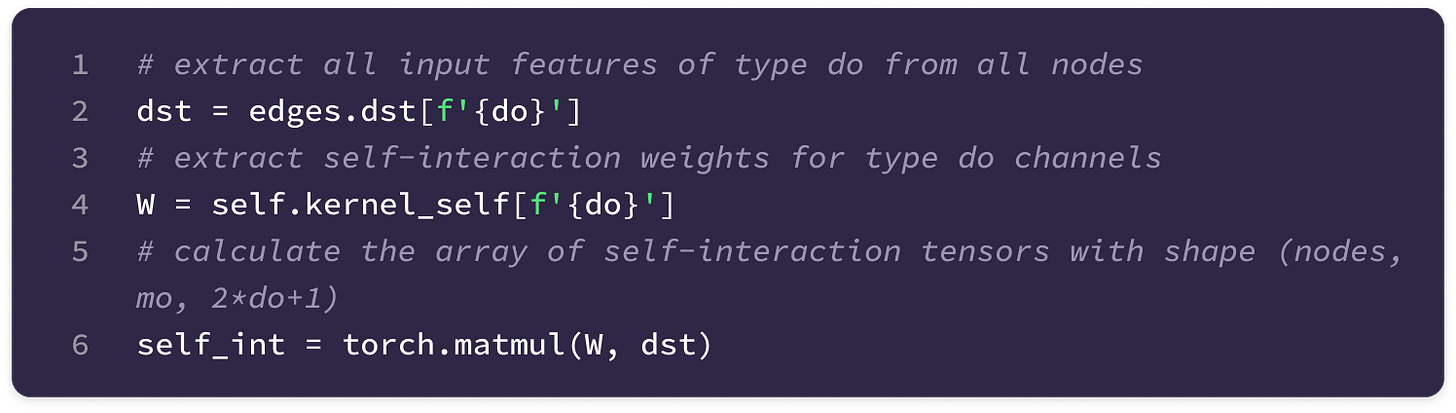

To construct the kernel that transforms type-k to type-l features, we define a radial function that takes the scalar distance as input and returns a weight for each basis kernel for values of J from |k-l| to |k+l|. For multi-channel features, the radial function not only generates a unique weight for each value of J but also for every input-to-output channel pair. This means that the output of the function is a (num_bases)*(input_channels)*(output_channels)-dimensional vector of weights.

We can denote a single weight produced by the function with the following notation:

Since rotating a vector does not change its length, the radial distance is SO(3)-invariant. This means the output of the radial function is invariant to rotations of the input graph for any function definition:

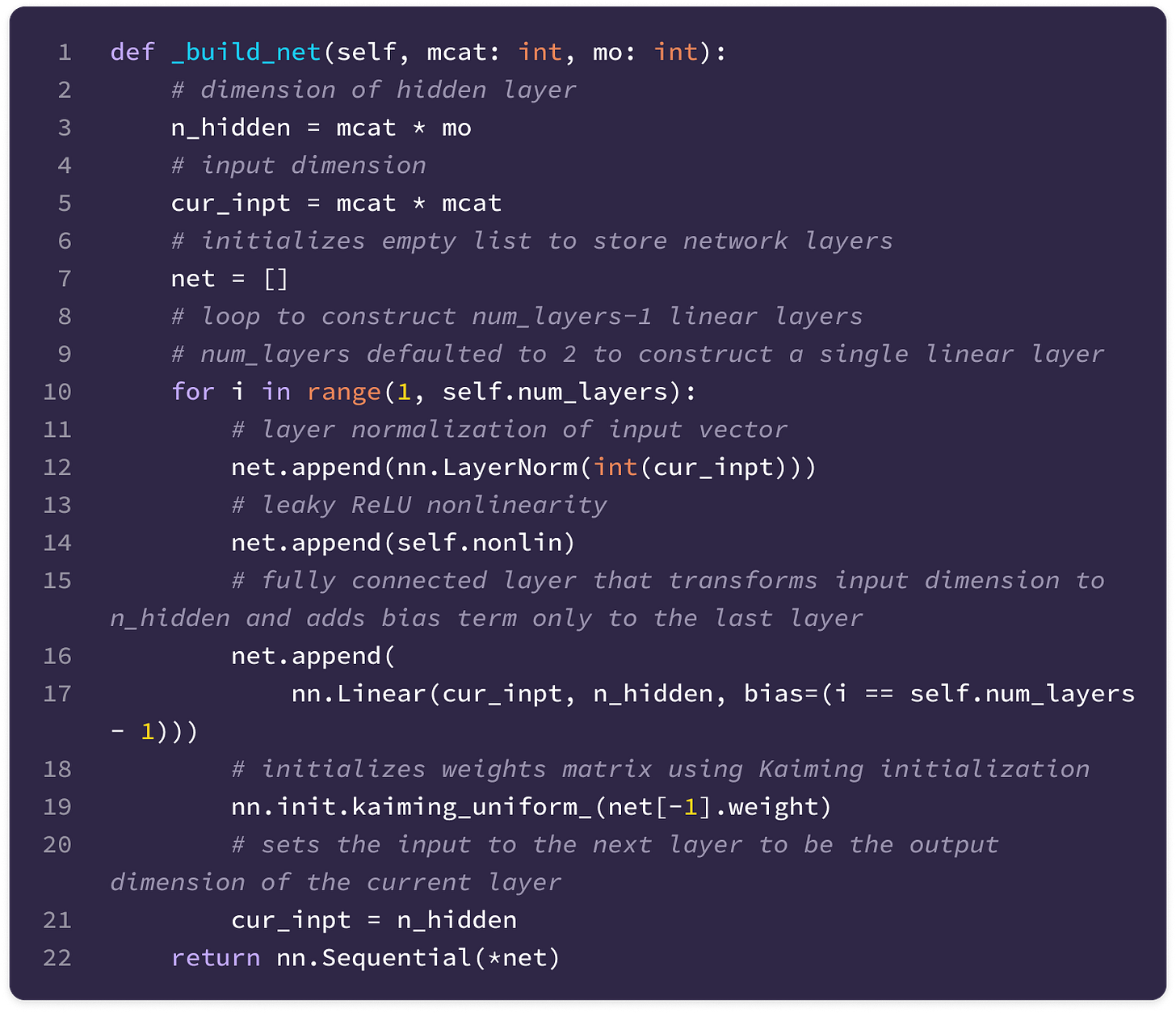

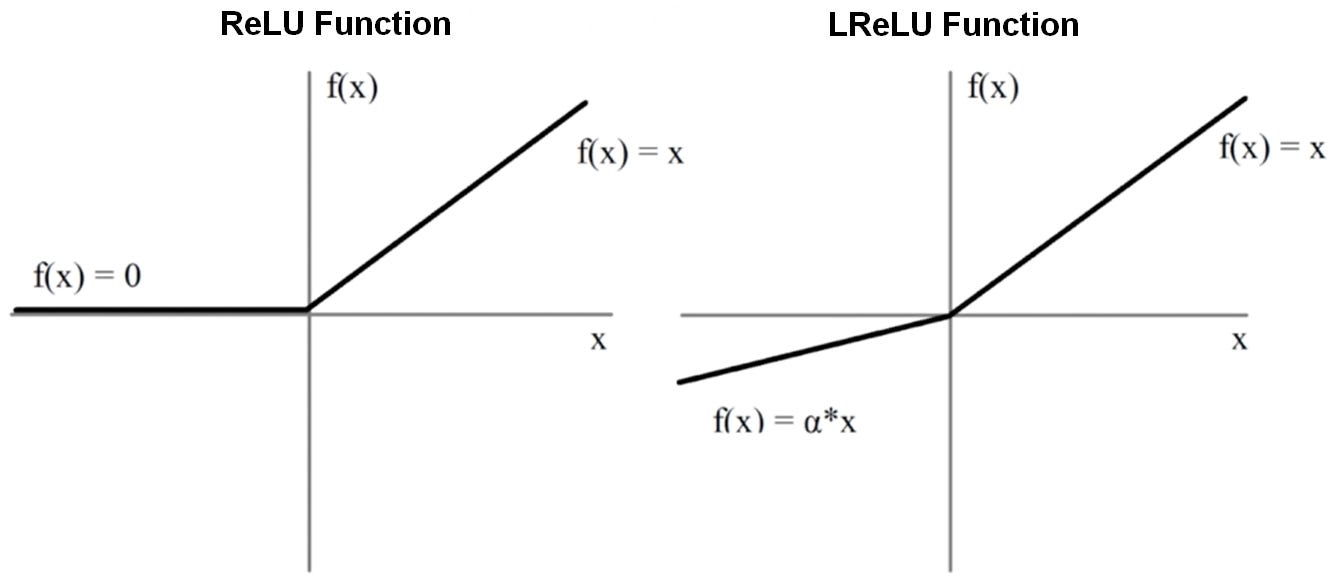

Thus, we define the radial function as a feed-forward network (FFN) with multiple linear layers interspersed with nonlinear activation functions to maximize the model’s ability to learn and detect complex feature motifs. The linear layers of an FFN can transform a vector from one dimension to another via multiplication with a (output dimension) x (input dimension) matrix of learnable weights and the addition of a bias vector with the same dimension as the output. Linear layers are followed by nonlinear activation functions like ReLU or LeakyReLU that capture more complex dependencies across weights.

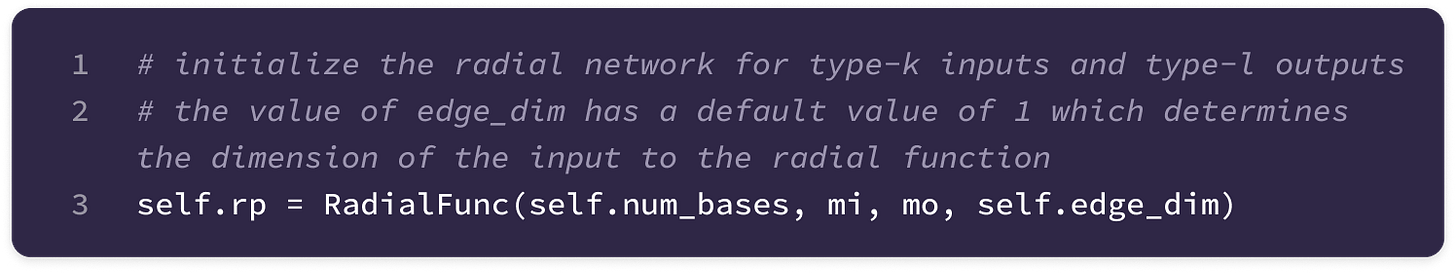

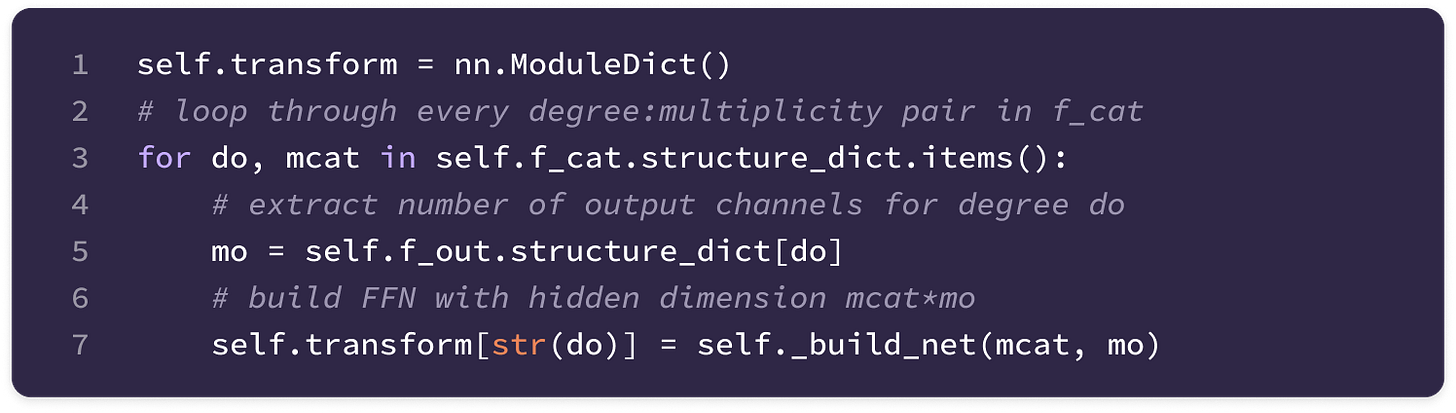

A new network is constructed for every ordered pair (k, l) of input and output feature types and every equivariant layer or type of embedding (attention layers have different networks for generating key and value embeddings). Each network is used across all nodes in the graph.

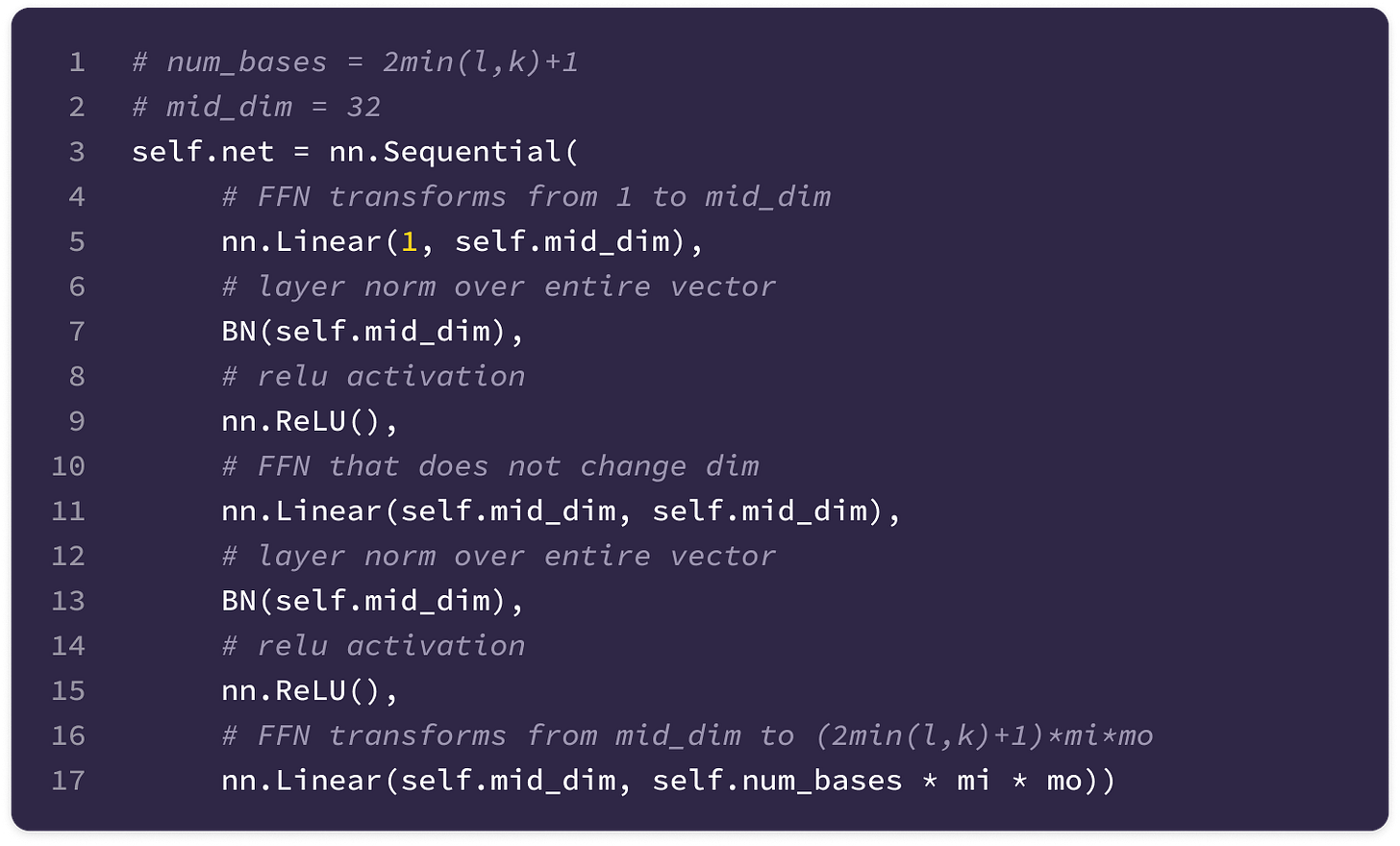

The FFN used in the SE(3)-Transformer consists of the following layers:

The first linear layer transforms the input into a 32-dimensional vector by multiplication with a 32 x 1 learnable weight matrix and addition with a 32-dimensional bias vector.

\(\mathbf{W}_1||\mathbf{x}_{ij}|| + \mathbf{b}_1\tag{$\mathbf{W}_1\in \mathbb{R}^{32\times 1}$}\)A layer normalization step transforms the mean to 0 and the variance to 1 across the values in the 32-dimensional vector.

A non-linear ReLU activation function is applied element-wise. For each element, the ReLU function outputs 0 for negative values or itself for positive values.

\(\mathbf{x}'=\max\left(0,\text{LayerNorm}(\mathbf{W}_1||\mathbf{x}_{ij}|| + \mathbf{b}_1)\right)\)Another FFN block with a linear layer (hidden dimension of 32), normalization step, and ReLU activation function is applied.

A final linear layer transforms the 32-dimensional vector output of the second block into a (2min(l, k)+1)(mi)(mo)-dimensional vector by multiplication with a (2min(l, k)+1)(mi)(mo) x 32 matrix of learnable weights and addition with a (2min(l, k)+1)(mi)(mo)-dimensional bias vector.

\(\begin{align}\varphi^{lk}(||\mathbf{x}_{ij}||)=\mathbf{W}\mathbf{x}'' + \mathbf{b}_2\;\;\;\;(\mathbf{W}_2\in \mathbb{R}^{32\times (2min(l,k)+1)(c_k)(c_l)})\nonumber\end{align}\)

The FNN can be constructed in PyTorch with the following code:

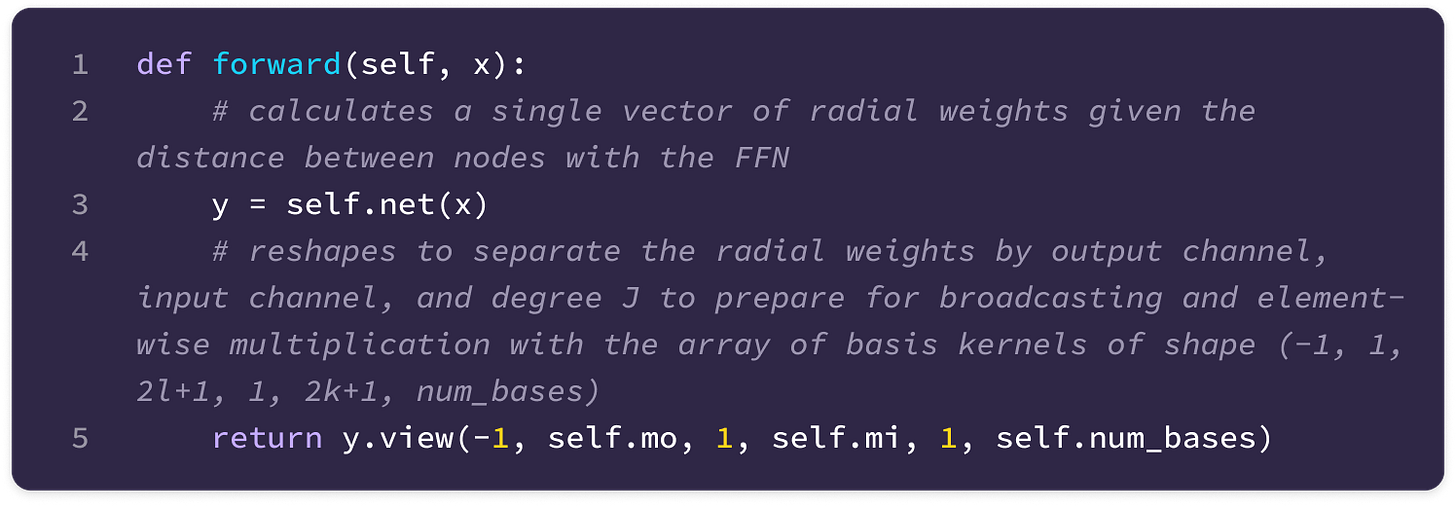

Then, we can generate the radial weights for a given pair of input and output types k, l:

Mechanism of the Equivariant Kernel

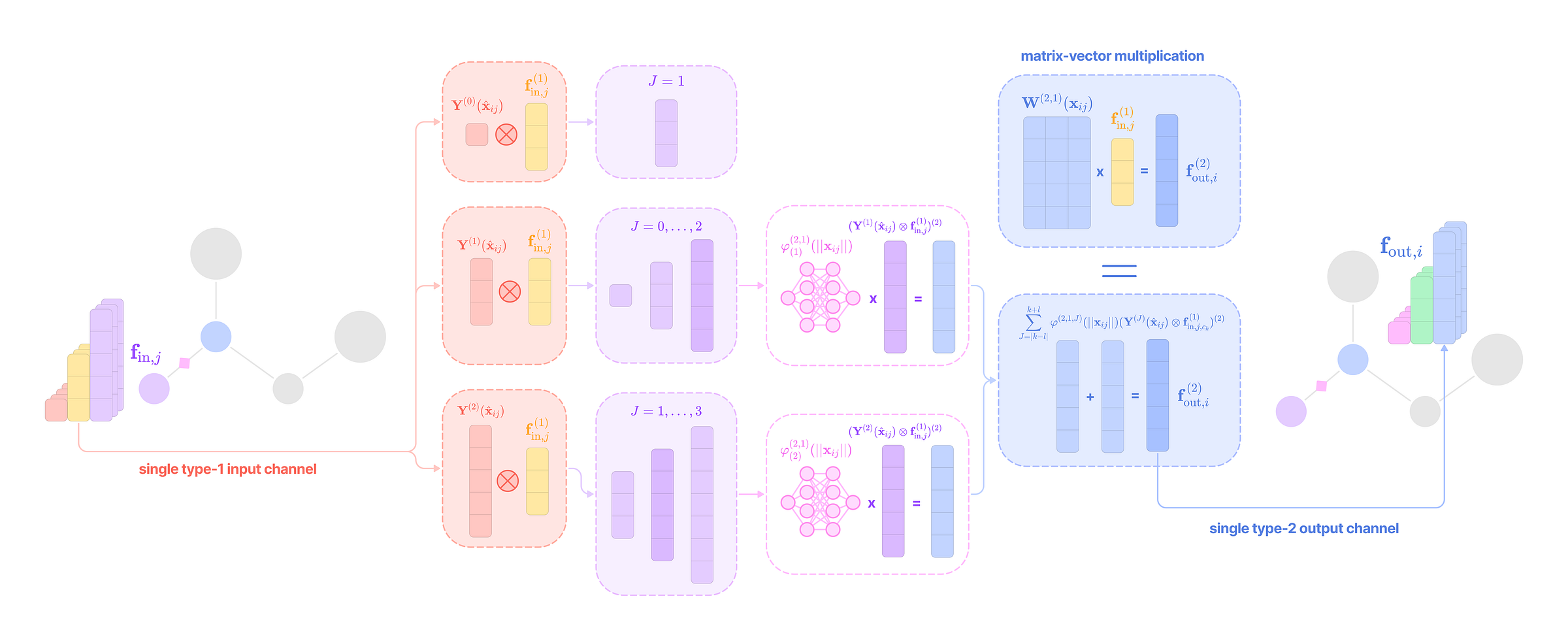

To grasp how the equivariant kernel is able to capture high-frequency rotationally-symmetric patterns in a single matrix-vector multiplication step, let’s break down how to interpret the mechanism of the kernel in terms of tensor products.

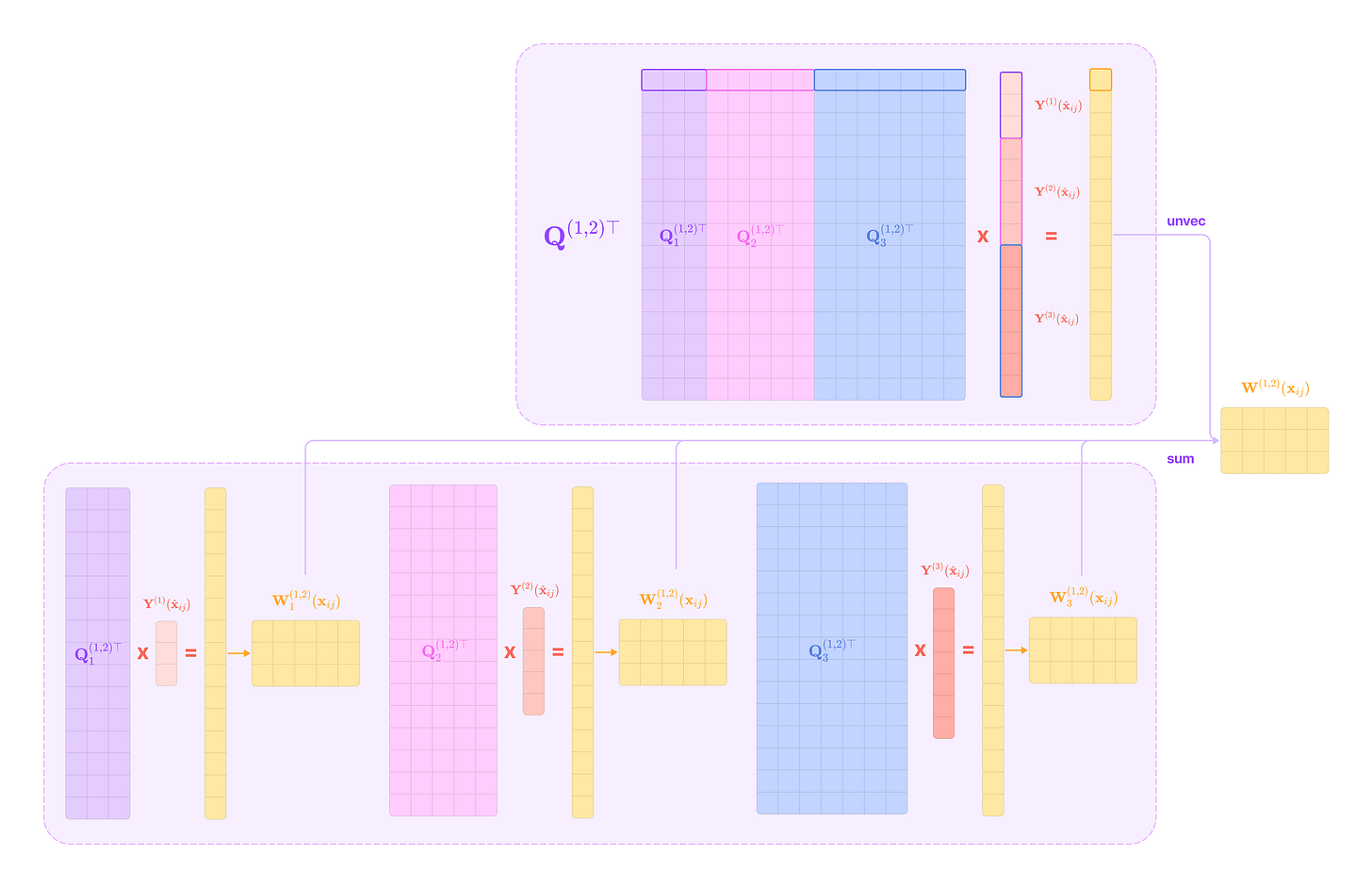

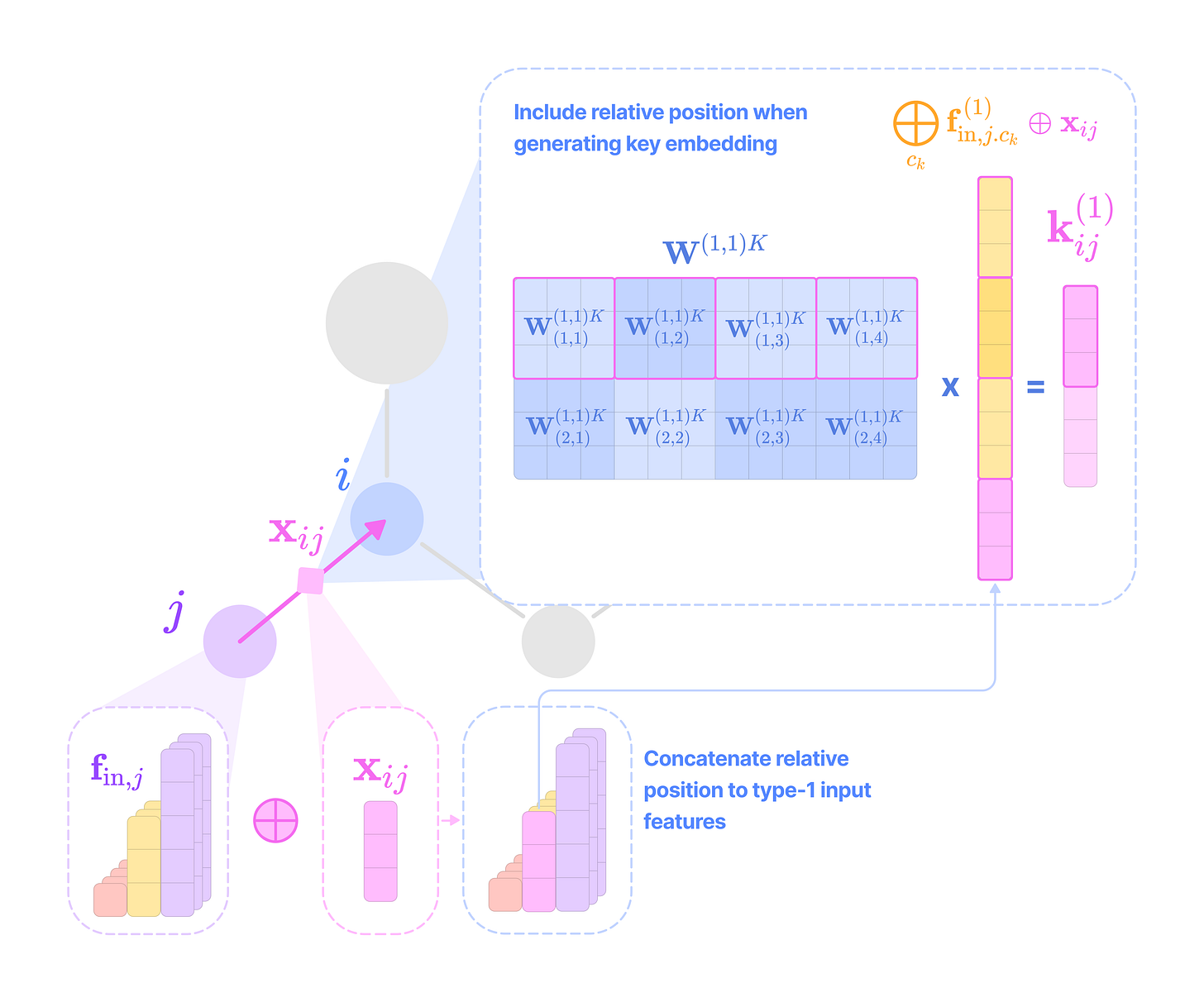

Given that each input-to-output channel pair has a unique radial weight, from now on, we will denote the kernel that transforms the type-k feature at channel c_k in node j for message passing to the type-l feature at channel c_l in node i as:

The weighted kernel acts as an aggregated function that takes multiple tensor products between the type-k input feature tensor and all the type-J projections of the displacement vector.

Intuitively, we can think of the kernel transformation as performing the following operations:

Extracting the type-l tensor component of the tensor product between the type-k feature tensor with the type-J spherical tensor projection of the displacement vector via the Clebsch-Gordan coefficients. Each dimension (indexed by the magnetic quantum number m_l) of the type-l tensor component can be written as:

\((\mathbf{Y}^{(J)}(\hat{\mathbf{x}}_{ij})\otimes\mathbf{f}^{k}_{\text{in,}j,c_k})^{(l)}_{m_l}=\sum_{m=-J}^J\sum_{m_k=-k}^kC^{(l, m_l)}_{(J, m)(k, m_k)}Y^{(J)}_{m}f^{(k)}_{m_k}\)We can write the full type-l tensor as a (2l + 1)-dimensional vector by concatenating all dimensions indexed by m from -l to l :

\((\mathbf{Y}^{(J)}(\hat{\mathbf{x}}_{ij})\otimes\mathbf{f}^{k}_{\text{in,}j,c_k})^{(l)}=\begin{bmatrix}(\mathbf{Y}^{(J)}(\hat{\mathbf{x}}_{ij})\otimes\mathbf{f}^{k}_{\text{in,}j,c_k})^{(l)}_{-l} \\\\ (\mathbf{Y}^{(J)}(\hat{\mathbf{x}}_{ij})\otimes\mathbf{f}^{k}_{\text{in,}j,c_k})^{(l)}_{-l+1} \\ \vdots \\(\mathbf{Y}^{(J)}(\hat{\mathbf{x}}_{ij})\otimes\mathbf{f}^{k}_{\text{in,}j,c_k})^{(l)}_{l} \end{bmatrix}\)Scaling the type-l tensor component by the weight calculated by a learnable function on the radial component of the displacement vector.

\(\varphi^{lk}_{(J,c_l,c_k)}(||\mathbf{x}_{ij}||)(\mathbf{Y}^{(J)}(\hat{\mathbf{x}}_{ij})\otimes\mathbf{f}^{k}_{\text{in,}j,c_k})^{(l)}\)Repeating Steps 1 and 2 for all types-J projections of the angular unit vector ranging from |k - l| to |k + l| and scaling the type-l output by a unique learnable weight. Then, taking the sum of all the weighted type-l components of the tensor products to get the output type-l message for channel c_l from the type-k feature at channel c_k:

\(\mathbf{W}^{lk}_{(c_l,c_k)}(\mathbf{x}_{ij})\mathbf{f}^k_{\text{in,}j,c_k}=\sum_{J=|k-l|}^{k+l}\varphi^{lk}_{(J,c_l,c_k)}(||\mathbf{x}_{ij}||)(\mathbf{Y}^{(J)}(\hat{\mathbf{x}}_{ij})\otimes\mathbf{f}^k_{\text{in,}j,c_k})^{(l)}\)

Instead of performing all these steps and taking the tensor product with every type-J projection, the equivariant kernel aggregates all of these operations into a single kernel matrix-vector multiplication step.

Rules of Equivariant Layers

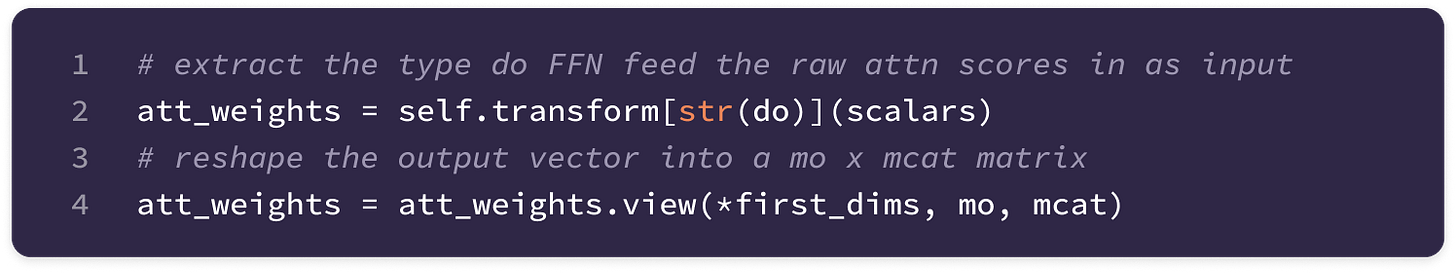

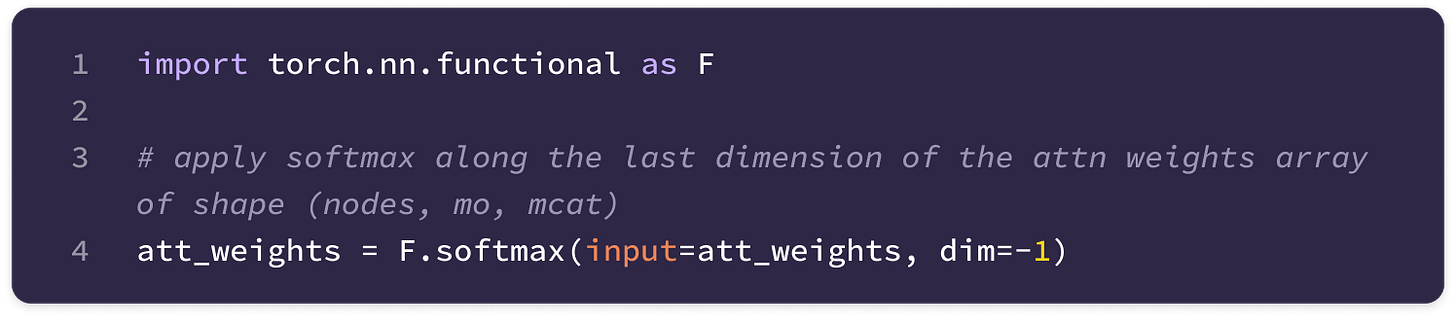

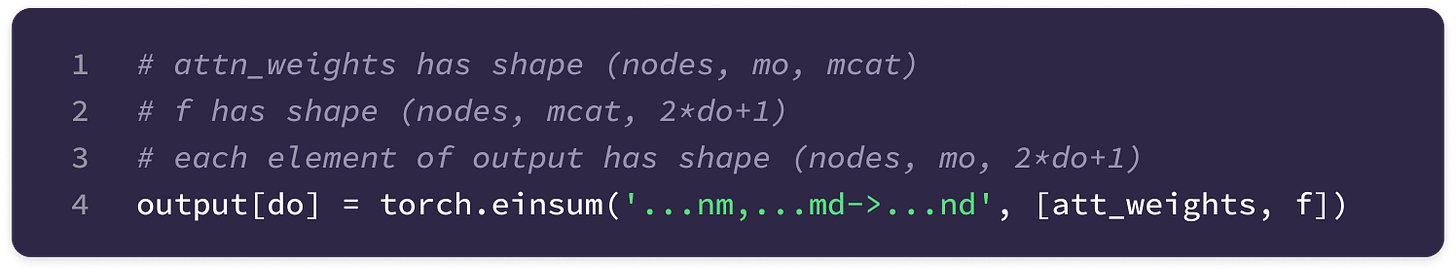

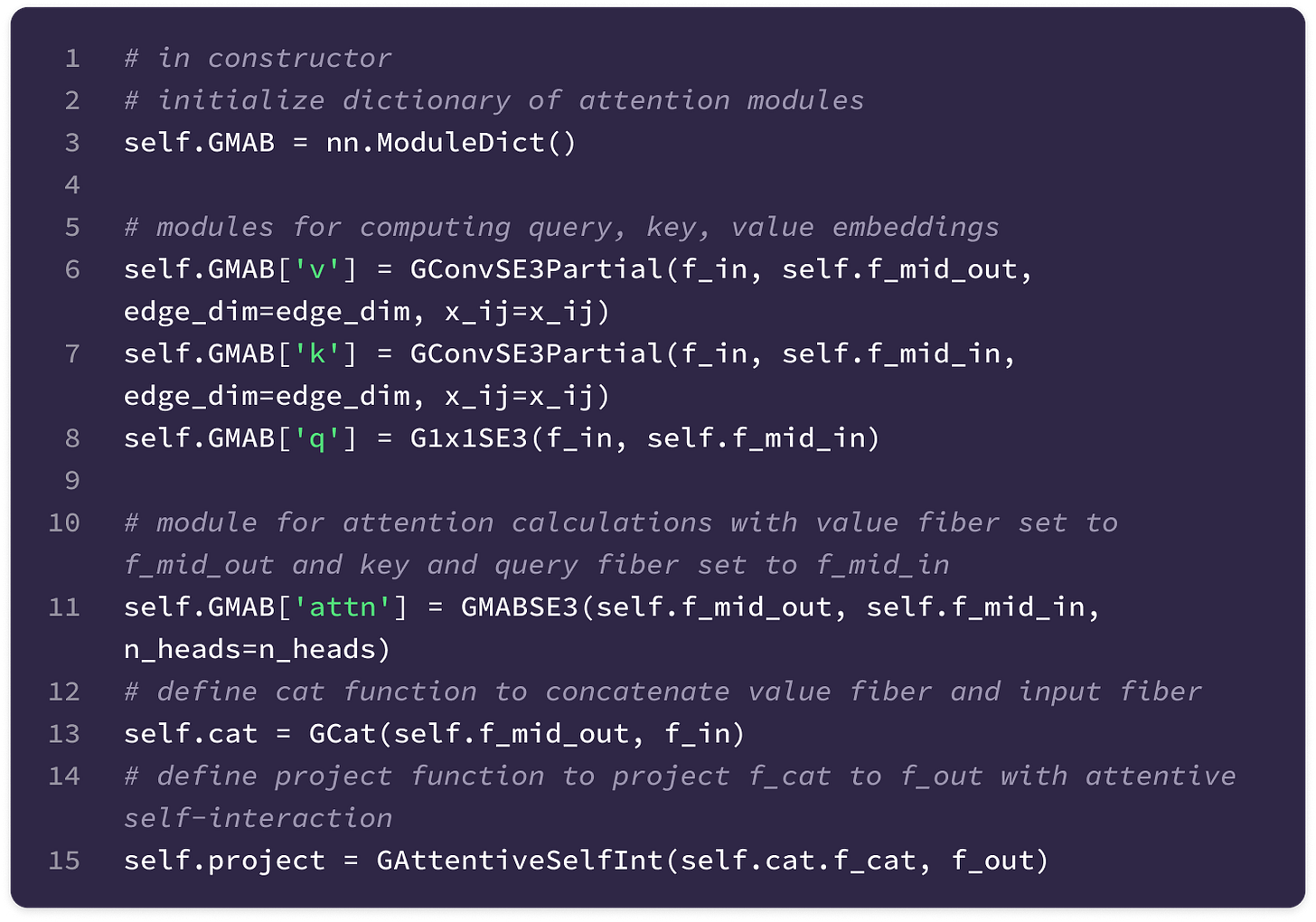

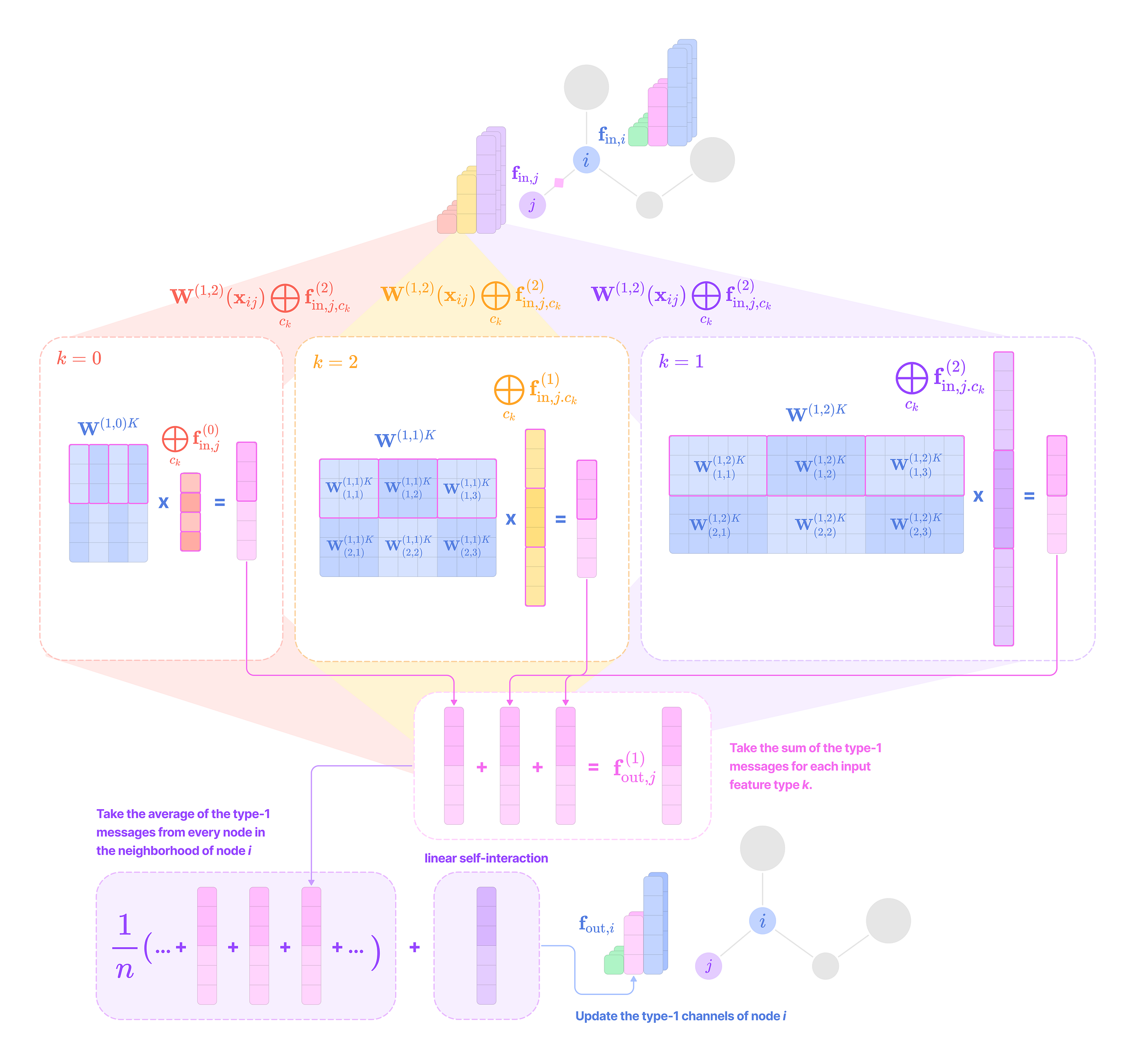

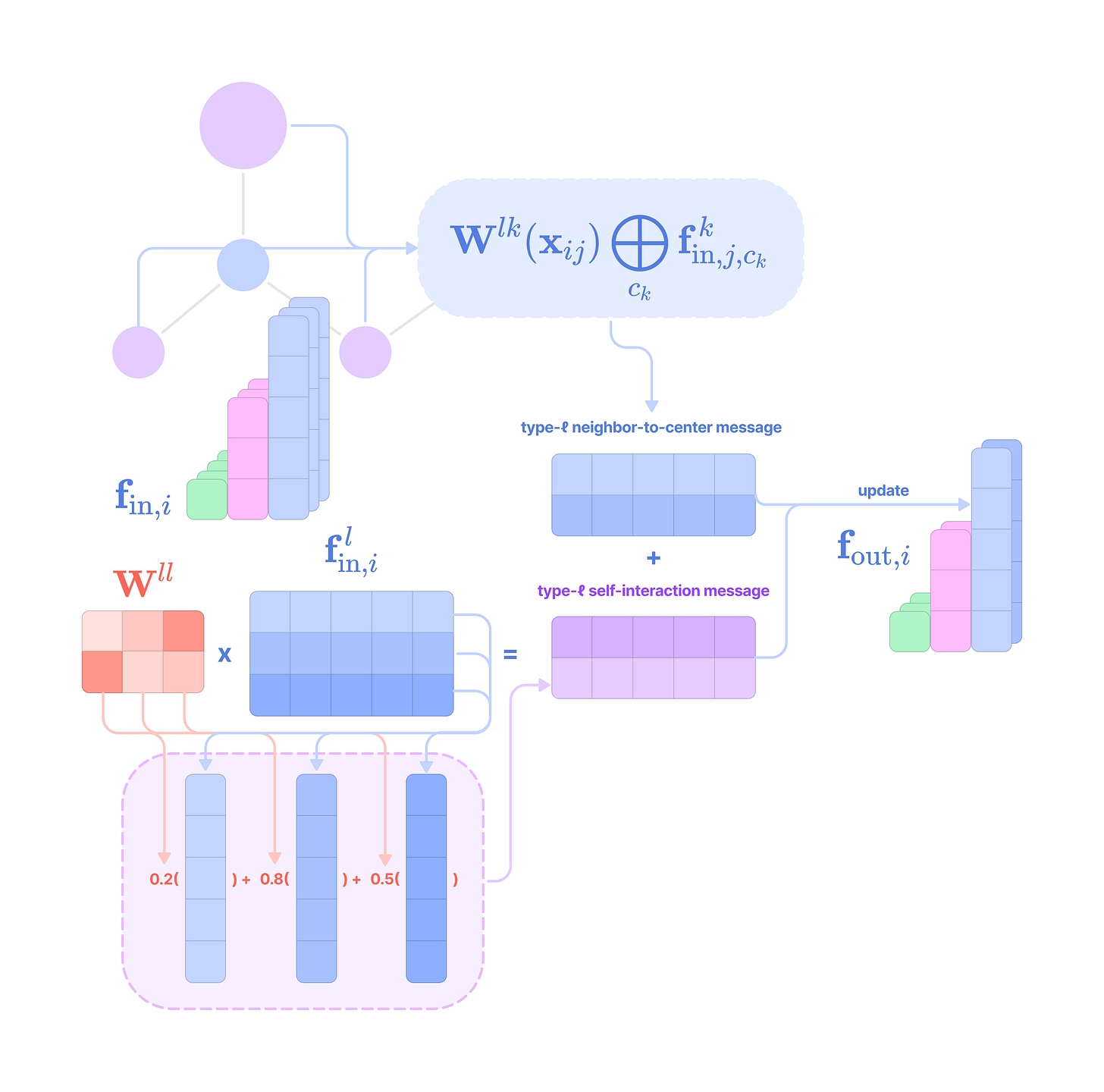

Now, let’s quickly solidify some general rules when constructing equivariant layers: